Mirror of https://github.com/huggingface/text-generation-inference.git (synced 2025-10-09 06:55:24 +00:00)

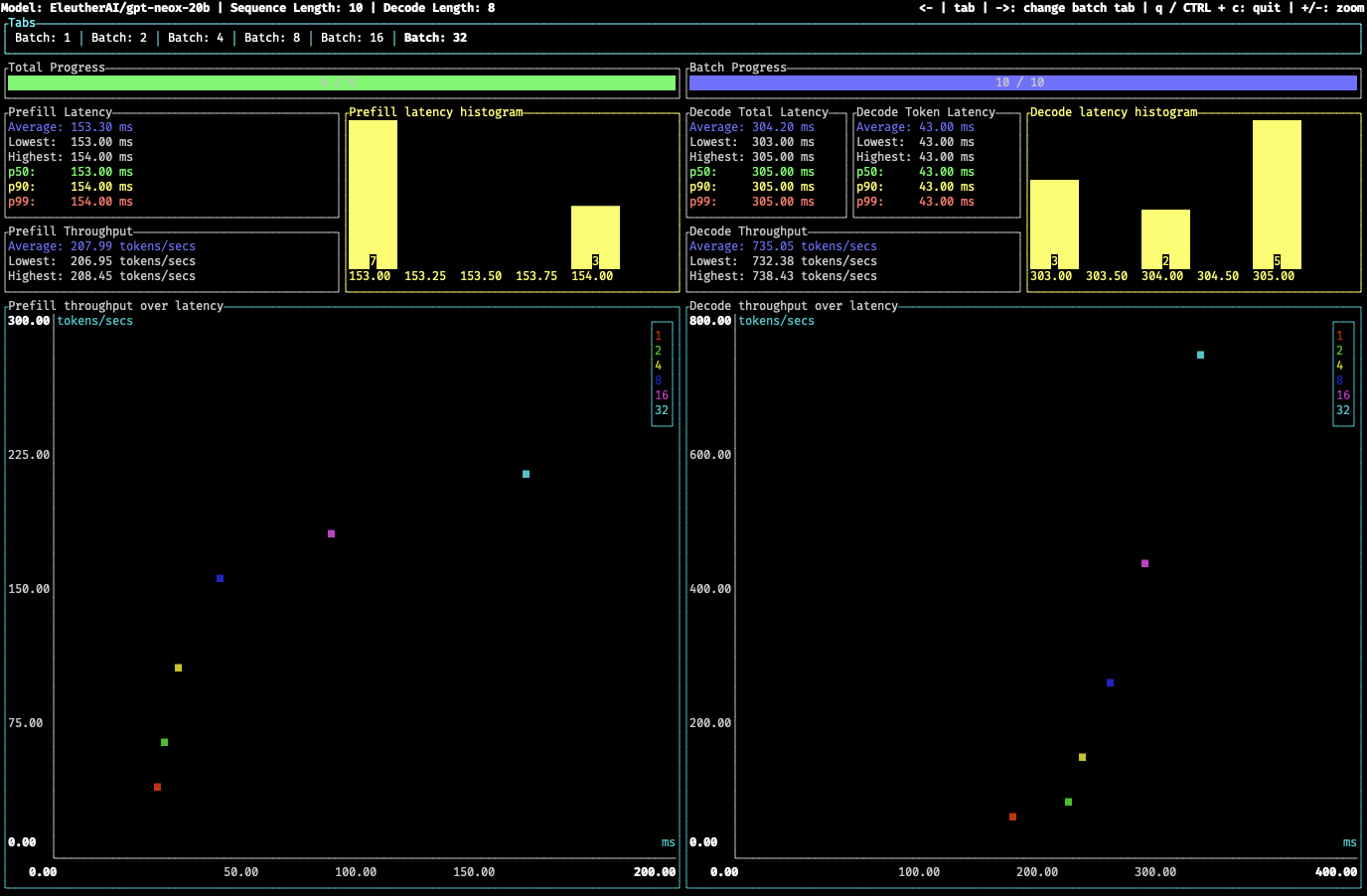

A lightweight benchmarking tool inspired by oha and powered by tui.

Install

make install-benchmark

Run

First, start text-generation-inference:

text-generation-launcher --model-id bigscience/bloom-560m

Then run the benchmarking tool:

text-generation-benchmark --tokenizer-name bigscience/bloom-560m
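
Because the benchmark should only start once the model has finished loading, it can help to script the wait between the two steps. The following is a minimal sketch, not part of the tool itself: `wait_until` is a hypothetical helper, and the commented usage assumes the launcher serves a `/health` route on `localhost:3000`; adjust the host and port to your setup.

```shell
#!/bin/sh
# wait_until ATTEMPTS DELAY COMMAND...
# Hypothetical helper: retries COMMAND until it succeeds or ATTEMPTS run out.
# Returns 0 on the first success, 1 if every attempt failed.
wait_until() {
  attempts=$1
  delay=$2
  shift 2
  i=0
  while [ "$i" -lt "$attempts" ]; do
    if "$@" >/dev/null 2>&1; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Example usage (assumed address and health route, see the note above):
#   wait_until 60 5 curl -sf http://localhost:3000/health \
#     && text-generation-benchmark --tokenizer-name bigscience/bloom-560m
```

Polling with a bounded number of attempts keeps the script from hanging forever if the launcher fails to start.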