mirror of https://github.com/huggingface/text-generation-inference.git

synced 2025-09-09 11:24:53 +00:00

Merge branch 'main' into gptq-cuda-kernels


commit edfbfdfb3f

302 Cargo.lock (generated)


@@ -148,18 +148,18 @@ checksum = "16e62a023e7c117e27523144c5d2459f4397fcc3cab0085af8e2224f643a0193"
 dependencies = [
  "proc-macro2",
  "quote",
- "syn 2.0.22",
+ "syn 2.0.25",
 ]
 
 [[package]]
 name = "async-trait"
-version = "0.1.68"
+version = "0.1.71"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "b9ccdd8f2a161be9bd5c023df56f1b2a0bd1d83872ae53b71a84a12c9bf6e842"
+checksum = "a564d521dd56509c4c47480d00b80ee55f7e385ae48db5744c67ad50c92d2ebf"
 dependencies = [
  "proc-macro2",
  "quote",
- "syn 2.0.22",
+ "syn 2.0.25",
 ]
 
 [[package]]
@@ -410,9 +410,9 @@ dependencies = [
 
 [[package]]
 name = "clap"
-version = "4.3.10"
+version = "4.3.11"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "384e169cc618c613d5e3ca6404dda77a8685a63e08660dcc64abaf7da7cb0c7a"
+checksum = "1640e5cc7fb47dbb8338fd471b105e7ed6c3cb2aeb00c2e067127ffd3764a05d"
 dependencies = [
  "clap_builder",
  "clap_derive",
@@ -421,9 +421,9 @@ dependencies = [
 
 [[package]]
 name = "clap_builder"
-version = "4.3.10"
+version = "4.3.11"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "ef137bbe35aab78bdb468ccfba75a5f4d8321ae011d34063770780545176af2d"
+checksum = "98c59138d527eeaf9b53f35a77fcc1fad9d883116070c63d5de1c7dc7b00c72b"
 dependencies = [
  "anstream",
  "anstyle",
@@ -440,7 +440,7 @@ dependencies = [
  "heck",
  "proc-macro2",
  "quote",
- "syn 2.0.22",
+ "syn 2.0.25",
 ]
 
 [[package]]
@@ -492,9 +492,9 @@ checksum = "e496a50fda8aacccc86d7529e2c1e0892dbd0f898a6b5645b5561b89c3210efa"
 
 [[package]]
 name = "cpufeatures"
-version = "0.2.8"
+version = "0.2.9"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "03e69e28e9f7f77debdedbaafa2866e1de9ba56df55a8bd7cfc724c25a09987c"
+checksum = "a17b76ff3a4162b0b27f354a0c87015ddad39d35f9c0c36607a3bdd175dde1f1"
 dependencies = [
  "libc",
 ]
@@ -633,12 +633,12 @@ dependencies = [
 
 [[package]]
 name = "dashmap"
-version = "5.4.0"
+version = "5.5.0"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "907076dfda823b0b36d2a1bb5f90c96660a5bbcd7729e10727f07858f22c4edc"
+checksum = "6943ae99c34386c84a470c499d3414f66502a41340aa895406e0d2e4a207b91d"
 dependencies = [
  "cfg-if",
- "hashbrown 0.12.3",
+ "hashbrown 0.14.0",
  "lock_api",
  "once_cell",
  "parking_lot_core",
@@ -736,6 +736,12 @@ dependencies = [
  "cfg-if",
 ]
 
+[[package]]
+name = "equivalent"
+version = "1.0.1"
+source = "registry+https://github.com/rust-lang/crates.io-index"
+checksum = "5443807d6dff69373d433ab9ef5378ad8df50ca6298caf15de6e52e24aaf54d5"
+
 [[package]]
 name = "errno"
 version = "0.3.1"
@@ -924,7 +930,7 @@ checksum = "89ca545a94061b6365f2c7355b4b32bd20df3ff95f02da9329b34ccc3bd6ee72"
 dependencies = [
  "proc-macro2",
  "quote",
- "syn 2.0.22",
+ "syn 2.0.25",
 ]
 
 [[package]]
@@ -1023,7 +1029,7 @@ dependencies = [
  "futures-sink",
  "futures-util",
  "http",
- "indexmap",
+ "indexmap 1.9.3",
  "slab",
  "tokio",
  "tokio-util",
@@ -1038,13 +1044,19 @@ checksum = "8a9ee70c43aaf417c914396645a0fa852624801b24ebb7ae78fe8272889ac888"
 
 [[package]]
 name = "hashbrown"
-version = "0.13.2"
+version = "0.13.1"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "43a3c133739dddd0d2990f9a4bdf8eb4b21ef50e4851ca85ab661199821d510e"
+checksum = "33ff8ae62cd3a9102e5637afc8452c55acf3844001bd5374e0b0bd7b6616c038"
 dependencies = [
  "ahash",
 ]
 
+[[package]]
+name = "hashbrown"
+version = "0.14.0"
+source = "registry+https://github.com/rust-lang/crates.io-index"
+checksum = "2c6201b9ff9fd90a5a3bac2e56a830d0caa509576f0e503818ee82c181b3437a"
+
 [[package]]
 name = "heck"
 version = "0.4.1"
@@ -1053,9 +1065,9 @@ checksum = "95505c38b4572b2d910cecb0281560f54b440a19336cbbcb27bf6ce6adc6f5a8"
 
 [[package]]
 name = "hermit-abi"
-version = "0.3.1"
+version = "0.3.2"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "fed44880c466736ef9a5c5b5facefb5ed0785676d0c02d612db14e54f0d84286"
+checksum = "443144c8cdadd93ebf52ddb4056d257f5b52c04d3c804e657d19eb73fc33668b"
 
 [[package]]
 name = "hmac"
@@ -1190,6 +1202,16 @@ checksum = "bd070e393353796e801d209ad339e89596eb4c8d430d18ede6a1cced8fafbd99"
 dependencies = [
  "autocfg",
  "hashbrown 0.12.3",
+]
+
+[[package]]
+name = "indexmap"
+version = "2.0.0"
+source = "registry+https://github.com/rust-lang/crates.io-index"
+checksum = "d5477fe2230a79769d8dc68e0eabf5437907c0457a5614a9e8dddb67f65eb65d"
+dependencies = [
+ "equivalent",
+ "hashbrown 0.14.0",
  "serde",
 ]
 
@@ -1254,12 +1276,12 @@ checksum = "28b29a3cd74f0f4598934efe3aeba42bae0eb4680554128851ebbecb02af14e6"
 
 [[package]]
 name = "is-terminal"
-version = "0.4.8"
+version = "0.4.9"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "24fddda5af7e54bf7da53067d6e802dbcc381d0a8eef629df528e3ebf68755cb"
+checksum = "cb0889898416213fab133e1d33a0e5858a48177452750691bde3666d0fdbaf8b"
 dependencies = [
  "hermit-abi",
- "rustix 0.38.1",
+ "rustix 0.38.4",
  "windows-sys 0.48.0",
 ]
 
@@ -1292,9 +1314,9 @@ dependencies = [
 
 [[package]]
 name = "itoa"
-version = "1.0.6"
+version = "1.0.8"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "453ad9f582a441959e5f0d088b02ce04cfe8d51a8eaf077f12ac6d3e94164ca6"
+checksum = "62b02a5381cc465bd3041d84623d0fa3b66738b52b8e2fc3bab8ad63ab032f4a"
 
 [[package]]
 name = "jobserver"
@@ -1397,7 +1419,7 @@ version = "0.1.0"
 source = "registry+https://github.com/rust-lang/crates.io-index"
 checksum = "8263075bb86c5a1b1427b5ae862e8889656f126e9f77c484496e8b47cf5c5558"
 dependencies = [
- "regex-automata",
+ "regex-automata 0.1.10",
 ]
 
 [[package]]
@@ -1432,9 +1454,9 @@ dependencies = [
 
 [[package]]
 name = "metrics"
-version = "0.21.0"
+version = "0.21.1"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "aa8ebbd1a9e57bbab77b9facae7f5136aea44c356943bf9a198f647da64285d6"
+checksum = "fde3af1a009ed76a778cb84fdef9e7dbbdf5775ae3e4cc1f434a6a307f6f76c5"
 dependencies = [
  "ahash",
  "metrics-macros",
@@ -1449,7 +1471,7 @@ checksum = "8a4964177ddfdab1e3a2b37aec7cf320e14169abb0ed73999f558136409178d5"
 dependencies = [
  "base64 0.21.2",
  "hyper",
- "indexmap",
+ "indexmap 1.9.3",
  "ipnet",
  "metrics",
  "metrics-util",
@@ -1467,18 +1489,18 @@ checksum = "ddece26afd34c31585c74a4db0630c376df271c285d682d1e55012197830b6df"
 dependencies = [
  "proc-macro2",
  "quote",
- "syn 2.0.22",
+ "syn 2.0.25",
 ]
 
 [[package]]
 name = "metrics-util"
-version = "0.15.0"
+version = "0.15.1"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "111cb375987443c3de8d503580b536f77dc8416d32db62d9456db5d93bd7ac47"
+checksum = "4de2ed6e491ed114b40b732e4d1659a9d53992ebd87490c44a6ffe23739d973e"
 dependencies = [
  "crossbeam-epoch",
  "crossbeam-utils",
- "hashbrown 0.13.2",
+ "hashbrown 0.13.1",
  "metrics",
  "num_cpus",
  "quanta",
@@ -1530,9 +1552,9 @@ dependencies = [
 
 [[package]]
 name = "monostate"
-version = "0.1.6"
+version = "0.1.8"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "0230b703f1ac35df1e24f6d0d2255472bcccaf657ecdfa4f1fcbcad1ad5bb98a"
+checksum = "3f3f57a8802842f648026a33c3d2e3bb41bb309a35b1609bd7ef2b060b8b6b1b"
 dependencies = [
  "monostate-impl",
  "serde",
@@ -1540,13 +1562,13 @@ dependencies = [
 
 [[package]]
 name = "monostate-impl"
-version = "0.1.6"
+version = "0.1.8"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "8795add3e14028f11f8e848bd3294898a8294767b3776b6f733560d33bd2530b"
+checksum = "e72f4d2e10fde62a0f2fcb4b44ccbf4f9899dcc30c9193449f8dfb9123d71377"
 dependencies = [
  "proc-macro2",
  "quote",
- "syn 2.0.22",
+ "syn 2.0.25",
 ]
 
 [[package]]
@@ -1701,6 +1723,15 @@ dependencies = [
  "libc",
 ]
 
+[[package]]
+name = "num_threads"
+version = "0.1.6"
+source = "registry+https://github.com/rust-lang/crates.io-index"
+checksum = "2819ce041d2ee131036f4fc9d6ae7ae125a3a40e97ba64d04fe799ad9dabbb44"
+dependencies = [
+ "libc",
+]
+
 [[package]]
 name = "number_prefix"
 version = "0.3.0"
@@ -1773,7 +1804,7 @@ checksum = "a948666b637a0f465e8564c73e89d4dde00d72d4d473cc972f390fc3dcee7d9c"
 dependencies = [
  "proc-macro2",
  "quote",
- "syn 2.0.22",
+ "syn 2.0.25",
 ]
 
 [[package]]
@@ -1854,7 +1885,7 @@ dependencies = [
  "fnv",
  "futures-channel",
  "futures-util",
- "indexmap",
+ "indexmap 1.9.3",
  "js-sys",
  "once_cell",
  "pin-project-lite",
@@ -1870,7 +1901,7 @@ dependencies = [
  "fnv",
  "futures-channel",
  "futures-util",
- "indexmap",
+ "indexmap 1.9.3",
  "once_cell",
  "pin-project-lite",
  "thiserror",
@@ -1974,9 +2005,9 @@ dependencies = [
 
 [[package]]
 name = "paste"
-version = "1.0.12"
+version = "1.0.13"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "9f746c4065a8fa3fe23974dd82f15431cc8d40779821001404d10d2e79ca7d79"
+checksum = "b4b27ab7be369122c218afc2079489cdcb4b517c0a3fc386ff11e1fedfcc2b35"
 
 [[package]]
 name = "pbkdf2"
@@ -2003,34 +2034,34 @@ source = "registry+https://github.com/rust-lang/crates.io-index"
 checksum = "4dd7d28ee937e54fe3080c91faa1c3a46c06de6252988a7f4592ba2310ef22a4"
 dependencies = [
  "fixedbitset",
- "indexmap",
+ "indexmap 1.9.3",
 ]
 
 [[package]]
 name = "pin-project"
-version = "1.1.1"
+version = "1.1.2"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "6e138fdd8263907a2b0e1b4e80b7e58c721126479b6e6eedfb1b402acea7b9bd"
+checksum = "030ad2bc4db10a8944cb0d837f158bdfec4d4a4873ab701a95046770d11f8842"
 dependencies = [
  "pin-project-internal",
 ]
 
 [[package]]
 name = "pin-project-internal"
-version = "1.1.1"
+version = "1.1.2"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "d1fef411b303e3e12d534fb6e7852de82da56edd937d895125821fb7c09436c7"
+checksum = "ec2e072ecce94ec471b13398d5402c188e76ac03cf74dd1a975161b23a3f6d9c"
 dependencies = [
  "proc-macro2",
  "quote",
- "syn 2.0.22",
+ "syn 2.0.25",
 ]
 
 [[package]]
 name = "pin-project-lite"
-version = "0.2.9"
+version = "0.2.10"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "e0a7ae3ac2f1173085d398531c705756c94a4c56843785df85a60c1a0afac116"
+checksum = "4c40d25201921e5ff0c862a505c6557ea88568a4e3ace775ab55e93f2f4f9d57"
 
 [[package]]
 name = "pin-utils"
@@ -2046,9 +2077,9 @@ checksum = "26072860ba924cbfa98ea39c8c19b4dd6a4a25423dbdf219c1eca91aa0cf6964"
 
 [[package]]
 name = "portable-atomic"
-version = "1.3.3"
+version = "1.4.0"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "767eb9f07d4a5ebcb39bbf2d452058a93c011373abf6832e24194a1c3f004794"
+checksum = "d220334a184db82b31b83f5ff093e3315280fb2b6bbc032022b2304a509aab7a"
 
 [[package]]
 name = "ppv-lite86"
@@ -2092,9 +2123,9 @@ dependencies = [
 
 [[package]]
 name = "proc-macro2"
-version = "1.0.63"
+version = "1.0.64"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "7b368fba921b0dce7e60f5e04ec15e565b3303972b42bcfde1d0713b881959eb"
+checksum = "78803b62cbf1f46fde80d7c0e803111524b9877184cfe7c3033659490ac7a7da"
 dependencies = [
  "unicode-ident",
 ]
@@ -2294,13 +2325,14 @@ dependencies = [
 
 [[package]]
 name = "regex"
-version = "1.8.4"
+version = "1.9.1"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "d0ab3ca65655bb1e41f2a8c8cd662eb4fb035e67c3f78da1d61dffe89d07300f"
+checksum = "b2eae68fc220f7cf2532e4494aded17545fce192d59cd996e0fe7887f4ceb575"
 dependencies = [
  "aho-corasick 1.0.2",
  "memchr",
- "regex-syntax 0.7.2",
+ "regex-automata 0.3.3",
+ "regex-syntax 0.7.4",
 ]
 
 [[package]]
@@ -2312,6 +2344,17 @@ dependencies = [
  "regex-syntax 0.6.29",
 ]
 
+[[package]]
+name = "regex-automata"
+version = "0.3.3"
+source = "registry+https://github.com/rust-lang/crates.io-index"
+checksum = "39354c10dd07468c2e73926b23bb9c2caca74c5501e38a35da70406f1d923310"
+dependencies = [
+ "aho-corasick 1.0.2",
+ "memchr",
+ "regex-syntax 0.7.4",
+]
+
 [[package]]
 name = "regex-syntax"
 version = "0.6.29"
@@ -2320,9 +2363,9 @@ checksum = "f162c6dd7b008981e4d40210aca20b4bd0f9b60ca9271061b07f78537722f2e1"
 
 [[package]]
 name = "regex-syntax"
-version = "0.7.2"
+version = "0.7.4"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "436b050e76ed2903236f032a59761c1eb99e1b0aead2c257922771dab1fc8c78"
+checksum = "e5ea92a5b6195c6ef2a0295ea818b312502c6fc94dde986c5553242e18fd4ce2"
 
 [[package]]
 name = "reqwest"
@@ -2397,7 +2440,7 @@ dependencies = [
  "quote",
  "rust-embed-utils",
  "shellexpand",
- "syn 2.0.22",
+ "syn 2.0.25",
  "walkdir",
 ]
 
@@ -2428,9 +2471,9 @@ dependencies = [
 
 [[package]]
 name = "rustix"
-version = "0.37.21"
+version = "0.37.23"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "62f25693a73057a1b4cb56179dd3c7ea21a7c6c5ee7d85781f5749b46f34b79c"
+checksum = "4d69718bf81c6127a49dc64e44a742e8bb9213c0ff8869a22c308f84c1d4ab06"
 dependencies = [
  "bitflags 1.3.2",
  "errno",
@@ -2442,9 +2485,9 @@ dependencies = [
 
 [[package]]
 name = "rustix"
-version = "0.38.1"
+version = "0.38.4"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "fbc6396159432b5c8490d4e301d8c705f61860b8b6c863bf79942ce5401968f3"
+checksum = "0a962918ea88d644592894bc6dc55acc6c0956488adcebbfb6e273506b7fd6e5"
 dependencies = [
  "bitflags 2.3.3",
  "errno",
@@ -2476,15 +2519,15 @@ dependencies = [
 
 [[package]]
 name = "rustversion"
-version = "1.0.12"
+version = "1.0.13"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "4f3208ce4d8448b3f3e7d168a73f5e0c43a61e32930de3bceeccedb388b6bf06"
+checksum = "dc31bd9b61a32c31f9650d18add92aa83a49ba979c143eefd27fe7177b05bd5f"
 
 [[package]]
 name = "ryu"
-version = "1.0.13"
+version = "1.0.14"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "f91339c0467de62360649f8d3e185ca8de4224ff281f66000de5eb2a77a79041"
+checksum = "fe232bdf6be8c8de797b22184ee71118d63780ea42ac85b61d1baa6d3b782ae9"
 
 [[package]]
 name = "same-file"
@@ -2497,11 +2540,11 @@ dependencies = [
 
 [[package]]
 name = "schannel"
-version = "0.1.21"
+version = "0.1.22"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "713cfb06c7059f3588fb8044c0fad1d09e3c01d225e25b9220dbfdcf16dbb1b3"
+checksum = "0c3733bf4cf7ea0880754e19cb5a462007c4a8c1914bff372ccc95b464f1df88"
 dependencies = [
- "windows-sys 0.42.0",
+ "windows-sys 0.48.0",
 ]
 
 [[package]]
@@ -2551,29 +2594,29 @@ checksum = "bebd363326d05ec3e2f532ab7660680f3b02130d780c299bca73469d521bc0ed"
 
 [[package]]
 name = "serde"
-version = "1.0.164"
+version = "1.0.171"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "9e8c8cf938e98f769bc164923b06dce91cea1751522f46f8466461af04c9027d"
+checksum = "30e27d1e4fd7659406c492fd6cfaf2066ba8773de45ca75e855590f856dc34a9"
 dependencies = [
  "serde_derive",
 ]
 
 [[package]]
 name = "serde_derive"
-version = "1.0.164"
+version = "1.0.171"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "d9735b638ccc51c28bf6914d90a2e9725b377144fc612c49a611fddd1b631d68"
+checksum = "389894603bd18c46fa56231694f8d827779c0951a667087194cf9de94ed24682"
 dependencies = [
  "proc-macro2",
  "quote",
- "syn 2.0.22",
+ "syn 2.0.25",
 ]
 
 [[package]]
 name = "serde_json"
-version = "1.0.99"
+version = "1.0.102"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "46266871c240a00b8f503b877622fe33430b3c7d963bdc0f2adc511e54a1eae3"
+checksum = "b5062a995d481b2308b6064e9af76011f2921c35f97b0468811ed9f6cd91dfed"
 dependencies = [
  "itoa",
  "ryu",
@@ -2582,10 +2625,11 @@ dependencies = [
 
 [[package]]
 name = "serde_path_to_error"
-version = "0.1.11"
+version = "0.1.13"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "f7f05c1d5476066defcdfacce1f52fc3cae3af1d3089727100c02ae92e5abbe0"
+checksum = "8acc4422959dd87a76cb117c191dcbffc20467f06c9100b76721dab370f24d3a"
 dependencies = [
+ "itoa",
  "serde",
 ]
 
@@ -2697,9 +2741,9 @@ dependencies = [
 
 [[package]]
 name = "smallvec"
-version = "1.10.0"
+version = "1.11.0"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "a507befe795404456341dfab10cef66ead4c041f62b8b11bbb92bffe5d0953e0"
+checksum = "62bb4feee49fdd9f707ef802e22365a35de4b7b299de4763d44bfea899442ff9"
 
 [[package]]
 name = "socket2"
@@ -2769,9 +2813,9 @@ dependencies = [
 
 [[package]]
 name = "syn"
-version = "2.0.22"
+version = "2.0.25"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "2efbeae7acf4eabd6bcdcbd11c92f45231ddda7539edc7806bd1a04a03b24616"
+checksum = "15e3fc8c0c74267e2df136e5e5fb656a464158aa57624053375eb9c8c6e25ae2"
 dependencies = [
  "proc-macro2",
  "quote",
@@ -2786,9 +2830,9 @@ checksum = "2047c6ded9c721764247e62cd3b03c09ffc529b2ba5b10ec482ae507a4a70160"
 
 [[package]]
 name = "sysinfo"
-version = "0.29.3"
+version = "0.29.4"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "5bcd0346f90b6bc83526c7b180039a8acd26a5c848cc556d457f6472eb148122"
+checksum = "751e810399bba86e9326f5762b7f32ac5a085542df78da6a78d94e07d14d7c11"
 dependencies = [
  "cfg-if",
  "core-foundation-sys",
@@ -2824,9 +2868,9 @@ dependencies = [
 
 [[package]]
 name = "tar"
-version = "0.4.38"
+version = "0.4.39"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "4b55807c0344e1e6c04d7c965f5289c39a8d94ae23ed5c0b57aabac549f871c6"
+checksum = "ec96d2ffad078296368d46ff1cb309be1c23c513b4ab0e22a45de0185275ac96"
 dependencies = [
  "filetime",
  "libc",
@@ -2843,13 +2887,13 @@ dependencies = [
  "cfg-if",
  "fastrand",
  "redox_syscall 0.3.5",
- "rustix 0.37.21",
+ "rustix 0.37.23",
  "windows-sys 0.48.0",
 ]
 
 [[package]]
 name = "text-generation-benchmark"
-version = "0.9.1"
+version = "0.9.3"
 dependencies = [
  "average",
  "clap",
@@ -2869,7 +2913,7 @@ dependencies = [
 
 [[package]]
 name = "text-generation-client"
-version = "0.9.1"
+version = "0.9.3"
 dependencies = [
  "futures",
  "grpc-metadata",
@@ -2885,7 +2929,7 @@ dependencies = [
 
 [[package]]
 name = "text-generation-launcher"
-version = "0.9.1"
+version = "0.9.3"
 dependencies = [
  "clap",
  "ctrlc",
@@ -2901,7 +2945,7 @@ dependencies = [

 [[package]]
 name = "text-generation-router"
-version = "0.9.1"
+version = "0.9.3"
 dependencies = [
 "async-stream",
 "axum",
@@ -2934,22 +2978,22 @@ dependencies = [

 [[package]]
 name = "thiserror"
-version = "1.0.40"
+version = "1.0.43"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "978c9a314bd8dc99be594bc3c175faaa9794be04a5a5e153caba6915336cebac"
+checksum = "a35fc5b8971143ca348fa6df4f024d4d55264f3468c71ad1c2f365b0a4d58c42"
 dependencies = [
 "thiserror-impl",
 ]

 [[package]]
 name = "thiserror-impl"
-version = "1.0.40"
+version = "1.0.43"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "f9456a42c5b0d803c8cd86e73dd7cc9edd429499f37a3550d286d5e86720569f"
+checksum = "463fe12d7993d3b327787537ce8dd4dfa058de32fc2b195ef3cde03dc4771e8f"
 dependencies = [
 "proc-macro2",
 "quote",
-"syn 2.0.22",
+"syn 2.0.25",
 ]

 [[package]]
@@ -2964,11 +3008,13 @@ dependencies = [

 [[package]]
 name = "time"
-version = "0.3.22"
+version = "0.3.23"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "ea9e1b3cf1243ae005d9e74085d4d542f3125458f3a81af210d901dcd7411efd"
+checksum = "59e399c068f43a5d116fedaf73b203fa4f9c519f17e2b34f63221d3792f81446"
 dependencies = [
 "itoa",
+"libc",
+"num_threads",
 "serde",
 "time-core",
 "time-macros",
@@ -2982,9 +3028,9 @@ checksum = "7300fbefb4dadc1af235a9cef3737cea692a9d97e1b9cbcd4ebdae6f8868e6fb"

 [[package]]
 name = "time-macros"
-version = "0.2.9"
+version = "0.2.10"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "372950940a5f07bf38dbe211d7283c9e6d7327df53794992d293e534c733d09b"
+checksum = "96ba15a897f3c86766b757e5ac7221554c6750054d74d5b28844fce5fb36a6c4"
 dependencies = [
 "time-core",
 ]
@@ -3078,7 +3124,7 @@ checksum = "630bdcf245f78637c13ec01ffae6187cca34625e8c63150d424b59e55af2675e"
 dependencies = [
 "proc-macro2",
 "quote",
-"syn 2.0.22",
+"syn 2.0.25",
 ]

 [[package]]
@@ -3209,7 +3255,7 @@ checksum = "b8fa9be0de6cf49e536ce1851f987bd21a43b771b09473c3549a6c853db37c1c"
 dependencies = [
 "futures-core",
 "futures-util",
-"indexmap",
+"indexmap 1.9.3",
 "pin-project",
 "pin-project-lite",
 "rand",
@@ -3291,7 +3337,7 @@ checksum = "5f4f31f56159e98206da9efd823404b79b6ef3143b4a7ab76e67b1751b25a4ab"
 dependencies = [
 "proc-macro2",
 "quote",
-"syn 2.0.22",
+"syn 2.0.25",
 ]

 [[package]]
@@ -3413,9 +3459,9 @@ checksum = "92888ba5573ff080736b3648696b70cafad7d250551175acbaa4e0385b3e1460"

 [[package]]
 name = "unicode-ident"
-version = "1.0.9"
+version = "1.0.10"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "b15811caf2415fb889178633e7724bad2509101cde276048e013b9def5e51fa0"
+checksum = "22049a19f4a68748a168c0fc439f9516686aa045927ff767eca0a85101fb6e73"

 [[package]]
 name = "unicode-normalization"
@@ -3484,11 +3530,11 @@ checksum = "711b9620af191e0cdc7468a8d14e709c3dcdb115b36f838e601583af800a370a"

 [[package]]
 name = "utoipa"
-version = "3.3.0"
+version = "3.4.0"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "68ae74ef183fae36d650f063ae7bde1cacbe1cd7e72b617cbe1e985551878b98"
+checksum = "520434cac5c98120177d5cc15be032703f6dca7d5ef82e725c798113b375000a"
 dependencies = [
-"indexmap",
+"indexmap 2.0.0",
 "serde",
 "serde_json",
 "utoipa-gen",
@@ -3496,21 +3542,22 @@ dependencies = [

 [[package]]
 name = "utoipa-gen"
-version = "3.3.0"
+version = "3.4.1"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "7ea8ac818da7e746a63285594cce8a96f5e00ee31994e655bd827569cb8b137b"
+checksum = "6e22e88a487b6e0374533871b79b1f5ded05671bd0936bd547eb42f82fb9060d"
 dependencies = [
 "proc-macro-error",
 "proc-macro2",
 "quote",
-"syn 2.0.22",
+"regex",
+"syn 2.0.25",
 ]

 [[package]]
 name = "utoipa-swagger-ui"
-version = "3.1.3"
+version = "3.1.4"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "062bba5a3568e126ac72049a63254f4cb1da2eb713db0c1ab2a4c76be191db8c"
+checksum = "4602d7100d3cfd8a086f30494e68532402ab662fa366c9d201d677e33cee138d"
 dependencies = [
 "axum",
 "mime_guess",
@@ -3536,9 +3583,9 @@ checksum = "accd4ea62f7bb7a82fe23066fb0957d48ef677f6eeb8215f372f52e48bb32426"

 [[package]]
 name = "vergen"
-version = "8.2.1"
+version = "8.2.4"
 source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "8b3c89c2c7e50f33e4d35527e5bf9c11d6d132226dbbd1753f0fbe9f19ef88c6"
+checksum = "bbc5ad0d9d26b2c49a5ab7da76c3e79d3ee37e7821799f8223fcb8f2f391a2e7"
 dependencies = [
 "anyhow",
 "rustc_version",
@@ -3599,7 +3646,7 @@ dependencies = [
 "once_cell",
 "proc-macro2",
 "quote",
-"syn 2.0.22",
+"syn 2.0.25",
 "wasm-bindgen-shared",
 ]

@@ -3633,7 +3680,7 @@ checksum = "54681b18a46765f095758388f2d0cf16eb8d4169b639ab575a8f5693af210c7b"
 dependencies = [
 "proc-macro2",
 "quote",
-"syn 2.0.22",
+"syn 2.0.25",
 "wasm-bindgen-backend",
 "wasm-bindgen-shared",
 ]
@@ -3706,21 +3753,6 @@ version = "0.4.0"
 source = "registry+https://github.com/rust-lang/crates.io-index"
 checksum = "712e227841d057c1ee1cd2fb22fa7e5a5461ae8e48fa2ca79ec42cfc1931183f"

-[[package]]
-name = "windows-sys"
-version = "0.42.0"
-source = "registry+https://github.com/rust-lang/crates.io-index"
-checksum = "5a3e1820f08b8513f676f7ab6c1f99ff312fb97b553d30ff4dd86f9f15728aa7"
-dependencies = [
-"windows_aarch64_gnullvm 0.42.2",
-"windows_aarch64_msvc 0.42.2",
-"windows_i686_gnu 0.42.2",
-"windows_i686_msvc 0.42.2",
-"windows_x86_64_gnu 0.42.2",
-"windows_x86_64_gnullvm 0.42.2",
-"windows_x86_64_msvc 0.42.2",
-]
-
 [[package]]
 name = "windows-sys"
 version = "0.45.0"
@@ -8,7 +8,7 @@ members = [
 ]

 [workspace.package]
-version = "0.9.1"
+version = "0.9.3"
 edition = "2021"
 authors = ["Olivier Dehaene"]
 homepage = "https://github.com/huggingface/text-generation-inference"
15

Dockerfile

@@ -98,6 +98,16 @@ COPY server/Makefile-flash-att Makefile
 # Build specific version of flash attention
 RUN make build-flash-attention

+# Build Flash Attention v2 CUDA kernels
+FROM kernel-builder as flash-att-v2-builder
+
+WORKDIR /usr/src
+
+COPY server/Makefile-flash-att-v2 Makefile
+
+# Build specific version of flash attention v2
+RUN make build-flash-attention-v2
+
 # Build Transformers CUDA kernels
 FROM kernel-builder as custom-kernels-builder

@@ -146,8 +156,11 @@ COPY --from=flash-att-builder /usr/src/flash-attention/build/lib.linux-x86_64-cp
 COPY --from=flash-att-builder /usr/src/flash-attention/csrc/layer_norm/build/lib.linux-x86_64-cpython-39 /opt/conda/lib/python3.9/site-packages
 COPY --from=flash-att-builder /usr/src/flash-attention/csrc/rotary/build/lib.linux-x86_64-cpython-39 /opt/conda/lib/python3.9/site-packages

+# Copy build artifacts from flash attention v2 builder
+COPY --from=flash-att-v2-builder /usr/src/flash-attention-v2/build/lib.linux-x86_64-cpython-39 /opt/conda/lib/python3.9/site-packages
+
 # Copy build artifacts from custom kernels builder
-COPY --from=custom-kernels-builder /usr/src/build/lib.linux-x86_64-cpython-39/custom_kernels /usr/src/custom-kernels/src/custom_kernels
+COPY --from=custom-kernels-builder /usr/src/build/lib.linux-x86_64-cpython-39 /opt/conda/lib/python3.9/site-packages

 # Copy builds artifacts from vllm builder
 COPY --from=vllm-builder /usr/src/vllm/build/lib.linux-x86_64-cpython-39 /opt/conda/lib/python3.9/site-packages
13

README.md

@@ -1,5 +1,7 @@
 <div align="center">

+
+
 # Text Generation Inference

 <a href="https://github.com/huggingface/text-generation-inference">
@ -11,9 +13,6 @@

|

|||||||

<a href="https://huggingface.github.io/text-generation-inference">

|

<a href="https://huggingface.github.io/text-generation-inference">

|

||||||

<img alt="Swagger API documentation" src="https://img.shields.io/badge/API-Swagger-informational">

|

<img alt="Swagger API documentation" src="https://img.shields.io/badge/API-Swagger-informational">

|

||||||

</a>

|

</a>

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

</div>

|

</div>

|

||||||

|

|

||||||

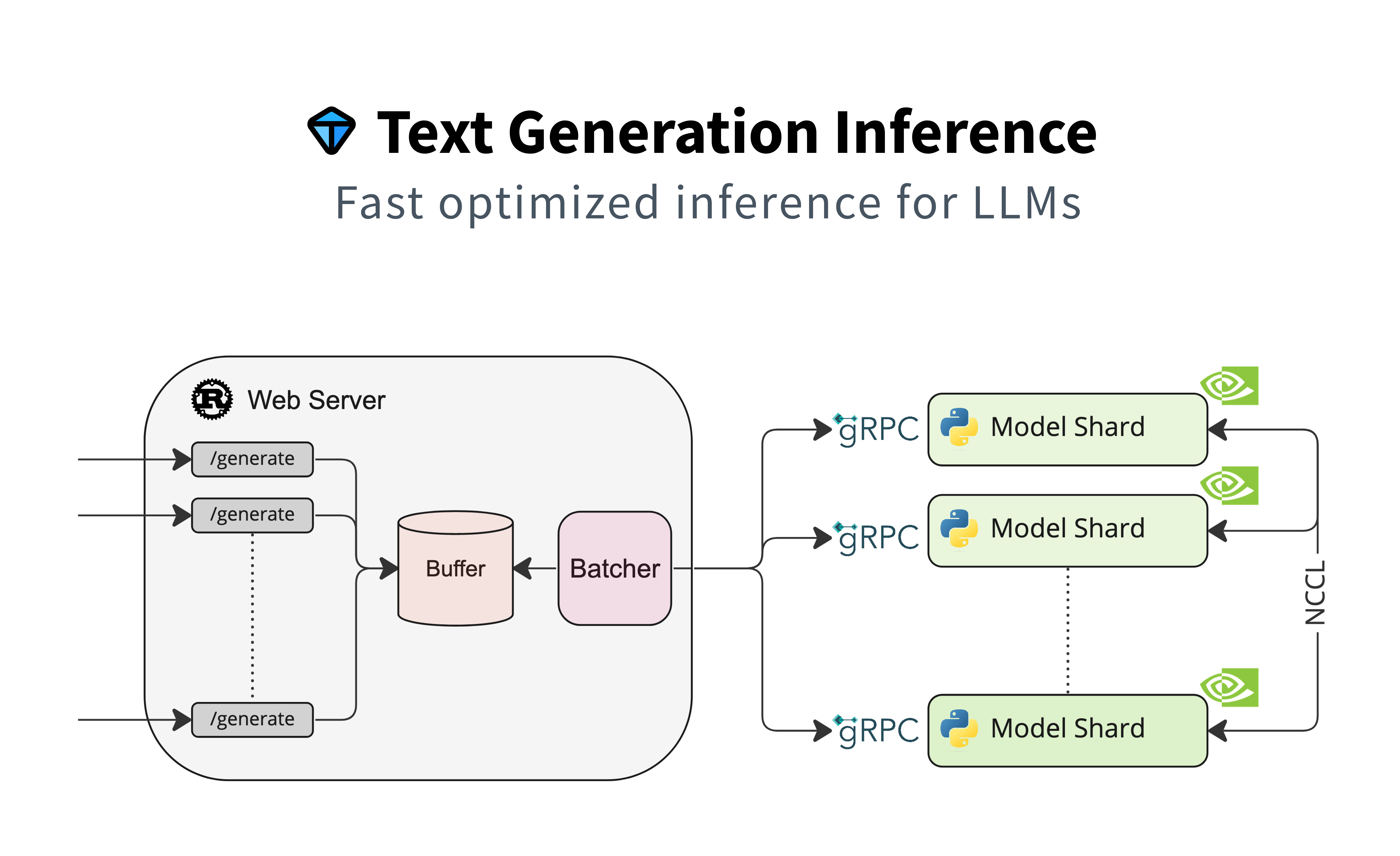

A Rust, Python and gRPC server for text generation inference. Used in production at [HuggingFace](https://huggingface.co)

|

A Rust, Python and gRPC server for text generation inference. Used in production at [HuggingFace](https://huggingface.co)

|

||||||

@@ -64,6 +63,8 @@ to power LLMs api-inference widgets.
 - [Starcoder](https://huggingface.co/bigcode/starcoder)
 - [Falcon 7B](https://huggingface.co/tiiuae/falcon-7b)
 - [Falcon 40B](https://huggingface.co/tiiuae/falcon-40b)
+- [MPT](https://huggingface.co/mosaicml/mpt-30b)
+- [Llama V2](https://huggingface.co/meta-llama)

 Other architectures are supported on a best effort basis using:

@@ -133,6 +134,10 @@ print(text)
 You can consult the OpenAPI documentation of the `text-generation-inference` REST API using the `/docs` route.
 The Swagger UI is also available at: [https://huggingface.github.io/text-generation-inference](https://huggingface.github.io/text-generation-inference).

+### Using on private models or gated models
+
+You can use `HUGGING_FACE_HUB_TOKEN` environment variable to set the token used by `text-generation-inference` to give access to protected ressources.
+
 ### Distributed Tracing

 `text-generation-inference` is instrumented with distributed tracing using OpenTelemetry. You can use this feature
@@ -212,7 +217,7 @@ sudo apt-get install libssl-dev gcc -y
 ### CUDA Kernels

 The custom CUDA kernels are only tested on NVIDIA A100s. If you have any installation or runtime issues, you can remove
-the kernels by using the `BUILD_EXTENSIONS=False` environment variable.
+the kernels by using the `DISABLE_CUSTOM_KERNELS=True` environment variable.

 Be aware that the official Docker image has them enabled by default.

@@ -10,7 +10,7 @@
 "name": "Apache 2.0",
 "url": "https://www.apache.org/licenses/LICENSE-2.0"
 },
-"version": "0.9.1"
+"version": "0.9.3"
 },
 "paths": {
 "/": {
@@ -13,7 +13,7 @@ nix = "0.26.2"
 serde = { version = "1.0.152", features = ["derive"] }
 serde_json = "1.0.93"
 tracing = "0.1.37"
-tracing-subscriber = { version = "0.3.16", features = ["json"] }
+tracing-subscriber = { version = "0.3.16", features = ["json", "env-filter"] }

 [dev-dependencies]
 float_eq = "1.0.1"
@@ -4,10 +4,10 @@ use nix::unistd::Pid;
 use serde::Deserialize;
 use std::env;
 use std::ffi::OsString;
-use std::io::{BufRead, BufReader, Read};
+use std::io::{BufRead, BufReader, Lines, Read};
 use std::os::unix::process::{CommandExt, ExitStatusExt};
 use std::path::Path;
-use std::process::{Child, Command, Stdio};
+use std::process::{Child, Command, ExitStatus, Stdio};
 use std::sync::atomic::{AtomicBool, Ordering};
 use std::sync::mpsc::TryRecvError;
 use std::sync::{mpsc, Arc};
@@ -15,6 +15,7 @@ use std::thread;
 use std::thread::sleep;
 use std::time::{Duration, Instant};
 use std::{fs, io};
+use tracing_subscriber::EnvFilter;

 mod env_runtime;

@@ -41,6 +42,7 @@ impl std::fmt::Display for Quantization {
 #[derive(Clone, Copy, Debug, ValueEnum)]
 enum Dtype {
 Float16,
+#[clap(name = "bfloat16")]
 BFloat16,
 }

@@ -182,8 +184,8 @@ struct Args {
 /// depends on other parameters like if you're using quantization, flash attention
 /// or the model implementation, text-generation-inference cannot infer this number
 /// automatically.
-#[clap(default_value = "16000", long, env)]
-max_batch_total_tokens: u32,
+#[clap(long, env)]
+max_batch_total_tokens: Option<u32>,

 /// This setting defines how many tokens can be passed before forcing the waiting
 /// queries to be put on the batch (if the size of the batch allows for it).
@@ -265,17 +267,9 @@ struct Args {
 #[clap(long, env)]
 ngrok_authtoken: Option<String>,

-/// ngrok domain name where the axum webserver will be available at
+/// ngrok edge
 #[clap(long, env)]
-ngrok_domain: Option<String>,
+ngrok_edge: Option<String>,

-/// ngrok basic auth username
-#[clap(long, env)]
-ngrok_username: Option<String>,
-
-/// ngrok basic auth password
-#[clap(long, env)]
-ngrok_password: Option<String>,
-
 /// Display a lot of information about your runtime environment
 #[clap(long, short, action)]
@@ -285,7 +279,7 @@ struct Args {
 #[derive(Debug)]
 enum ShardStatus {
 Ready,
-Failed((usize, Option<String>)),
+Failed(usize),
 }

 #[allow(clippy::too_many_arguments)]
@@ -310,6 +304,9 @@ fn shard_manager(
 shutdown: Arc<AtomicBool>,
 _shutdown_sender: mpsc::Sender<()>,
 ) {
+// Enter shard-manager tracing span
+let _span = tracing::span!(tracing::Level::INFO, "shard-manager", rank = rank).entered();
+
 // Get UDS path
 let uds_string = format!("{uds_path}-{rank}");
 let uds = Path::new(&uds_string);
@@ -319,7 +316,7 @@ fn shard_manager(
 }

 // Process args
-let mut shard_argv = vec![
+let mut shard_args = vec![
 "serve".to_string(),
 model_id,
 "--uds-path".to_string(),
@@ -331,77 +328,71 @@ fn shard_manager(

 // Activate trust remote code
 if trust_remote_code {
-shard_argv.push("--trust-remote-code".to_string());
+shard_args.push("--trust-remote-code".to_string());
 }

 // Activate tensor parallelism
 if world_size > 1 {
-shard_argv.push("--sharded".to_string());
+shard_args.push("--sharded".to_string());
 }

 if let Some(quantize) = quantize {
-shard_argv.push("--quantize".to_string());
-shard_argv.push(quantize.to_string())
+shard_args.push("--quantize".to_string());
+shard_args.push(quantize.to_string())
 }
