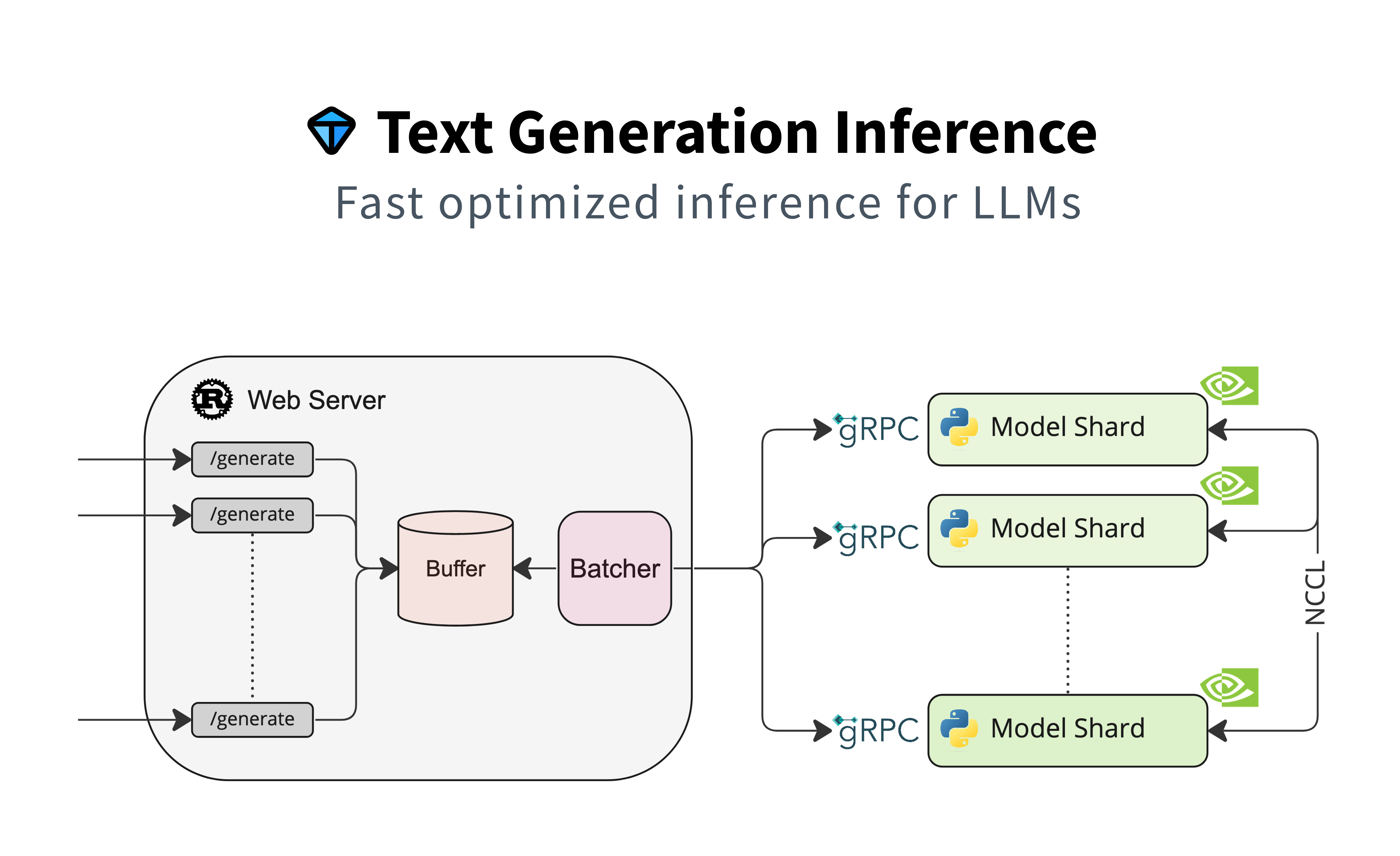

+# Text Generation Inference
