Mirror of https://github.com/huggingface/text-generation-inference.git (synced 2025-09-16 06:44:52 +00:00)

Add changes from Optimum Habana's TGI folder

parent 7a6fad6aac
commit cc744ba426

Dockerfile (159 changed lines)

@@ -2,8 +2,6 @@
 FROM lukemathwalker/cargo-chef:latest-rust-1.71 AS chef
 WORKDIR /usr/src
 
-ARG CARGO_REGISTRIES_CRATES_IO_PROTOCOL=sparse
-
 FROM chef as planner
 COPY Cargo.toml Cargo.toml
 COPY rust-toolchain.toml rust-toolchain.toml
@@ -15,9 +13,6 @@ RUN cargo chef prepare --recipe-path recipe.json
 
 FROM chef AS builder
 
-ARG GIT_SHA
-ARG DOCKER_LABEL
-
 RUN PROTOC_ZIP=protoc-21.12-linux-x86_64.zip && \
 curl -OL https://github.com/protocolbuffers/protobuf/releases/download/v21.12/$PROTOC_ZIP && \
 unzip -o $PROTOC_ZIP -d /usr/local bin/protoc && \
@@ -35,123 +30,18 @@ COPY router router
 COPY launcher launcher
 RUN cargo build --release
 
-# Python builder
-# Adapted from: https://github.com/pytorch/pytorch/blob/master/Dockerfile
-FROM debian:bullseye-slim as pytorch-install
-
-ARG PYTORCH_VERSION=2.0.1
-ARG PYTHON_VERSION=3.9
-# Keep in sync with `server/pyproject.toml
-ARG CUDA_VERSION=11.8
-ARG MAMBA_VERSION=23.1.0-1
-ARG CUDA_CHANNEL=nvidia
-ARG INSTALL_CHANNEL=pytorch
-# Automatically set by buildx
-ARG TARGETPLATFORM
-
-ENV PATH /opt/conda/bin:$PATH
-
-RUN apt-get update && DEBIAN_FRONTEND=noninteractive apt-get install -y --no-install-recommends \
-build-essential \
-ca-certificates \
-ccache \
-curl \
-git && \
-rm -rf /var/lib/apt/lists/*
-
-# Install conda
-# translating Docker's TARGETPLATFORM into mamba arches
-RUN case ${TARGETPLATFORM} in \
-"linux/arm64") MAMBA_ARCH=aarch64 ;; \
-*) MAMBA_ARCH=x86_64 ;; \
-esac && \
-curl -fsSL -v -o ~/mambaforge.sh -O "https://github.com/conda-forge/miniforge/releases/download/${MAMBA_VERSION}/Mambaforge-${MAMBA_VERSION}-Linux-${MAMBA_ARCH}.sh"
-RUN chmod +x ~/mambaforge.sh && \
-bash ~/mambaforge.sh -b -p /opt/conda && \
-rm ~/mambaforge.sh
-
-# Install pytorch
-# On arm64 we exit with an error code
-RUN case ${TARGETPLATFORM} in \
-"linux/arm64") exit 1 ;; \
-*) /opt/conda/bin/conda update -y conda && \
-/opt/conda/bin/conda install -c "${INSTALL_CHANNEL}" -c "${CUDA_CHANNEL}" -y "python=${PYTHON_VERSION}" pytorch==$PYTORCH_VERSION "pytorch-cuda=$(echo $CUDA_VERSION | cut -d'.' -f 1-2)" ;; \
-esac && \
-/opt/conda/bin/conda clean -ya
-
-# CUDA kernels builder image
-FROM pytorch-install as kernel-builder
-
-RUN apt-get update && DEBIAN_FRONTEND=noninteractive apt-get install -y --no-install-recommends \
-ninja-build \
-&& rm -rf /var/lib/apt/lists/*
-
-RUN /opt/conda/bin/conda install -c "nvidia/label/cuda-11.8.0" cuda==11.8 && \
-/opt/conda/bin/conda clean -ya
-
-# Build Flash Attention CUDA kernels
-FROM kernel-builder as flash-att-builder
-
-WORKDIR /usr/src
-
-COPY server/Makefile-flash-att Makefile
-
-# Build specific version of flash attention
-RUN make build-flash-attention
-
-# Build Flash Attention v2 CUDA kernels
-FROM kernel-builder as flash-att-v2-builder
-
-WORKDIR /usr/src
-
-COPY server/Makefile-flash-att-v2 Makefile
-
-# Build specific version of flash attention v2
-RUN make build-flash-attention-v2
-
-# Build Transformers exllama kernels
-FROM kernel-builder as exllama-kernels-builder
-WORKDIR /usr/src
-COPY server/exllama_kernels/ .
-# Build specific version of transformers
-RUN TORCH_CUDA_ARCH_LIST="8.0;8.6+PTX" python setup.py build
-
-# Build Transformers awq kernels
-FROM kernel-builder as awq-kernels-builder
-WORKDIR /usr/src
-COPY server/Makefile-awq Makefile
-# Build specific version of transformers
-RUN TORCH_CUDA_ARCH_LIST="8.0;8.6+PTX" make build-awq
-
-# Build Transformers CUDA kernels
-FROM kernel-builder as custom-kernels-builder
-WORKDIR /usr/src
-COPY server/custom_kernels/ .
-# Build specific version of transformers
-RUN python setup.py build
-
-# Build vllm CUDA kernels
-FROM kernel-builder as vllm-builder
-
-WORKDIR /usr/src
-
-COPY server/Makefile-vllm Makefile
-
-# Build specific version of vllm
-RUN make build-vllm
-
 # Text Generation Inference base image
-FROM nvidia/cuda:11.8.0-base-ubuntu20.04 as base
+FROM vault.habana.ai/gaudi-docker/1.13.0/ubuntu22.04/habanalabs/pytorch-installer-2.1.0:latest as base
 
-# Conda env
-ENV PATH=/opt/conda/bin:$PATH \
-CONDA_PREFIX=/opt/conda
-
 # Text Generation Inference base env
 ENV HUGGINGFACE_HUB_CACHE=/data \
 HF_HUB_ENABLE_HF_TRANSFER=1 \
 PORT=80
 
+# libssl.so.1.1 is not installed on Ubuntu 22.04 by default, install it
+RUN wget http://nz2.archive.ubuntu.com/ubuntu/pool/main/o/openssl/libssl1.1_1.1.1f-1ubuntu2_amd64.deb && \
+dpkg -i ./libssl1.1_1.1.1f-1ubuntu2_amd64.deb
+
 WORKDIR /usr/src
 
 RUN apt-get update && DEBIAN_FRONTEND=noninteractive apt-get install -y --no-install-recommends \
@@ -161,30 +51,6 @@ RUN apt-get update && DEBIAN_FRONTEND=noninteractive apt-get install -y --no-ins
 curl \
 && rm -rf /var/lib/apt/lists/*
 
-# Copy conda with PyTorch installed
-COPY --from=pytorch-install /opt/conda /opt/conda
-
-# Copy build artifacts from flash attention builder
-COPY --from=flash-att-builder /usr/src/flash-attention/build/lib.linux-x86_64-cpython-39 /opt/conda/lib/python3.9/site-packages
-COPY --from=flash-att-builder /usr/src/flash-attention/csrc/layer_norm/build/lib.linux-x86_64-cpython-39 /opt/conda/lib/python3.9/site-packages
-COPY --from=flash-att-builder /usr/src/flash-attention/csrc/rotary/build/lib.linux-x86_64-cpython-39 /opt/conda/lib/python3.9/site-packages
-
-# Copy build artifacts from flash attention v2 builder
-COPY --from=flash-att-v2-builder /usr/src/flash-attention-v2/build/lib.linux-x86_64-cpython-39 /opt/conda/lib/python3.9/site-packages
-
-# Copy build artifacts from custom kernels builder
-COPY --from=custom-kernels-builder /usr/src/build/lib.linux-x86_64-cpython-39 /opt/conda/lib/python3.9/site-packages
-# Copy build artifacts from exllama kernels builder
-COPY --from=exllama-kernels-builder /usr/src/build/lib.linux-x86_64-cpython-39 /opt/conda/lib/python3.9/site-packages
-# Copy build artifacts from awq kernels builder
-COPY --from=awq-kernels-builder /usr/src/llm-awq/awq/kernels/build/lib.linux-x86_64-cpython-39 /opt/conda/lib/python3.9/site-packages
-
-# Copy builds artifacts from vllm builder
-COPY --from=vllm-builder /usr/src/vllm/build/lib.linux-x86_64-cpython-39 /opt/conda/lib/python3.9/site-packages
-
-# Install flash-attention dependencies
-RUN pip install einops --no-cache-dir
-
 # Install server
 COPY proto proto
 COPY server server
@@ -192,7 +58,7 @@ COPY server/Makefile server/Makefile
 RUN cd server && \
 make gen-server && \
 pip install -r requirements.txt && \
-pip install ".[bnb, accelerate, quantize]" --no-cache-dir
+pip install . --no-cache-dir
 
 # Install benchmarker
 COPY --from=builder /usr/src/target/release/text-generation-benchmark /usr/local/bin/text-generation-benchmark
@@ -201,19 +67,6 @@ COPY --from=builder /usr/src/target/release/text-generation-router /usr/local/bi
 # Install launcher
 COPY --from=builder /usr/src/target/release/text-generation-launcher /usr/local/bin/text-generation-launcher
 
-RUN apt-get update && DEBIAN_FRONTEND=noninteractive apt-get install -y --no-install-recommends \
-build-essential \
-g++ \
-&& rm -rf /var/lib/apt/lists/*
-
-# AWS Sagemaker compatbile image
-FROM base as sagemaker
-
-COPY sagemaker-entrypoint.sh entrypoint.sh
-RUN chmod +x entrypoint.sh
-
-ENTRYPOINT ["./entrypoint.sh"]
-
 # Final image
 FROM base
 

Makefile (9 changed lines)

@@ -1,9 +1,6 @@
 install-server:
 cd server && make install
 
-install-custom-kernels:
-if [ "$$BUILD_EXTENSIONS" = "True" ]; then cd server/custom_kernels && python setup.py install; else echo "Custom kernels are disabled, you need to set the BUILD_EXTENSIONS environment variable to 'True' in order to build them. (Please read the docs, kernels might not work on all hardware)"; fi
-
 install-integration-tests:
 cd integration-tests && pip install -r requirements.txt
 cd clients/python && pip install .
@@ -45,8 +42,8 @@ python-tests: python-server-tests python-client-tests
 run-falcon-7b-instruct:
 text-generation-launcher --model-id tiiuae/falcon-7b-instruct --port 8080
 
-run-falcon-7b-instruct-quantize:
-text-generation-launcher --model-id tiiuae/falcon-7b-instruct --quantize bitsandbytes --port 8080
-
 clean:
 rm -rf target aml
+
+debug_image_build:
+docker build --no-cache --progress=plain -t debug_tgi .

359

README.md

359

README.md

@ -1,288 +1,83 @@

|

|||||||

|

<!---

|

||||||

|

Copyright 2023 The HuggingFace Team. All rights reserved.

|

||||||

|

|

||||||

|

Licensed under the Apache License, Version 2.0 (the "License");

|

||||||

|

you may not use this file except in compliance with the License.

|

||||||

|

You may obtain a copy of the License at

|

||||||

|

|

||||||

|

http://www.apache.org/licenses/LICENSE-2.0

|

||||||

|

|

||||||

|

Unless required by applicable law or agreed to in writing, software

|

||||||

|

distributed under the License is distributed on an "AS IS" BASIS,

|

||||||

|

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||||

|

See the License for the specific language governing permissions and

|

||||||

|

limitations under the License.

|

||||||

|

-->

|

||||||

|

|

||||||

|

# Text Generation Inference on Habana Gaudi

|

||||||

|

|

||||||

|

To use [🤗 text-generation-inference](https://github.com/huggingface/text-generation-inference) on Habana Gaudi/Gaudi2, follow these steps:

|

||||||

|

|

||||||

|

1. Build the Docker image located in this folder with:

|

||||||

|

```bash

|

||||||

|

docker build -t tgi_gaudi .

|

||||||

|

```

|

||||||

|

2. Launch a local server instance on 1 Gaudi card:

|

||||||

|

```bash

|

||||||

|

model=meta-llama/Llama-2-7b-hf

|

||||||

|

volume=$PWD/data # share a volume with the Docker container to avoid downloading weights every run

|

||||||

|

|

||||||

|

docker run -p 8080:80 -v $volume:/data --runtime=habana -e HABANA_VISIBLE_DEVICES=all -e OMPI_MCA_btl_vader_single_copy_mechanism=none --cap-add=sys_nice --ipc=host tgi_gaudi --model-id $model

|

||||||

|

```

|

||||||

|

3. Launch a local server instance on 8 Gaudi cards:

|

||||||

|

```bash

|

||||||

|

model=meta-llama/Llama-2-70b-hf

|

||||||

|

volume=$PWD/data # share a volume with the Docker container to avoid downloading weights every run

|

||||||

|

|

||||||

|

docker run -p 8080:80 -v $volume:/data --runtime=habana -e PT_HPU_ENABLE_LAZY_COLLECTIVES=true -e HABANA_VISIBLE_DEVICES=all -e OMPI_MCA_btl_vader_single_copy_mechanism=none --cap-add=sys_nice --ipc=host tgi_gaudi --model-id $model --sharded true --num-shard 8

|

||||||

|

```

|

||||||

|

4. You can then send a request:

|

||||||

|

```bash

|

||||||

|

curl 127.0.0.1:8080/generate \

|

||||||

|

-X POST \

|

||||||

|

-d '{"inputs":"What is Deep Learning?","parameters":{"max_new_tokens":17, "do_sample": true}}' \

|

||||||

|

-H 'Content-Type: application/json'

|

||||||

|

```

|

||||||

|

> The first call will be slower as the model is compiled.

|

||||||

|

5. To run benchmark test, please refer [TGI's benchmark tool](https://github.com/huggingface/text-generation-inference/tree/main/benchmark).

|

||||||

|

|

||||||

|

To run it on the same machine, you can do the following:

|

||||||

|

* `docker exec -it <docker name> bash` , pick the docker started from step 3 or 4 using docker ps

|

||||||

|

* `text-generation-benchmark -t <model-id>` , pass the model-id from docker run command

|

||||||

|

* after the completion of tests, hit ctrl+c to see the performance data summary.

|

||||||

|

|

||||||

|

> For gated models such as [StarCoder](https://huggingface.co/bigcode/starcoder), you will have to pass `-e HUGGING_FACE_HUB_TOKEN=<token>` to the `docker run` command above with a valid Hugging Face Hub read token.

|

||||||

|

|

||||||

|

For more information and documentation about Text Generation Inference, checkout [the README](https://github.com/huggingface/text-generation-inference#text-generation-inference) of the original repo.

|

||||||

|

|

||||||

|

Not all features of TGI are currently supported as this is still a work in progress.

|

||||||

|

|

||||||

|

New changes are added for the current release:

|

||||||

|

- Sharded feature with support for DeepSpeed-inference auto tensor parallism. Also use HPU graph for performance improvement.

|

||||||

|

- Torch profile.

|

||||||

|

|

||||||

|

|

||||||

|

Enviroment Variables Added:

|

||||||

|

|

||||||

<div align="center">

|

<div align="center">

|

||||||

|

|

||||||

|

| Name | Value(s) | Default | Description | Usage |

|

||||||

|

|------------------ |:---------------|:------------|:-------------------- |:---------------------------------

|

||||||

# Text Generation Inference

|

| MAX_TOTAL_TOKENS | integer | 0 | Control the padding of input | add -e in docker run, such |

|

||||||

|

| ENABLE_HPU_GRAPH | true/false | true | Enable hpu graph or not | add -e in docker run command |

|

||||||

<a href="https://github.com/huggingface/text-generation-inference">

|

| PROF_WARMUPSTEP | integer | 0 | Enable/disable profile, control profile warmup step, 0 means disable profile | add -e in docker run command |

|

||||||

<img alt="GitHub Repo stars" src="https://img.shields.io/github/stars/huggingface/text-generation-inference?style=social">

|

| PROF_STEP | interger | 5 | Control profile step | add -e in docker run command |

|

||||||

</a>

|

| PROF_PATH | string | /root/text-generation-inference | Define profile folder | add -e in docker run command |

|

||||||

<a href="https://huggingface.github.io/text-generation-inference">

|

| LIMIT_HPU_GRAPH | True/False | False | Skip HPU graph usage for prefill to save memory | add -e in docker run command |

|

||||||

<img alt="Swagger API documentation" src="https://img.shields.io/badge/API-Swagger-informational">

|

|

||||||

</a>

|

|

||||||

|

|

||||||

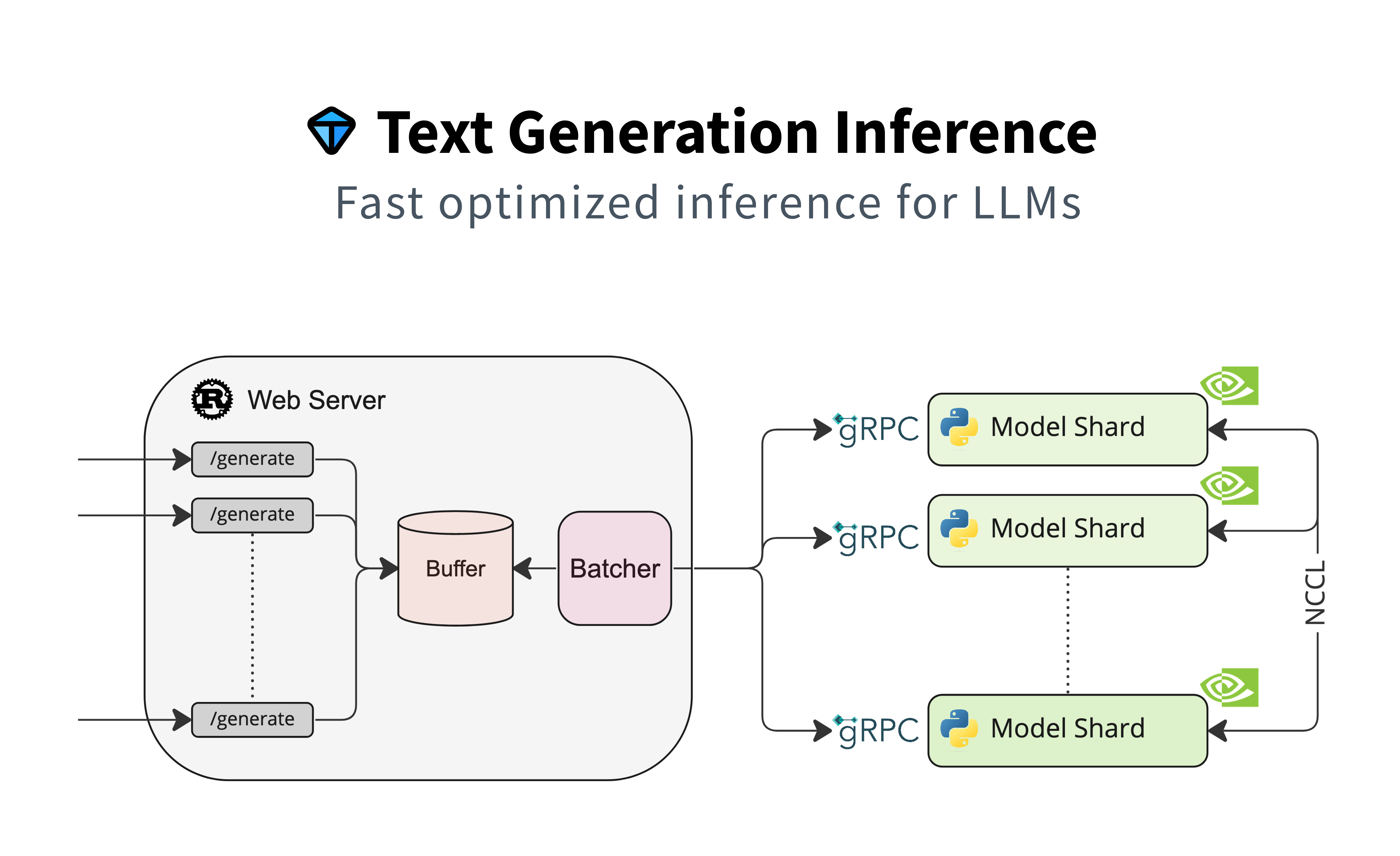

A Rust, Python and gRPC server for text generation inference. Used in production at [HuggingFace](https://huggingface.co)

|

|

||||||

to power Hugging Chat, the Inference API and Inference Endpoint.

|

|

||||||

|

|

||||||

</div>

|

</div>

|

||||||

|

|

||||||

## Table of contents

|

|

||||||

|

|

||||||

- [Features](#features)

|

|

||||||

- [Optimized Architectures](#optimized-architectures)

|

|

||||||

- [Get Started](#get-started)

|

|

||||||

- [Docker](#docker)

|

|

||||||

- [API Documentation](#api-documentation)

|

|

||||||

- [Using a private or gated model](#using-a-private-or-gated-model)

|

|

||||||

- [A note on Shared Memory](#a-note-on-shared-memory-shm)

|

|

||||||

- [Distributed Tracing](#distributed-tracing)

|

|

||||||

- [Local Install](#local-install)

|

|

||||||

- [CUDA Kernels](#cuda-kernels)

|

|

||||||

- [Run Falcon](#run-falcon)

|

|

||||||

- [Run](#run)

|

|

||||||

- [Quantization](#quantization)

|

|

||||||

- [Develop](#develop)

|

|

||||||

- [Testing](#testing)

|

|

||||||

- [Other supported hardware](#other-supported-hardware)

|

|

||||||

|

|

||||||

## Features

|

|

||||||

|

|

||||||

- Serve the most popular Large Language Models with a simple launcher

|

|

||||||

- Tensor Parallelism for faster inference on multiple GPUs

|

|

||||||

- Token streaming using Server-Sent Events (SSE)

|

|

||||||

- [Continuous batching of incoming requests](https://github.com/huggingface/text-generation-inference/tree/main/router) for increased total throughput

|

|

||||||

- Optimized transformers code for inference using [flash-attention](https://github.com/HazyResearch/flash-attention) and [Paged Attention](https://github.com/vllm-project/vllm) on the most popular architectures

|

|

||||||

- Quantization with [bitsandbytes](https://github.com/TimDettmers/bitsandbytes) and [GPT-Q](https://arxiv.org/abs/2210.17323)

|

|

||||||

- [Safetensors](https://github.com/huggingface/safetensors) weight loading

|

|

||||||

- Watermarking with [A Watermark for Large Language Models](https://arxiv.org/abs/2301.10226)

|

|

||||||

- Logits warper (temperature scaling, top-p, top-k, repetition penalty, more details see [transformers.LogitsProcessor](https://huggingface.co/docs/transformers/internal/generation_utils#transformers.LogitsProcessor))

|

|

||||||

- Stop sequences

|

|

||||||

- Log probabilities

|

|

||||||

- Production ready (distributed tracing with Open Telemetry, Prometheus metrics)

|

|

||||||

- Custom Prompt Generation: Easily generate text by providing custom prompts to guide the model's output.

|

|

||||||

- Fine-tuning Support: Utilize fine-tuned models for specific tasks to achieve higher accuracy and performance.

|

|

||||||

|

|

||||||

|

|

||||||

## Optimized architectures

|

|

||||||

|

|

||||||

- [BLOOM](https://huggingface.co/bigscience/bloom)

|

|

||||||

- [FLAN-T5](https://huggingface.co/google/flan-t5-xxl)

|

|

||||||

- [Galactica](https://huggingface.co/facebook/galactica-120b)

|

|

||||||

- [GPT-Neox](https://huggingface.co/EleutherAI/gpt-neox-20b)

|

|

||||||

- [Llama](https://github.com/facebookresearch/llama)

|

|

||||||

- [OPT](https://huggingface.co/facebook/opt-66b)

|

|

||||||

- [SantaCoder](https://huggingface.co/bigcode/santacoder)

|

|

||||||

- [Starcoder](https://huggingface.co/bigcode/starcoder)

|

|

||||||

- [Falcon 7B](https://huggingface.co/tiiuae/falcon-7b)

|

|

||||||

- [Falcon 40B](https://huggingface.co/tiiuae/falcon-40b)

|

|

||||||

- [MPT](https://huggingface.co/mosaicml/mpt-30b)

|

|

||||||

- [Llama V2](https://huggingface.co/meta-llama)

|

|

||||||

- [Code Llama](https://huggingface.co/codellama)

|

|

||||||

- [Mistral](https://huggingface.co/mistralai/Mistral-7B-Instruct-v0.1)

|

|

||||||

|

|

||||||

Other architectures are supported on a best effort basis using:

|

|

||||||

|

|

||||||

`AutoModelForCausalLM.from_pretrained(<model>, device_map="auto")`

|

|

||||||

|

|

||||||

or

|

|

||||||

|

|

||||||

`AutoModelForSeq2SeqLM.from_pretrained(<model>, device_map="auto")`

|

|

||||||

|

|

||||||

## Get started

|

|

||||||

|

|

||||||

### Docker

|

|

||||||

|

|

||||||

The easiest way of getting started is using the official Docker container:

|

|

||||||

|

|

||||||

```shell

|

|

||||||

model=tiiuae/falcon-7b-instruct

|

|

||||||

volume=$PWD/data # share a volume with the Docker container to avoid downloading weights every run

|

|

||||||

|

|

||||||

docker run --gpus all --shm-size 1g -p 8080:80 -v $volume:/data ghcr.io/huggingface/text-generation-inference:1.1.0 --model-id $model

|

|

||||||

```

|

|

||||||

**Note:** To use GPUs, you need to install the [NVIDIA Container Toolkit](https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/install-guide.html). We also recommend using NVIDIA drivers with CUDA version 11.8 or higher. For running the Docker container on a machine with no GPUs or CUDA support, it is enough to remove the `--gpus all` flag and add `--disable-custom-kernels`, please note CPU is not the intended platform for this project, so performance might be subpar.

|

|

||||||

|

|

||||||

To see all options to serve your models (in the [code](https://github.com/huggingface/text-generation-inference/blob/main/launcher/src/main.rs) or in the cli):

|

|

||||||

```

|

|

||||||

text-generation-launcher --help

|

|

||||||

```

|

|

||||||

|

|

||||||

You can then query the model using either the `/generate` or `/generate_stream` routes:

|

|

||||||

|

|

||||||

```shell

|

|

||||||

curl 127.0.0.1:8080/generate \

|

|

||||||

-X POST \

|

|

||||||

-d '{"inputs":"What is Deep Learning?","parameters":{"max_new_tokens":20}}' \

|

|

||||||

-H 'Content-Type: application/json'

|

|

||||||

```

|

|

||||||

|

|

||||||

```shell

|

|

||||||

curl 127.0.0.1:8080/generate_stream \

|

|

||||||

-X POST \

|

|

||||||

-d '{"inputs":"What is Deep Learning?","parameters":{"max_new_tokens":20}}' \

|

|

||||||

-H 'Content-Type: application/json'

|

|

||||||

```

|

|

||||||

|

|

||||||

or from Python:

|

|

||||||

|

|

||||||

```shell

|

|

||||||

pip install text-generation

|

|

||||||

```

|

|

||||||

|

|

||||||

```python

|

|

||||||

from text_generation import Client

|

|

||||||

|

|

||||||

client = Client("http://127.0.0.1:8080")

|

|

||||||

print(client.generate("What is Deep Learning?", max_new_tokens=20).generated_text)

|

|

||||||

|

|

||||||

text = ""

|

|

||||||

for response in client.generate_stream("What is Deep Learning?", max_new_tokens=20):

|

|

||||||

if not response.token.special:

|

|

||||||

text += response.token.text

|

|

||||||

print(text)

|

|

||||||

```

|

|

||||||

|

|

||||||

### API documentation

|

|

||||||

|

|

||||||

You can consult the OpenAPI documentation of the `text-generation-inference` REST API using the `/docs` route.

|

|

||||||

The Swagger UI is also available at: [https://huggingface.github.io/text-generation-inference](https://huggingface.github.io/text-generation-inference).

|

|

||||||

|

|

||||||

### Using a private or gated model

|

|

||||||

|

|

||||||

You have the option to utilize the `HUGGING_FACE_HUB_TOKEN` environment variable for configuring the token employed by

|

|

||||||

`text-generation-inference`. This allows you to gain access to protected resources.

|

|

||||||

|

|

||||||

For example, if you want to serve the gated Llama V2 model variants:

|

|

||||||

|

|

||||||

1. Go to https://huggingface.co/settings/tokens

|

|

||||||

2. Copy your cli READ token

|

|

||||||

3. Export `HUGGING_FACE_HUB_TOKEN=<your cli READ token>`

|

|

||||||

|

|

||||||

or with Docker:

|

|

||||||

|

|

||||||

```shell

|

|

||||||

model=meta-llama/Llama-2-7b-chat-hf

|

|

||||||

volume=$PWD/data # share a volume with the Docker container to avoid downloading weights every run

|

|

||||||

token=<your cli READ token>

|

|

||||||

|

|

||||||

docker run --gpus all --shm-size 1g -e HUGGING_FACE_HUB_TOKEN=$token -p 8080:80 -v $volume:/data ghcr.io/huggingface/text-generation-inference:1.1.0 --model-id $model

|

|

||||||

```

|

|

||||||

|

|

||||||

### A note on Shared Memory (shm)

|

|

||||||

|

|

||||||

[`NCCL`](https://docs.nvidia.com/deeplearning/nccl/user-guide/docs/index.html) is a communication framework used by

|

|

||||||

`PyTorch` to do distributed training/inference. `text-generation-inference` make

|

|

||||||

use of `NCCL` to enable Tensor Parallelism to dramatically speed up inference for large language models.

|

|

||||||

|

|

||||||

In order to share data between the different devices of a `NCCL` group, `NCCL` might fall back to using the host memory if

|

|

||||||

peer-to-peer using NVLink or PCI is not possible.

|

|

||||||

|

|

||||||

To allow the container to use 1G of Shared Memory and support SHM sharing, we add `--shm-size 1g` on the above command.

|

|

||||||

|

|

||||||

If you are running `text-generation-inference` inside `Kubernetes`. You can also add Shared Memory to the container by

|

|

||||||

creating a volume with:

|

|

||||||

|

|

||||||

```yaml

|

|

||||||

- name: shm

|

|

||||||

emptyDir:

|

|

||||||

medium: Memory

|

|

||||||

sizeLimit: 1Gi

|

|

||||||

```

|

|

||||||

|

|

||||||

and mounting it to `/dev/shm`.

|

|

||||||

|

|

||||||

Finally, you can also disable SHM sharing by using the `NCCL_SHM_DISABLE=1` environment variable. However, note that

|

|

||||||

this will impact performance.

|

|

||||||

|

|

||||||

### Distributed Tracing

|

|

||||||

|

|

||||||

`text-generation-inference` is instrumented with distributed tracing using OpenTelemetry. You can use this feature

|

|

||||||

by setting the address to an OTLP collector with the `--otlp-endpoint` argument.

|

|

||||||

|

|

||||||

### Local install

|

|

||||||

|

|

||||||

You can also opt to install `text-generation-inference` locally.

|

|

||||||

|

|

||||||

First [install Rust](https://rustup.rs/) and create a Python virtual environment with at least

|

|

||||||

Python 3.9, e.g. using `conda`:

|

|

||||||

|

|

||||||

```shell

|

|

||||||

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh

|

|

||||||

|

|

||||||

conda create -n text-generation-inference python=3.9

|

|

||||||

conda activate text-generation-inference

|

|

||||||

```

|

|

||||||

|

|

||||||

You may also need to install Protoc.

|

|

||||||

|

|

||||||

On Linux:

|

|

||||||

|

|

||||||

```shell

|

|

||||||

PROTOC_ZIP=protoc-21.12-linux-x86_64.zip

|

|

||||||

curl -OL https://github.com/protocolbuffers/protobuf/releases/download/v21.12/$PROTOC_ZIP

|

|

||||||

sudo unzip -o $PROTOC_ZIP -d /usr/local bin/protoc

|

|

||||||

sudo unzip -o $PROTOC_ZIP -d /usr/local 'include/*'

|

|

||||||

rm -f $PROTOC_ZIP

|

|

||||||

```

|

|

||||||

|

|

||||||

On MacOS, using Homebrew:

|

|

||||||

|

|

||||||

```shell

|

|

||||||

brew install protobuf

|

|

||||||

```

|

|

||||||

|

|

||||||

Then run:

|

|

||||||

|

|

||||||

```shell

|

|

||||||

BUILD_EXTENSIONS=True make install # Install repository and HF/transformer fork with CUDA kernels

|

|

||||||

make run-falcon-7b-instruct

|

|

||||||

```

|

|

||||||

|

|

||||||

**Note:** on some machines, you may also need the OpenSSL libraries and gcc. On Linux machines, run:

|

|

||||||

|

|

||||||

```shell

|

|

||||||

sudo apt-get install libssl-dev gcc -y

|

|

||||||

```

|

|

||||||

|

|

||||||

### CUDA Kernels

|

|

||||||

|

|

||||||

The custom CUDA kernels are only tested on NVIDIA A100s. If you have any installation or runtime issues, you can remove

|

|

||||||

the kernels by using the `DISABLE_CUSTOM_KERNELS=True` environment variable.

|

|

||||||

|

|

||||||

Be aware that the official Docker image has them enabled by default.

|

|

||||||

|

|

||||||

## Run Falcon

|

|

||||||

|

|

||||||

### Run

|

|

||||||

|

|

||||||

```shell

|

|

||||||

make run-falcon-7b-instruct

|

|

||||||

```

|

|

||||||

|

|

||||||

### Quantization

|

|

||||||

|

|

||||||

You can also quantize the weights with bitsandbytes to reduce the VRAM requirement:

|

|

||||||

|

|

||||||

```shell

|

|

||||||

make run-falcon-7b-instruct-quantize

|

|

||||||

```

|

|

||||||

|

|

||||||

4bit quantization is available using the [NF4 and FP4 data types from bitsandbytes](https://arxiv.org/pdf/2305.14314.pdf). It can be enabled by providing `--quantize bitsandbytes-nf4` or `--quantize bitsandbytes-fp4` as a command line argument to `text-generation-launcher`.

|

|

||||||

|

|

||||||

## Develop

|

|

||||||

|

|

||||||

```shell

|

|

||||||

make server-dev

|

|

||||||

make router-dev

|

|

||||||

```

|

|

||||||

|

|

||||||

## Testing

|

|

||||||

|

|

||||||

```shell

|

|

||||||

# python

|

|

||||||

make python-server-tests

|

|

||||||

make python-client-tests

|

|

||||||

# or both server and client tests

|

|

||||||

make python-tests

|

|

||||||

# rust cargo tests

|

|

||||||

make rust-tests

|

|

||||||

# integration tests

|

|

||||||

make integration-tests

|

|

||||||

```

|

|

||||||

|

|

||||||

|

|

||||||

## Other supported hardware

|

|

||||||

|

|

||||||

TGI is also supported on the following AI hardware accelerators:

|

|

||||||

- *Habana first-gen Gaudi and Gaudi2:* checkout [here](https://github.com/huggingface/optimum-habana/tree/main/text-generation-inference) how to serve models with TGI on Gaudi and Gaudi2 with [Optimum Habana](https://huggingface.co/docs/optimum/habana/index)

|

|

||||||

|

|

||||||

|

> The license to use TGI on Habana Gaudi is the one of TGI: https://github.com/huggingface/text-generation-inference/blob/main/LICENSE

|

||||||

|

>

|

||||||

|

> Please reach out to api-enterprise@huggingface.co if you have any question.

|

||||||

|

|||||||

@@ -459,7 +459,9 @@ fn shard_manager(
     let mut envs: Vec<(OsString, OsString)> = env::vars_os().collect();
 
     // Torch Distributed Env vars
-    envs.push(("RANK".into(), rank.to_string().into()));
+    if world_size == 1 {
+        envs.push(("RANK".into(), rank.to_string().into()));
+    }
     envs.push(("WORLD_SIZE".into(), world_size.to_string().into()));
     envs.push(("MASTER_ADDR".into(), master_addr.into()));
     envs.push(("MASTER_PORT".into(), master_port.to_string().into()));
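After this hunk the launcher exports `RANK` only when `world_size == 1`, while `WORLD_SIZE`, `MASTER_ADDR` and `MASTER_PORT` are still always set; in the same commit, `serve` in `cli.py` stops asserting that `RANK` is present when `sharded` is true. A plausible reading, not stated in the diff, is that in the sharded case per-process ranks are left to the `deepspeed` launcher that `cli.py` now spawns. The Python sketch below mirrors only the env-var rule; `build_shard_env` is a hypothetical name, not part of TGI.

```python
from typing import Dict, Optional


def build_shard_env(
    rank: int,
    world_size: int,
    master_addr: str,
    master_port: int,
    base_env: Optional[Dict[str, str]] = None,
) -> Dict[str, str]:
    """Hypothetical mirror of the env-var logic in the launcher's shard_manager."""
    # Start from a copy of the inherited environment (the launcher copies env::vars_os()).
    envs = dict(base_env or {})
    # Torch Distributed env vars: RANK is only exported for single-shard runs.
    if world_size == 1:
        envs["RANK"] = str(rank)
    envs["WORLD_SIZE"] = str(world_size)
    envs["MASTER_ADDR"] = master_addr
    envs["MASTER_PORT"] = str(master_port)
    return envs
```

For example, `build_shard_env(0, 8, "localhost", 29500)` yields `WORLD_SIZE`, `MASTER_ADDR` and `MASTER_PORT` but no `RANK`.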

@@ -870,7 +872,7 @@ fn spawn_shards(
     running: Arc<AtomicBool>,
 ) -> Result<(), LauncherError> {
     // Start shard processes
-    for rank in 0..num_shard {
+    for rank in 0..1 {
         let model_id = args.model_id.clone();
         let revision = args.revision.clone();
         let uds_path = args.shard_uds_path.clone();

@@ -921,12 +923,12 @@ fn spawn_shards(
     drop(shutdown_sender);
 
     // Wait for shard to start
     let mut shard_ready = 0;
     while running.load(Ordering::SeqCst) {
         match status_receiver.try_recv() {
             Ok(ShardStatus::Ready) => {
                 shard_ready += 1;
-                if shard_ready == num_shard {
+                if shard_ready == 1 {
                     break;
                 }
             }
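Together, `for rank in 0..1` and `if shard_ready == 1` mean the launcher now starts one shard process and waits for a single `Ready` status, regardless of `num_shard`. The readiness loop can be sketched in Python, with a `queue.Queue` standing in for the Rust `status_receiver` channel and a `threading.Event` for the `running` flag; `wait_for_shards`, the `"ready"` string and the polling interval are assumptions made for illustration, and the Rust error branches are left out.

```python
import queue
import threading
import time


def wait_for_shards(
    status_queue: "queue.Queue[str]",
    expected: int,
    running: threading.Event,
    poll_interval: float = 0.01,
) -> bool:
    """Count "ready" messages until `expected` arrive; give up if `running` is cleared."""
    shard_ready = 0
    while running.is_set():
        try:
            # Non-blocking receive, like `status_receiver.try_recv()`.
            status = status_queue.get_nowait()
        except queue.Empty:
            time.sleep(poll_interval)
            continue
        if status == "ready":
            shard_ready += 1
            if shard_ready == expected:
                return True
    return False
```

With the change in this hunk, the launcher's equivalent of `expected` is fixed at 1.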

@@ -16,11 +16,7 @@ gen-server:
 	find text_generation_server/pb/ -type f -name "*.py" -print0 -exec sed -i -e 's/^\(import.*pb2\)/from . \1/g' {} \;
 	touch text_generation_server/pb/__init__.py
 
-install-torch:
-	# Install specific version of torch
-	pip install torch --extra-index-url https://download.pytorch.org/whl/cu118 --no-cache-dir
-
-install: gen-server install-torch
+install: gen-server
 	pip install pip --upgrade
 	pip install -r requirements.txt
 	pip install -e ".[bnb, accelerate]"
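The `gen-server` recipe kept as context in this hunk rewrites every generated module so that top-level `import ..._pb2` lines become package-relative `from . import ..._pb2` imports. The same substitution expressed with Python's `re` module, as a sketch (`make_pb2_imports_relative` is a hypothetical helper; the Makefile itself uses `sed -i`):

```python
import re

# Same pattern as the sed expression s/^\(import.*pb2\)/from . \1/g, applied per line.
_PB2_IMPORT = re.compile(r"^(import.*pb2)", re.MULTILINE)


def make_pb2_imports_relative(source: str) -> str:
    """Prefix top-level `import ..._pb2` statements with `from . `."""
    return _PB2_IMPORT.sub(r"from . \1", source)
```

Lines that already start with `from` are left alone, so running the rewrite twice is harmless.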

@@ -28,5 +24,12 @@ install: gen-server install-torch
 run-dev:
 	SAFETENSORS_FAST_GPU=1 python -m torch.distributed.run --nproc_per_node=2 text_generation_server/cli.py serve bigscience/bloom-560m --sharded
 
+install-poetry:
+	curl -sSL https://install.python-poetry.org | python3 -
+
+update-lock:
+	rm poetry.lock
+	poetry lock --no-update
+
 export-requirements:
-	poetry export -o requirements.txt -E bnb -E quantize --without-hashes
+	poetry export -f requirements.txt --without-hashes --output requirements.txt

@@ -9,52 +9,32 @@ text-generation-server = 'text_generation_server.cli:app'
 
 [tool.poetry.dependencies]
 python = ">=3.9,<3.13"
-protobuf = "^4.21.7"
+protobuf = "^3.20.3"
 grpcio = "^1.51.1"
-grpcio-status = "^1.51.1"
-grpcio-reflection = "^1.51.1"
+grpcio-status = "*"
+grpcio-reflection = "*"
 grpc-interceptor = "^0.15.0"
 typer = "^0.6.1"
-accelerate = { version = "^0.20.0", optional = true }
-bitsandbytes = { version = "^0.41.1", optional = true }
-safetensors = "^0.3.2"
+safetensors = "0.3.2"
 loguru = "^0.6.0"
 opentelemetry-api = "^1.15.0"
 opentelemetry-exporter-otlp = "^1.15.0"
 opentelemetry-instrumentation-grpc = "^0.36b0"
 hf-transfer = "^0.1.2"
 sentencepiece = "^0.1.97"
-tokenizers = "^0.13.3"
+tokenizers = "^0.14.1"
 huggingface-hub = "^0.16.4"
-transformers = "^4.32.1"
-einops = "^0.6.1"
-texttable = { version = "^1.6.7", optional = true }
-datasets = { version = "^2.14.0", optional = true }
 peft = "^0.4.0"
-torch = { version = "^2.0.1" }
-scipy = "^1.11.1"
-pillow = "^10.0.0"
+deepspeed = { git = "https://github.com/HabanaAI/DeepSpeed.git", branch = "1.13.0" }
+optimum-habana = { git = "https://github.com/huggingface/optimum-habana.git", branch = "main" }
 
-[tool.poetry.extras]
-accelerate = ["accelerate"]
-bnb = ["bitsandbytes"]
-quantize = ["texttable", "datasets", "accelerate"]
-
 [tool.poetry.group.dev.dependencies]
-grpcio-tools = "^1.51.1"
+grpcio-tools = "*"
 pytest = "^7.3.0"
 
-
-[[tool.poetry.source]]
-name = "pytorch-gpu-src"
-url = "https://download.pytorch.org/whl/cu118"
-priority = "explicit"
-
 [tool.pytest.ini_options]
 markers = ["private: marks tests as requiring an admin hf token (deselect with '-m \"not private\"')"]
 
 [build-system]
-requires = [
-    "poetry-core>=1.0.0",
-]
+requires = ["poetry-core>=1.0.0"]
 build-backend = "poetry.core.masonry.api"
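Several constraints in this hunk move between Poetry caret requirements (`^3.20.3`), wildcards (`"*"`) and exact pins (`"0.3.2"`). Under Poetry's caret rule, a constraint allows any version that keeps the left-most non-zero component unchanged, so the `protobuf` change from `^4.21.7` to `^3.20.3` moves from `>=4.21.7,<5.0.0` to `>=3.20.3,<4.0.0`, two ranges that do not overlap. A sketch of that rule for plain numeric versions with one to three components (`caret_bounds` is a hypothetical helper, not a Poetry API; pre-release and local version segments are not handled):

```python
from typing import Tuple


def caret_bounds(spec: str) -> Tuple[str, str]:
    """Return (lower, upper) bounds for a Poetry-style caret constraint like "^0.15.0".

    Sketch only: handles plain numeric versions with 1 to 3 components.
    """
    parts = [int(p) for p in spec.lstrip("^").split(".")]
    # Index of the left-most non-zero component; if every given component is zero,
    # the last given component is the one that gets bumped (e.g. ^0.0 -> <0.1.0).
    bump = next((i for i, p in enumerate(parts) if p != 0), len(parts) - 1)
    padded = parts + [0] * (3 - len(parts))
    upper = padded[:bump] + [padded[bump] + 1] + [0] * (2 - bump)
    lower = ".".join(str(p) for p in padded)
    return lower, ".".join(str(p) for p in upper)
```

For instance, `caret_bounds("^0.15.0")` (the `grpc-interceptor` constraint) gives `("0.15.0", "0.16.0")`.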

@@ -1,30 +1,32 @@
-accelerate==0.20.3 ; python_version >= "3.9" and python_version < "3.13"
+accelerate>=0.22.0 ; python_version >= "3.9" and python_version < "3.13"
 aiohttp==3.8.5 ; python_version >= "3.9" and python_version < "3.13"
 aiosignal==1.3.1 ; python_version >= "3.9" and python_version < "3.13"
 async-timeout==4.0.3 ; python_version >= "3.9" and python_version < "3.13"
 attrs==23.1.0 ; python_version >= "3.9" and python_version < "3.13"
 backoff==2.2.1 ; python_version >= "3.9" and python_version < "3.13"
-bitsandbytes==0.41.1 ; python_version >= "3.9" and python_version < "3.13"
 certifi==2023.7.22 ; python_version >= "3.9" and python_version < "3.13"
 charset-normalizer==3.2.0 ; python_version >= "3.9" and python_version < "3.13"
 click==8.1.7 ; python_version >= "3.9" and python_version < "3.13"
 colorama==0.4.6 ; python_version >= "3.9" and python_version < "3.13" and (sys_platform == "win32" or platform_system == "Windows")
-datasets==2.14.5 ; python_version >= "3.9" and python_version < "3.13"
+coloredlogs==15.0.1 ; python_version >= "3.9" and python_version < "3.13"
+datasets==2.14.4 ; python_version >= "3.9" and python_version < "3.13"
 deprecated==1.2.14 ; python_version >= "3.9" and python_version < "3.13"
+diffusers==0.20.1 ; python_version >= "3.9" and python_version < "3.13"
 dill==0.3.7 ; python_version >= "3.9" and python_version < "3.13"
-einops==0.6.1 ; python_version >= "3.9" and python_version < "3.13"
-filelock==3.12.4 ; python_version >= "3.9" and python_version < "3.13"
+filelock==3.12.3 ; python_version >= "3.9" and python_version < "3.13"
 frozenlist==1.4.0 ; python_version >= "3.9" and python_version < "3.13"
 fsspec==2023.6.0 ; python_version >= "3.9" and python_version < "3.13"
 fsspec[http]==2023.6.0 ; python_version >= "3.9" and python_version < "3.13"
 googleapis-common-protos==1.60.0 ; python_version >= "3.9" and python_version < "3.13"
 grpc-interceptor==0.15.3 ; python_version >= "3.9" and python_version < "3.13"
-grpcio-reflection==1.58.0 ; python_version >= "3.9" and python_version < "3.13"
-grpcio-status==1.58.0 ; python_version >= "3.9" and python_version < "3.13"
-grpcio==1.58.0 ; python_version >= "3.9" and python_version < "3.13"
+grpcio-reflection==1.48.2 ; python_version >= "3.9" and python_version < "3.13"
+grpcio-status==1.48.2 ; python_version >= "3.9" and python_version < "3.13"
+grpcio==1.57.0 ; python_version >= "3.9" and python_version < "3.13"
 hf-transfer==0.1.3 ; python_version >= "3.9" and python_version < "3.13"
 huggingface-hub==0.16.4 ; python_version >= "3.9" and python_version < "3.13"
+humanfriendly==10.0 ; python_version >= "3.9" and python_version < "3.13"
 idna==3.4 ; python_version >= "3.9" and python_version < "3.13"
+importlib-metadata==6.8.0 ; python_version >= "3.9" and python_version < "3.13"
 jinja2==3.1.2 ; python_version >= "3.9" and python_version < "3.13"
 loguru==0.6.0 ; python_version >= "3.9" and python_version < "3.13"
 markupsafe==2.1.3 ; python_version >= "3.9" and python_version < "3.13"

@@ -32,7 +34,7 @@ mpmath==1.3.0 ; python_version >= "3.9" and python_version < "3.13"
 multidict==6.0.4 ; python_version >= "3.9" and python_version < "3.13"
 multiprocess==0.70.15 ; python_version >= "3.9" and python_version < "3.13"
 networkx==3.1 ; python_version >= "3.9" and python_version < "3.13"
-numpy==1.26.0 ; python_version >= "3.9" and python_version < "3.13"
+numpy==1.25.2 ; python_version >= "3.9" and python_version < "3.13"
 opentelemetry-api==1.15.0 ; python_version >= "3.9" and python_version < "3.13"
 opentelemetry-exporter-otlp-proto-grpc==1.15.0 ; python_version >= "3.9" and python_version < "3.13"
 opentelemetry-exporter-otlp-proto-http==1.15.0 ; python_version >= "3.9" and python_version < "3.13"

@@ -42,34 +44,35 @@ opentelemetry-instrumentation==0.36b0 ; python_version >= "3.9" and python_versi
 opentelemetry-proto==1.15.0 ; python_version >= "3.9" and python_version < "3.13"
 opentelemetry-sdk==1.15.0 ; python_version >= "3.9" and python_version < "3.13"
 opentelemetry-semantic-conventions==0.36b0 ; python_version >= "3.9" and python_version < "3.13"
+optimum==1.13.2 ; python_version >= "3.9" and python_version < "3.13"
 packaging==23.1 ; python_version >= "3.9" and python_version < "3.13"
-pandas==2.1.1 ; python_version >= "3.9" and python_version < "3.13"
+pandas==2.0.3 ; python_version >= "3.9" and python_version < "3.13"
 peft==0.4.0 ; python_version >= "3.9" and python_version < "3.13"
-pillow==10.0.1 ; python_version >= "3.9" and python_version < "3.13"
-protobuf==4.24.3 ; python_version >= "3.9" and python_version < "3.13"
+pillow==10.0.0 ; python_version >= "3.9" and python_version < "3.13"
+protobuf==3.20.3 ; python_version >= "3.9" and python_version < "3.13"
 psutil==5.9.5 ; python_version >= "3.9" and python_version < "3.13"
 pyarrow==13.0.0 ; python_version >= "3.9" and python_version < "3.13"
+pyreadline3==3.4.1 ; sys_platform == "win32" and python_version >= "3.9" and python_version < "3.13"
 python-dateutil==2.8.2 ; python_version >= "3.9" and python_version < "3.13"
-pytz==2023.3.post1 ; python_version >= "3.9" and python_version < "3.13"
+pytz==2023.3 ; python_version >= "3.9" and python_version < "3.13"
 pyyaml==6.0.1 ; python_version >= "3.9" and python_version < "3.13"
 regex==2023.8.8 ; python_version >= "3.9" and python_version < "3.13"
 requests==2.31.0 ; python_version >= "3.9" and python_version < "3.13"
-safetensors==0.3.3 ; python_version >= "3.9" and python_version < "3.13"
-scipy==1.11.2 ; python_version >= "3.9" and python_version < "3.13"
+safetensors==0.3.2 ; python_version >= "3.9" and python_version < "3.13"
 sentencepiece==0.1.99 ; python_version >= "3.9" and python_version < "3.13"
-setuptools==68.2.2 ; python_version >= "3.9" and python_version < "3.13"
+setuptools==68.1.2 ; python_version >= "3.9" and python_version < "3.13"
 six==1.16.0 ; python_version >= "3.9" and python_version < "3.13"
 sympy==1.12 ; python_version >= "3.9" and python_version < "3.13"
-texttable==1.6.7 ; python_version >= "3.9" and python_version < "3.13"
-tokenizers==0.13.3 ; python_version >= "3.9" and python_version < "3.13"
-torch==2.0.1 ; python_version >= "3.9" and python_version < "3.13"
+tokenizers==0.14.1 ; python_version >= "3.9" and python_version < "3.13"
 tqdm==4.66.1 ; python_version >= "3.9" and python_version < "3.13"
-transformers==4.33.2 ; python_version >= "3.9" and python_version < "3.13"
+transformers==4.34.1 ; python_version >= "3.9" and python_version < "3.13"
+transformers[sentencepiece]==4.34.1 ; python_version >= "3.9" and python_version < "3.13"
 typer==0.6.1 ; python_version >= "3.9" and python_version < "3.13"
-typing-extensions==4.8.0 ; python_version >= "3.9" and python_version < "3.13"
+typing-extensions==4.7.1 ; python_version >= "3.9" and python_version < "3.13"
 tzdata==2023.3 ; python_version >= "3.9" and python_version < "3.13"
-urllib3==2.0.5 ; python_version >= "3.9" and python_version < "3.13"
+urllib3==2.0.4 ; python_version >= "3.9" and python_version < "3.13"
 win32-setctime==1.1.0 ; python_version >= "3.9" and python_version < "3.13" and sys_platform == "win32"
 wrapt==1.15.0 ; python_version >= "3.9" and python_version < "3.13"
 xxhash==3.3.0 ; python_version >= "3.9" and python_version < "3.13"
 yarl==1.9.2 ; python_version >= "3.9" and python_version < "3.13"
+zipp==3.16.2 ; python_version >= "3.9" and python_version < "3.13"

@@ -6,7 +6,6 @@ from pathlib import Path
 from loguru import logger
 from typing import Optional
 from enum import Enum
-from huggingface_hub import hf_hub_download
 
 
 app = typer.Typer()

@@ -14,11 +13,7 @@ app = typer.Typer()
 
 class Quantization(str, Enum):
     bitsandbytes = "bitsandbytes"
-    bitsandbytes_nf4 = "bitsandbytes-nf4"
-    bitsandbytes_fp4 = "bitsandbytes-fp4"
     gptq = "gptq"
-    awq = "awq"
-    eetq = "eetq"
 
 
 class Dtype(str, Enum):
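This hunk trims `Quantization` to `bitsandbytes` and `gptq`. Because it is a `str`-valued `Enum`, a lookup by value for a removed option such as `"bitsandbytes-nf4"` now raises `ValueError`, which Typer would presumably report as an invalid value for `--quantize`. The standalone sketch below reproduces only the post-change enum; `parse_quantize` is a hypothetical helper and Typer is left out.

```python
from enum import Enum


class Quantization(str, Enum):
    # Members left after this commit; nf4/fp4, awq and eetq were removed.
    bitsandbytes = "bitsandbytes"
    gptq = "gptq"


def parse_quantize(value: str):
    """Return the matching member, or None if the value is no longer supported."""
    try:
        return Quantization(value)
    except ValueError:
        return None
```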

@@ -40,18 +35,9 @@ def serve(
     otlp_endpoint: Optional[str] = None,
 ):
     if sharded:
-        assert (
-            os.getenv("RANK", None) is not None
-        ), "RANK must be set when sharded is True"
-        assert (
-            os.getenv("WORLD_SIZE", None) is not None
-        ), "WORLD_SIZE must be set when sharded is True"
-        assert (
-            os.getenv("MASTER_ADDR", None) is not None
-        ), "MASTER_ADDR must be set when sharded is True"
-        assert (
-            os.getenv("MASTER_PORT", None) is not None
-        ), "MASTER_PORT must be set when sharded is True"
+        assert os.getenv("WORLD_SIZE", None) is not None, "WORLD_SIZE must be set when sharded is True"
+        assert os.getenv("MASTER_ADDR", None) is not None, "MASTER_ADDR must be set when sharded is True"
+        assert os.getenv("MASTER_PORT", None) is not None, "MASTER_PORT must be set when sharded is True"
 
     # Remove default handler
     logger.remove()

@@ -75,14 +61,29 @@ def serve(
 
     # Downgrade enum into str for easier management later on
     quantize = None if quantize is None else quantize.value
-    dtype = None if dtype is None else dtype.value
-    if dtype is not None and quantize is not None:
-        raise RuntimeError(
-            "Only 1 can be set between `dtype` and `quantize`, as they both decide how goes the final model."
-        )
-    server.serve(
-        model_id, revision, sharded, quantize, dtype, trust_remote_code, uds_path
-    )
+    dtype = "bfloat16" if dtype is None else dtype.value
+
+    logger.info("CLI SHARDED = {} DTYPE = {}".format(sharded, dtype))
+
+    if sharded:
+        tgi_file = Path(__file__).resolve().parent / "tgi_service.py"
+        num_shard = int(os.getenv("WORLD_SIZE", "1"))
+        logger.info("CLI SHARDED = {}".format(num_shard))
+        import subprocess
+
+        cmd = f"deepspeed --num_nodes 1 --num_gpus {num_shard} --no_local_rank {tgi_file} --model_id {model_id} --revision {revision} --sharded {sharded} --dtype {dtype} --uds_path {uds_path}"
+        logger.info("CLI server start deepspeed ={} ".format(cmd))
+        sys.stdout.flush()
+        sys.stderr.flush()
+        with subprocess.Popen(cmd, shell=True, executable="/bin/bash") as proc:
+            proc.wait()
+            sys.stdout.flush()
+            sys.stderr.flush()
+            if proc.returncode != 0:
+                logger.error(f"{cmd} exited with status = {proc.returncode}")
+                return proc.returncode
+    else:
+        server.serve(model_id, revision, dtype, uds_path, sharded)
 
 
 @app.command()
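The new sharded path in `serve` builds the DeepSpeed command as one f-string and runs it with `shell=True`, so a `model_id` or `uds_path` containing spaces or shell metacharacters would be split or interpreted by bash. One alternative, sketched below, builds an argument list and skips the shell. The flag names are copied from the f-string in this hunk; `build_deepspeed_cmd` and `format_for_log` are hypothetical helpers, and that `tgi_service.py` accepts exactly these arguments is taken from that f-string rather than verified.

```python
import shlex
from pathlib import Path
from typing import List, Optional


def build_deepspeed_cmd(
    tgi_file: Path,
    num_shard: int,
    model_id: str,
    revision: Optional[str],
    sharded: bool,
    dtype: str,
    uds_path: Path,
) -> List[str]:
    """Argument-list version of the f-string built in `serve` (hypothetical helper).

    Each value becomes exactly one argv entry, so spaces or shell metacharacters
    in `model_id` or `uds_path` are not re-interpreted by a shell.
    """
    return [
        "deepspeed",
        "--num_nodes", "1",
        "--num_gpus", str(num_shard),
        "--no_local_rank",
        str(tgi_file),
        "--model_id", model_id,
        # str() keeps the f-string behaviour, e.g. a missing revision becomes "None".
        "--revision", str(revision),
        "--sharded", str(sharded),
        "--dtype", dtype,
        "--uds_path", str(uds_path),
    ]


def format_for_log(cmd: List[str]) -> str:
    """Shell-quoted rendering of the command, suitable for a log line."""
    return shlex.join(cmd)
```

The list could then be passed to `subprocess.Popen(cmd)` without `shell=True` and awaited with `proc.wait()` as in the diff; like the original, this assumes `deepspeed` is on `PATH`.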

@@ -93,7 +94,6 @@ def download_weights(
     auto_convert: bool = True,
     logger_level: str = "INFO",
     json_output: bool = False,
-    trust_remote_code: bool = False,
 ):
     # Remove default handler
     logger.remove()

@@ -124,19 +124,6 @@ def download_weights(
     ) is not None
 
     if not is_local_model:
-        try:
-            adapter_config_filename = hf_hub_download(
-                model_id, revision=revision, filename="adapter_config.json"
-            )
-            utils.download_and_unload_peft(
-                model_id, revision, trust_remote_code=trust_remote_code
-            )
-            is_local_model = True
-            utils.weight_files(model_id, revision, extension)
-            return
-        except (utils.LocalEntryNotFoundError, utils.EntryNotFoundError):
-            pass
-
         # Try to download weights from the hub
         try:
             filenames = utils.weight_hub_files(model_id, revision, extension)

@@ -175,30 +162,24 @@ def download_weights(
         )
 
         # Safetensors final filenames
-        local_st_files = [
-            p.parent / f"{p.stem.lstrip('pytorch_')}.safetensors"
-            for p in local_pt_files
-        ]
+        local_st_files = [p.parent / f"{p.stem.lstrip('pytorch_')}.safetensors" for p in local_pt_files]
         try:
             import transformers
-            import json
+            from transformers import AutoConfig
 
-            if is_local_model:
-                config_filename = os.path.join(model_id, "config.json")
-            else:
-                config_filename = hf_hub_download(
-                    model_id, revision=revision, filename="config.json"
-                )
-            with open(config_filename, "r") as f:
-                config = json.load(f)
-            architecture = config["architectures"][0]
+            config = AutoConfig.from_pretrained(
+                model_id,
+                revision=revision,
+            )
+            architecture = config.architectures[0]
 
             class_ = getattr(transformers, architecture)
 
             # Name for this varible depends on transformers version.
             discard_names = getattr(class_, "_tied_weights_keys", [])

|