Mirror of https://github.com/huggingface/text-generation-inference.git (synced 2025-09-10 20:04:52 +00:00)

Merge branch 'main' into tgi-rocm

Commit 7903ad72a8

10

README.md

@@ -1,6 +1,8 @@

<div align="center">

<a href="https://www.youtube.com/watch?v=jlMAX2Oaht0">

<img width=560 width=315 alt="Making TGI deployment optimal" src="https://huggingface.co/datasets/Narsil/tgi_assets/resolve/main/thumbnail.png">

</a>

# Text Generation Inference

@@ -138,6 +140,10 @@ this will impact performance.

`text-generation-inference` is instrumented with distributed tracing using OpenTelemetry. You can use this feature

by setting the address to an OTLP collector with the `--otlp-endpoint` argument.
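As a minimal sketch, the Python snippet below assembles a `text-generation-launcher` command line with tracing enabled. The helper name `build_launcher_args`, the example model id, and the collector address (an OTLP collector assumed to listen on the conventional OTLP/gRPC port 4317) are illustrative assumptions; only the `--model-id` and `--otlp-endpoint` flags come from TGI's launcher.

```python
import shlex
from typing import List, Optional


def build_launcher_args(model_id: str, otlp_endpoint: Optional[str] = None) -> List[str]:
    """Hypothetical helper: build argv for text-generation-launcher."""
    args = ["text-generation-launcher", "--model-id", model_id]
    if otlp_endpoint is not None:
        # Spans are exported to the OTLP collector reachable at this address.
        args += ["--otlp-endpoint", otlp_endpoint]
    return args


if __name__ == "__main__":
    # Example values only; substitute your own model and collector address.
    argv = build_launcher_args("bigscience/bloom-560m", otlp_endpoint="http://localhost:4317")
    print(shlex.join(argv))
```

Leaving `otlp_endpoint` unset omits the flag entirely, so tracing stays off by default.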

|

||||

|

||||

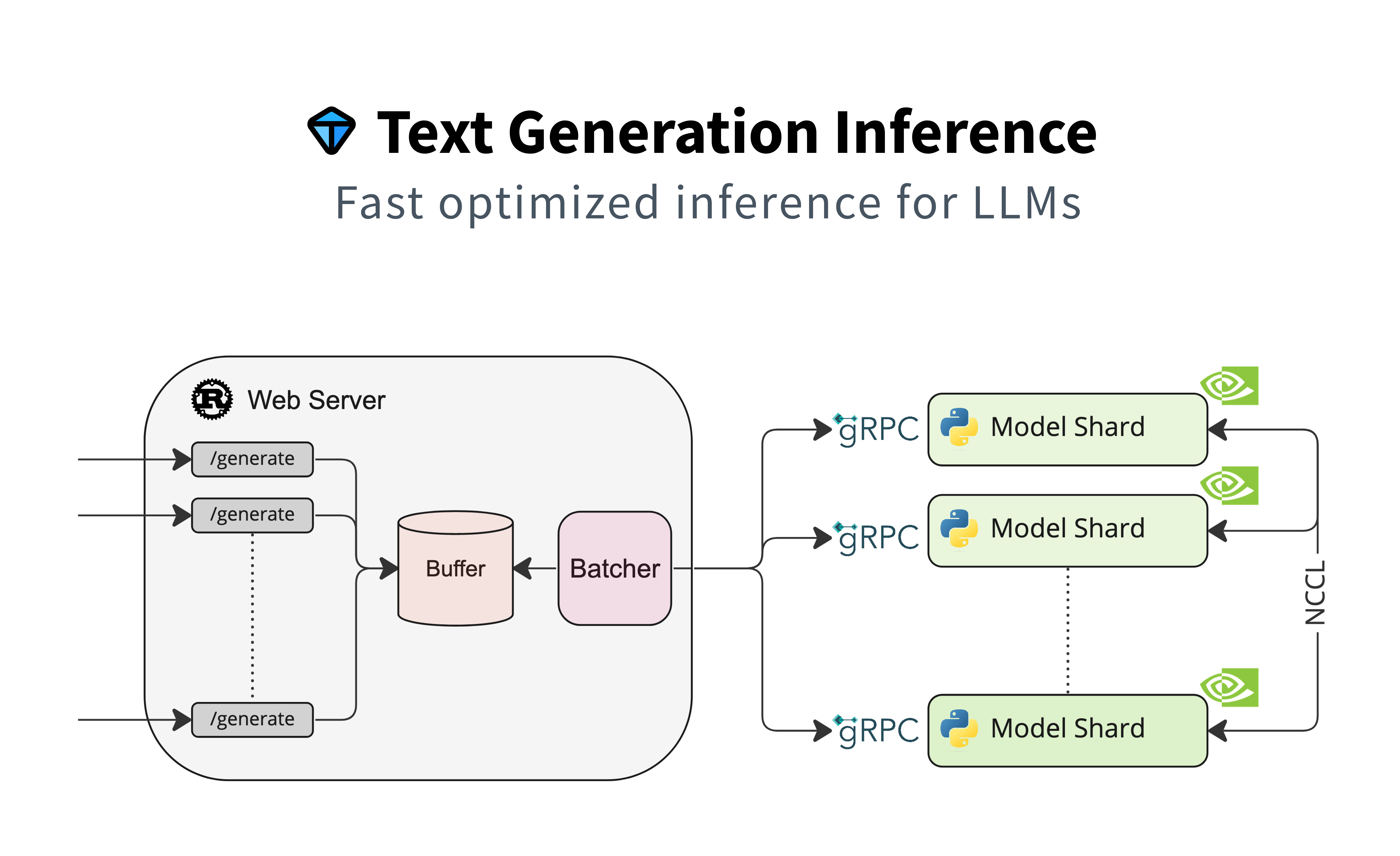

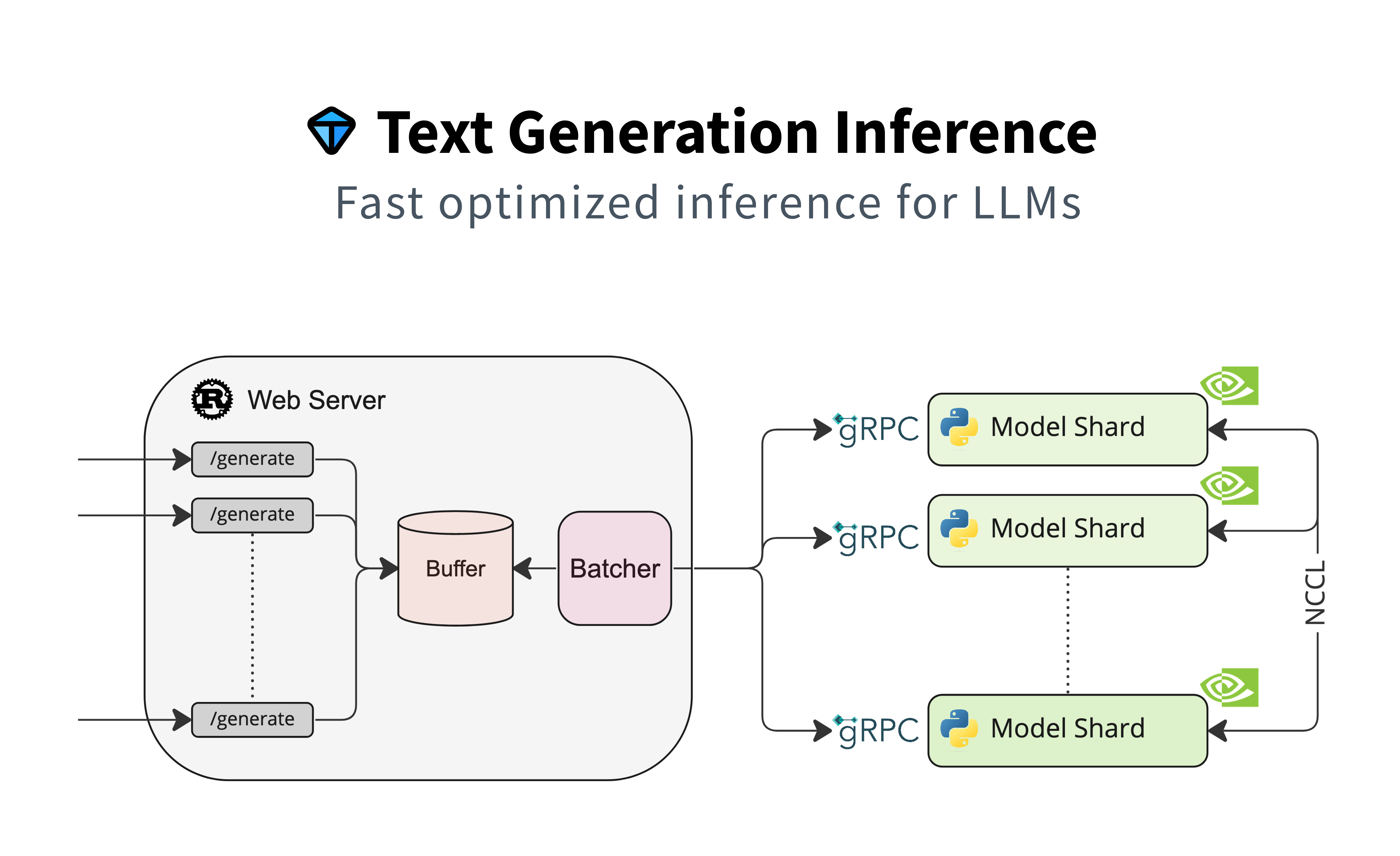

### Architecture

### Local install

You can also opt to install `text-generation-inference` locally.

@@ -4,7 +4,7 @@ Text Generation Inference improves the model in several aspects.

## Quantization

-TGI supports [bits-and-bytes](https://github.com/TimDettmers/bitsandbytes#bitsandbytes), [GPT-Q](https://arxiv.org/abs/2210.17323) and [AWQ](https://arxiv.org/abs/2306.00978) quantization. To speed up inference with quantization, simply set `quantize` flag to `bitsandbytes`, `gptq` or `awq` depending on the quantization technique you wish to use. When using GPT-Q quantization, you need to point to one of the models [here](https://huggingface.co/models?search=gptq) when using AWQ quantization, you need to point to one of the models [here](https://huggingface.co/models?search=awq). To get more information about quantization, please refer to [quantization guide](./../conceptual/quantization.md)

+TGI supports [bits-and-bytes](https://github.com/TimDettmers/bitsandbytes#bitsandbytes), [GPT-Q](https://arxiv.org/abs/2210.17323) and [AWQ](https://arxiv.org/abs/2306.00978) quantization. To speed up inference with quantization, simply set `quantize` flag to `bitsandbytes`, `gptq` or `awq` depending on the quantization technique you wish to use. When using GPT-Q quantization, you need to point to one of the models [here](https://huggingface.co/models?search=gptq) when using AWQ quantization, you need to point to one of the models [here](https://huggingface.co/models?search=awq). To get more information about quantization, please refer to [quantization guide](./../conceptual/quantization)
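The `quantize` setting corresponds to the launcher's `--quantize` flag. Below is a minimal sketch that checks the value against the three techniques named in this paragraph before building the command line. The helper `launcher_args` and the model id in the usage line are hypothetical, and the allowed set is limited to what this excerpt lists; the launcher itself may accept other values.

```python
from typing import List

# Only the techniques named in this docs excerpt; the launcher may accept more.
SUPPORTED_QUANTIZE = ("bitsandbytes", "gptq", "awq")


def launcher_args(model_id: str, quantize: str) -> List[str]:
    """Hypothetical helper: argv for text-generation-launcher with quantization enabled."""
    if quantize not in SUPPORTED_QUANTIZE:
        raise ValueError(
            f"unsupported quantize value {quantize!r}; expected one of {SUPPORTED_QUANTIZE}"
        )
    # For gptq/awq, model_id must point to an already-quantized checkpoint.
    return ["text-generation-launcher", "--model-id", model_id, "--quantize", quantize]


if __name__ == "__main__":
    print(launcher_args("your-org/your-gptq-model", "gptq"))
```

Validating early surfaces a typo in the technique name before any model weights are downloaded. It cannot check whether a GPT-Q or AWQ checkpoint is actually pre-quantized.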

## RoPE Scaling

@@ -670,7 +670,7 @@ class FlashCausalLM(Model):

self.device,

)

_, batch = self.generate_token(batch)

-except Exception as e:

+except torch.cuda.OutOfMemoryError as e:

raise RuntimeError(

f"Not enough memory to handle {len(batch.input_ids)} prefill tokens. "

f"You need to decrease `--max-batch-prefill-tokens`"
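The two `except` lines in this hunk differ in how broadly the warmup step catches errors. The minimal sketch below illustrates the narrower form. It assumes a stub `OutOfMemoryError` class standing in for `torch.cuda.OutOfMemoryError`, so it runs without PyTorch, and a hypothetical `warmup(generate_token, num_prefill_tokens)` signature that is not TGI's real `FlashCausalLM.warmup`. Only out-of-memory failures are rewritten into the `--max-batch-prefill-tokens` hint; any other exception keeps its original type and traceback.

```python
from typing import Callable, Tuple


class OutOfMemoryError(Exception):
    """Stand-in for torch.cuda.OutOfMemoryError so this sketch runs without PyTorch."""


def warmup(generate_token: Callable[[], Tuple[object, object]], num_prefill_tokens: int):
    """Run one prefill step; translate only out-of-memory failures into an actionable error."""
    try:
        _, batch = generate_token()
    except OutOfMemoryError as e:
        # Chain with `from e` so the original OOM stays visible as __cause__.
        raise RuntimeError(
            f"Not enough memory to handle {num_prefill_tokens} prefill tokens. "
            "You need to decrease `--max-batch-prefill-tokens`"
        ) from e
    # Exceptions other than OutOfMemoryError are not caught and propagate unchanged.
    return batch
```

With a catch-all `except Exception`, an unrelated bug such as a shape mismatch would be reported as a memory problem. The narrower handler avoids that misdiagnosis.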
