Mirror of https://github.com/huggingface/text-generation-inference.git, synced 2025-09-10 20:04:52 +00:00

Update README.md: added mission statement (commit 3062fa035d, parent e605c2a43e)

## Motivation

This repo aims to make 🤗 Text Generation Inference more awesome by focusing on real-world deployment scenarios that do not revolve purely around a $350M-funded ecosystem.

TGI is well suited to distributed, cloud-burst, and on-demand workloads, yet HF's focus seems to be long-running, single-model (enterprise-style) endpoints. We aim to change that.

## Goals

- Support model loading from wherever you want (HDFS, S3, HTTPS, …)

- Support adapters (LoRA/PEFT) without merging (possibly huge) checkpoints and uploading them to 🤗

- Reduce operational cost by making TGI-😑 a disposable, hot-swappable workhorse

- Get back to a truly open-source license

- Support more core frameworks than just HF's own products

`</endOfMissionStatement>`

<div align="center">

----

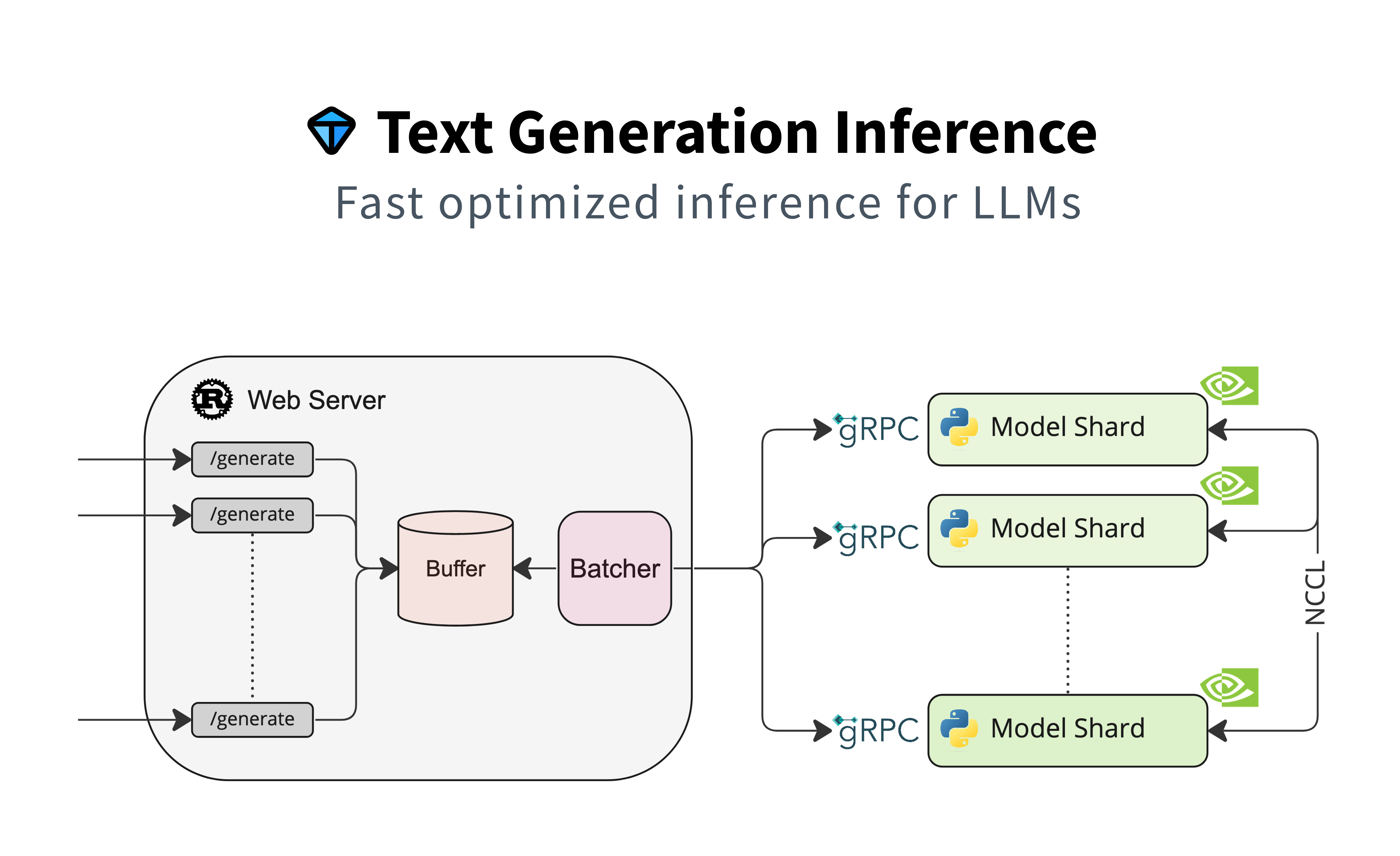

# Text Generation Inference

<a href="https://github.com/huggingface/text-generation-inference">
