# Text Generation Inference

@@ -18,116 +20,84 @@ to power Hugging Chat, the Inference API and Inference Endpoint.

## Table of contents

-- [Features](#features)

-- [Optimized Architectures](#optimized-architectures)

- [Get Started](#get-started)

- - [Docker](#docker)

- [API Documentation](#api-documentation)

- [Using a private or gated model](#using-a-private-or-gated-model)

- [A note on Shared Memory](#a-note-on-shared-memory-shm)

- [Distributed Tracing](#distributed-tracing)

- [Local Install](#local-install)

- [CUDA Kernels](#cuda-kernels)

-- [Run Falcon](#run-falcon)

+- [Optimized architectures](#optimized-architectures)

+- [Run locally](#run-locally)

- [Run](#run)

- [Quantization](#quantization)

- [Develop](#develop)

- [Testing](#testing)

-- [Other supported hardware](#other-supported-hardware)

-## Features

+Text Generation Inference (TGI) is a toolkit for deploying and serving Large Language Models (LLMs). TGI enables high-performance text generation for the most popular open-source LLMs, including Llama, Falcon, StarCoder, BLOOM, GPT-NeoX, and [more](https://huggingface.co/docs/text-generation-inference/supported_models). TGI implements many features, such as:

-- Serve the most popular Large Language Models with a simple launcher

+- Simple launcher to serve most popular LLMs

+- Production ready (distributed tracing with OpenTelemetry, Prometheus metrics)

- Tensor Parallelism for faster inference on multiple GPUs

- Token streaming using Server-Sent Events (SSE)

-- [Continuous batching of incoming requests](https://github.com/huggingface/text-generation-inference/tree/main/router) for increased total throughput

-- Optimized transformers code for inference using [flash-attention](https://github.com/HazyResearch/flash-attention) and [Paged Attention](https://github.com/vllm-project/vllm) on the most popular architectures

-- Quantization with [bitsandbytes](https://github.com/TimDettmers/bitsandbytes) and [GPT-Q](https://arxiv.org/abs/2210.17323)

+- Continuous batching of incoming requests for increased total throughput

+- Optimized transformers code for inference using [Flash Attention](https://github.com/HazyResearch/flash-attention) and [Paged Attention](https://github.com/vllm-project/vllm) on the most popular architectures

+- Quantization with:

+ - [bitsandbytes](https://github.com/TimDettmers/bitsandbytes)

+ - [GPT-Q](https://arxiv.org/abs/2210.17323)

+ - [EETQ](https://github.com/NetEase-FuXi/EETQ)

+ - [AWQ](https://github.com/casper-hansen/AutoAWQ)

- [Safetensors](https://github.com/huggingface/safetensors) weight loading

- Watermarking with [A Watermark for Large Language Models](https://arxiv.org/abs/2301.10226)

- Logits warper (temperature scaling, top-p, top-k, repetition penalty, more details see [transformers.LogitsProcessor](https://huggingface.co/docs/transformers/internal/generation_utils#transformers.LogitsProcessor))

- Stop sequences

- Log probabilities

-- Production ready (distributed tracing with Open Telemetry, Prometheus metrics)

-- Custom Prompt Generation: Easily generate text by providing custom prompts to guide the model's output.

-- Fine-tuning Support: Utilize fine-tuned models for specific tasks to achieve higher accuracy and performance.

+- [Speculation](https://huggingface.co/docs/text-generation-inference/conceptual/speculation): ~2x latency improvement

+- [Guidance/JSON](https://huggingface.co/docs/text-generation-inference/conceptual/guidance): specify the output format (for example, a JSON schema) to speed up inference and ensure the output is valid against that specification.

+- Custom Prompt Generation: Easily generate text by providing custom prompts to guide the model's output

+- Fine-tuning Support: Utilize fine-tuned models for specific tasks to achieve higher accuracy and performance

+

+### Hardware support

+

+- [Nvidia](https://github.com/huggingface/text-generation-inference/pkgs/container/text-generation-inference)

+- [AMD](https://github.com/huggingface/text-generation-inference/pkgs/container/text-generation-inference) (use the `-rocm` image tag)

+- [Inferentia](https://github.com/huggingface/optimum-neuron/tree/main/text-generation-inference)

+- [Intel GPU](https://github.com/huggingface/text-generation-inference/pull/1475)

+- [Gaudi](https://github.com/huggingface/tgi-gaudi)

+- [Google TPU](https://huggingface.co/docs/optimum-tpu/howto/serving)

-## Optimized architectures

-

-- [BLOOM](https://huggingface.co/bigscience/bloom)

-- [FLAN-T5](https://huggingface.co/google/flan-t5-xxl)

-- [Galactica](https://huggingface.co/facebook/galactica-120b)

-- [GPT-Neox](https://huggingface.co/EleutherAI/gpt-neox-20b)

-- [Llama](https://github.com/facebookresearch/llama)

-- [OPT](https://huggingface.co/facebook/opt-66b)

-- [SantaCoder](https://huggingface.co/bigcode/santacoder)

-- [Starcoder](https://huggingface.co/bigcode/starcoder)

-- [Falcon 7B](https://huggingface.co/tiiuae/falcon-7b)

-- [Falcon 40B](https://huggingface.co/tiiuae/falcon-40b)

-- [MPT](https://huggingface.co/mosaicml/mpt-30b)

-- [Llama V2](https://huggingface.co/meta-llama)

-

-Other architectures are supported on a best effort basis using:

-

-`AutoModelForCausalLM.from_pretrained(

, device_map="auto")`

-

-or

-

-`AutoModelForSeq2SeqLM.from_pretrained(, device_map="auto")`

-

-## Get started

+## Get Started

### Docker

-The easiest way of getting started is using the official Docker container:

+For a detailed starting guide, please see the [Quick Tour](https://huggingface.co/docs/text-generation-inference/quicktour). The easiest way of getting started is using the official Docker container:

```shell

-model=tiiuae/falcon-7b-instruct

-volume=$PWD/data # share a volume with the Docker container to avoid downloading weights every run

+model=HuggingFaceH4/zephyr-7b-beta

+# share a volume with the Docker container to avoid downloading weights every run

+volume=$PWD/data

-docker run --gpus all --shm-size 1g -p 8080:80 -v $volume:/data ghcr.io/huggingface/text-generation-inference:1.0.2 --model-id $model

-```

-**Note:** To use GPUs, you need to install the [NVIDIA Container Toolkit](https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/install-guide.html). We also recommend using NVIDIA drivers with CUDA version 11.8 or higher. For running the Docker container on a machine with no GPUs or CUDA support, it is enough to remove the `--gpus all` flag and add `--disable-custom-kernels`, please note CPU is not the intended platform for this project, so performance might be subpar.

-

-To see all options to serve your models (in the [code](https://github.com/huggingface/text-generation-inference/blob/main/launcher/src/main.rs) or in the cli):

-```

-text-generation-launcher --help

+docker run --gpus all --shm-size 1g -p 8080:80 -v $volume:/data \

+ ghcr.io/huggingface/text-generation-inference:2.1.1 --model-id $model

```

-You can then query the model using either the `/generate` or `/generate_stream` routes:

+You can then send requests to the server, for example to the streaming `/generate_stream` route:

-```shell

-curl 127.0.0.1:8080/generate \

- -X POST \

- -d '{"inputs":"What is Deep Learning?","parameters":{"max_new_tokens":20}}' \

- -H 'Content-Type: application/json'

-```

-

-```shell

+```bash

curl 127.0.0.1:8080/generate_stream \

-X POST \

-d '{"inputs":"What is Deep Learning?","parameters":{"max_new_tokens":20}}' \

-H 'Content-Type: application/json'

```
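The streaming route returns Server-Sent Events. Below is a minimal sketch of a standard-library Python client, assuming each event arrives as a `data:` line holding a JSON object whose `token` field has `text` and `special` keys (the shape the removed `text_generation` client example relied on). The helper names `iter_stream_tokens` and `generate_stream` are hypothetical and not part of TGI.

```python
import json
import urllib.request
from typing import Iterable, Iterator


def iter_stream_tokens(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield the text of each non-special token from raw SSE lines (assumed event shape)."""
    for raw in lines:
        line = raw.decode("utf-8").strip()
        # Only "data:" lines carry events; blank separator lines are skipped.
        if not line.startswith("data:"):
            continue
        event = json.loads(line[len("data:"):])
        token = event.get("token", {})
        # Skip special tokens such as end-of-sequence markers.
        if not token.get("special", False):
            yield token.get("text", "")


def generate_stream(
    prompt: str,
    url: str = "http://127.0.0.1:8080/generate_stream",
    max_new_tokens: int = 20,
) -> str:
    """POST the same payload as the curl example and join the streamed tokens."""
    body = json.dumps({"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}})
    request = urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        # The HTTP response object can be iterated line by line.
        return "".join(iter_stream_tokens(response))
```

With the server from the Docker example running, `print(generate_stream("What is Deep Learning?"))` should print the generated text. Parsing is kept separate from the network call so it can be checked against captured output.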

-or from Python:

+**Note:** To use NVIDIA GPUs, you need to install the [NVIDIA Container Toolkit](https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/install-guide.html). We also recommend using NVIDIA drivers with CUDA version 12.2 or higher. To run the Docker container on a machine with no GPUs or CUDA support, remove the `--gpus all` flag and add `--disable-custom-kernels`. Note that CPU is not the intended platform for this project, so performance might be subpar.

-```shell

-pip install text-generation

+**Note:** TGI supports AMD Instinct MI210 and MI250 GPUs. Details can be found in the [Supported Hardware documentation](https://huggingface.co/docs/text-generation-inference/supported_models#supported-hardware). To use AMD GPUs, please use `docker run --device /dev/kfd --device /dev/dri --shm-size 1g -p 8080:80 -v $volume:/data ghcr.io/huggingface/text-generation-inference:2.1.1-rocm --model-id $model` instead of the command above.

+

+To see all options to serve your models (in the [code](https://github.com/huggingface/text-generation-inference/blob/main/launcher/src/main.rs) or in the CLI):

```

-

-```python

-from text_generation import Client

-

-client = Client("http://127.0.0.1:8080")

-print(client.generate("What is Deep Learning?", max_new_tokens=20).generated_text)

-

-text = ""

-for response in client.generate_stream("What is Deep Learning?", max_new_tokens=20):

- if not response.token.special:

- text += response.token.text

-print(text)

+text-generation-launcher --help

```

### API documentation

@@ -137,14 +107,14 @@ The Swagger UI is also available at: [https://huggingface.github.io/text-generat

### Using a private or gated model

-You have the option to utilize the `HUGGING_FACE_HUB_TOKEN` environment variable for configuring the token employed by

+You can use the `HF_TOKEN` environment variable to configure the token used by

`text-generation-inference`. This allows you to gain access to protected resources.

For example, if you want to serve the gated Llama V2 model variants:

1. Go to https://huggingface.co/settings/tokens

2. Copy your cli READ token

-3. Export `HUGGING_FACE_HUB_TOKEN=`

+3. Export `HF_TOKEN=`

or with Docker:

@@ -153,7 +123,7 @@ model=meta-llama/Llama-2-7b-chat-hf

volume=$PWD/data # share a volume with the Docker container to avoid downloading weights every run

token=

-docker run --gpus all --shm-size 1g -e HUGGING_FACE_HUB_TOKEN=$token -p 8080:80 -v $volume:/data ghcr.io/huggingface/text-generation-inference:1.0.2 --model-id $model

+docker run --gpus all --shm-size 1g -e HF_TOKEN=$token -p 8080:80 -v $volume:/data ghcr.io/huggingface/text-generation-inference:2.1.1 --model-id $model

```
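If you script the launch, a minimal Python sketch that assembles the same `docker run` arguments is shown below. It assumes the token is already exported as `HF_TOKEN` on the host and passes `-e HF_TOKEN` without a value, which makes Docker copy the variable from the host environment so the token does not appear in the command line. The function name `tgi_docker_command` is hypothetical, and the default image tag is taken from the quick-start example.

```python
import os
import shlex


def tgi_docker_command(
    model_id: str,
    volume: str,
    image: str = "ghcr.io/huggingface/text-generation-inference:2.1.1",
) -> list[str]:
    """Build docker run arguments for a gated model (hypothetical helper)."""
    if not os.environ.get("HF_TOKEN"):
        raise RuntimeError("Export HF_TOKEN before launching a gated model")
    return [
        "docker", "run", "--gpus", "all", "--shm-size", "1g",
        # "-e HF_TOKEN" without "=value" forwards the host's value into the container.
        "-e", "HF_TOKEN",
        "-p", "8080:80",
        "-v", f"{volume}:/data",
        image,
        "--model-id", model_id,
    ]


if __name__ == "__main__" and os.environ.get("HF_TOKEN"):
    cmd = tgi_docker_command("meta-llama/Llama-2-7b-chat-hf", os.path.join(os.getcwd(), "data"))
    print(shlex.join(cmd))  # pass `cmd` to subprocess.run(cmd, check=True) to launch
```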

### A note on Shared Memory (shm)

@@ -185,7 +155,12 @@ this will impact performance.

### Distributed Tracing

`text-generation-inference` is instrumented with distributed tracing using OpenTelemetry. You can use this feature

-by setting the address to an OTLP collector with the `--otlp-endpoint` argument.

+by setting the address to an OTLP collector with the `--otlp-endpoint` argument. The default service name can be
+overridden with the `--otlp-service-name` argument.

+

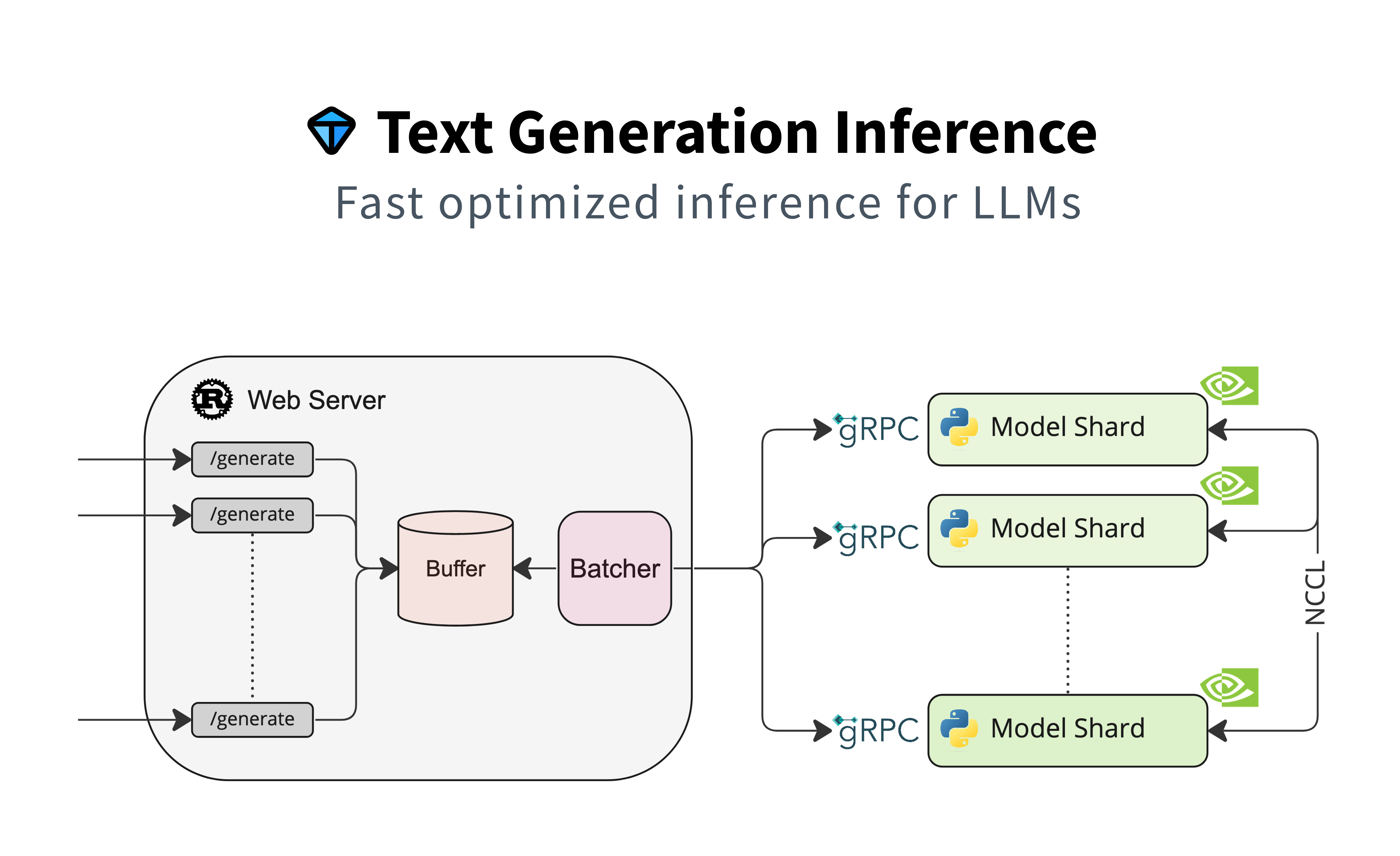

+### Architecture

+

+

### Local install

@@ -197,7 +172,7 @@ Python 3.9, e.g. using `conda`:

```shell

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh

-conda create -n text-generation-inference python=3.9

+conda create -n text-generation-inference python=3.11

conda activate text-generation-inference

```

@@ -223,7 +198,7 @@ Then run:

```shell

BUILD_EXTENSIONS=True make install # Install repository and HF/transformer fork with CUDA kernels

-make run-falcon-7b-instruct

+text-generation-launcher --model-id mistralai/Mistral-7B-Instruct-v0.2

```

**Note:** on some machines, you may also need the OpenSSL libraries and gcc. On Linux machines, run:

@@ -232,19 +207,26 @@ make run-falcon-7b-instruct

sudo apt-get install libssl-dev gcc -y

```

-### CUDA Kernels

+## Optimized architectures

-The custom CUDA kernels are only tested on NVIDIA A100s. If you have any installation or runtime issues, you can remove

-the kernels by using the `DISABLE_CUSTOM_KERNELS=True` environment variable.

+TGI works out of the box to serve optimized versions of all modern models. They can be found in [this list](https://huggingface.co/docs/text-generation-inference/supported_models).

-Be aware that the official Docker image has them enabled by default.

+Other architectures are supported on a best-effort basis using:

-## Run Falcon

+`AutoModelForCausalLM.from_pretrained(, device_map="auto")`

+

+or

+

+`AutoModelForSeq2SeqLM.from_pretrained(, device_map="auto")`

+

+

+

+## Run locally

### Run

```shell

-make run-falcon-7b-instruct

+text-generation-launcher --model-id mistralai/Mistral-7B-Instruct-v0.2

```

### Quantization

@@ -252,7 +234,7 @@ make run-falcon-7b-instruct

You can also quantize the weights with bitsandbytes to reduce the VRAM requirement:

```shell

-make run-falcon-7b-instruct-quantize

+text-generation-launcher --model-id mistralai/Mistral-7B-Instruct-v0.2 --quantize bitsandbytes

```

4bit quantization is available using the [NF4 and FP4 data types from bitsandbytes](https://arxiv.org/pdf/2305.14314.pdf). It can be enabled by providing `--quantize bitsandbytes-nf4` or `--quantize bitsandbytes-fp4` as a command line argument to `text-generation-launcher`.
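To get a feel for how much quantization reduces the VRAM requirement, here is a back-of-the-envelope sketch that counts weight memory only. It assumes a model of roughly 7 billion parameters and ignores the KV cache, activations, CUDA context, and per-block quantization scales, so real usage will be higher. `weight_memory_gib` is a hypothetical helper.

```python
# Approximate storage per parameter: 16-bit floats, 8-bit bitsandbytes, 4-bit NF4/FP4.
BYTES_PER_PARAM = {"float16": 2.0, "int8": 1.0, "nf4": 0.5, "fp4": 0.5}


def weight_memory_gib(num_params: float, dtype: str) -> float:
    """Memory needed for the model weights alone, in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


for dtype in ("float16", "int8", "nf4"):
    print(f"{dtype:>8}: {weight_memory_gib(7e9, dtype):5.1f} GiB")
# float16:  13.0 GiB
#    int8:   6.5 GiB
#     nf4:   3.3 GiB
```

Each halving of the bits per weight roughly halves the weight footprint, which is why 4-bit modes can fit a 7B model on much smaller GPUs.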

@@ -277,10 +259,3 @@ make rust-tests

# integration tests

make integration-tests

```

-

-

-## Other supported hardware

-

-TGI is also supported on the following AI hardware accelerators:

-- *Habana first-gen Gaudi and Gaudi2:* checkout [here](https://github.com/huggingface/optimum-habana/tree/main/text-generation-inference) how to serve models with TGI on Gaudi and Gaudi2 with [Optimum Habana](https://huggingface.co/docs/optimum/habana/index)

-

diff --git a/assets/architecture.jpg b/assets/architecture.jpg

deleted file mode 100644

index c4a511c9..00000000

Binary files a/assets/architecture.jpg and /dev/null differ

diff --git a/assets/architecture.png b/assets/architecture.png

new file mode 100644

index 00000000..1bcd1283

Binary files /dev/null and b/assets/architecture.png differ

diff --git a/assets/tgi_grafana.json b/assets/tgi_grafana.json

new file mode 100644

index 00000000..5f5a74ad

--- /dev/null

+++ b/assets/tgi_grafana.json

@@ -0,0 +1,3999 @@

+{

+ "__inputs": [

+ {

+ "name": "DS_PROMETHEUS_EKS API INFERENCE PROD",

+ "label": "Prometheus EKS API Inference Prod",

+ "description": "",

+ "type": "datasource",

+ "pluginId": "prometheus",

+ "pluginName": "Prometheus"

+ }

+ ],

+ "__elements": {},

+ "__requires": [

+ {

+ "type": "panel",

+ "id": "gauge",

+ "name": "Gauge",

+ "version": ""

+ },

+ {

+ "type": "grafana",

+ "id": "grafana",

+ "name": "Grafana",

+ "version": "10.0.2"

+ },

+ {

+ "type": "panel",

+ "id": "heatmap",

+ "name": "Heatmap",

+ "version": ""

+ },

+ {

+ "type": "datasource",

+ "id": "prometheus",

+ "name": "Prometheus",

+ "version": "1.0.0"

+ },

+ {

+ "type": "panel",

+ "id": "timeseries",

+ "name": "Time series",

+ "version": ""

+ }

+ ],

+ "annotations": {

+ "list": [

+ {

+ "builtIn": 1,

+ "datasource": {

+ "type": "grafana",

+ "uid": "-- Grafana --"

+ },

+ "enable": true,

+ "hide": true,

+ "iconColor": "rgba(0, 211, 255, 1)",

+ "name": "Annotations & Alerts",

+ "target": {

+ "limit": 100,

+ "matchAny": false,

+ "tags": [],

+ "type": "dashboard"

+ },

+ "type": "dashboard"

+ }

+ ]

+ },

+ "editable": true,

+ "fiscalYearStartMonth": 0,

+ "graphTooltip": 2,

+ "id": 551,

+ "links": [],

+ "liveNow": false,

+ "panels": [

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "fieldConfig": {

+ "defaults": {

+ "color": {

+ "mode": "thresholds"

+ },

+ "fieldMinMax": false,

+ "mappings": [],

+ "min": 0,

+ "thresholds": {

+ "mode": "absolute",

+ "steps": [

+ {

+ "color": "green",

+ "value": null

+ },

+ {

+ "color": "red",

+ "value": 1000

+ }

+ ]

+ },

+ "unit": "ms"

+ },

+ "overrides": []

+ },

+ "gridPos": {

+ "h": 7,

+ "w": 8,

+ "x": 0,

+ "y": 0

+ },

+ "id": 49,

+ "options": {

+ "colorMode": "value",

+ "graphMode": "area",

+ "justifyMode": "auto",

+ "orientation": "auto",

+ "reduceOptions": {

+ "calcs": [

+ "mean"

+ ],

+ "fields": "",

+ "values": false

+ },

+ "showPercentChange": false,

+ "textMode": "auto",

+ "wideLayout": true

+ },

+ "pluginVersion": "10.4.2",

+ "targets": [

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "(histogram_quantile(0.5, sum by (le) (rate(tgi_request_queue_duration_bucket{container=\"$service\"}[10m]))) * 1000) > 0",

+ "hide": true,

+ "instant": false,

+ "legendFormat": "__auto",

+ "range": true,

+ "refId": "B"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "(histogram_quantile(0.5, sum by (le) (rate(tgi_batch_inference_duration_bucket{method=\"prefill\", container=\"$service\"}[10m]))) * 1000) > 0",

+ "hide": true,

+ "instant": false,

+ "legendFormat": "__auto",

+ "range": true,

+ "refId": "C"

+ },

+ {

+ "datasource": {

+ "name": "Expression",

+ "type": "__expr__",

+ "uid": "__expr__"

+ },

+ "expression": "$B + $C",

+ "hide": false,

+ "refId": "D",

+ "type": "math"

+ }

+ ],

+ "title": "Time to first token",

+ "type": "stat"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "fieldConfig": {

+ "defaults": {

+ "color": {

+ "mode": "thresholds"

+ },

+ "mappings": [],

+ "min": 0,

+ "thresholds": {

+ "mode": "absolute",

+ "steps": [

+ {

+ "color": "green",

+ "value": null

+ },

+ {

+ "color": "red",

+ "value": 80

+ }

+ ]

+ },

+ "unit": "ms"

+ },

+ "overrides": []

+ },

+ "gridPos": {

+ "h": 7,

+ "w": 8,

+ "x": 9,

+ "y": 0

+ },

+ "id": 44,

+ "options": {

+ "colorMode": "value",

+ "graphMode": "area",

+ "justifyMode": "auto",

+ "orientation": "auto",

+ "reduceOptions": {

+ "calcs": [

+ "mean"

+ ],

+ "fields": "",

+ "values": false

+ },

+ "showPercentChange": false,

+ "textMode": "auto",

+ "wideLayout": true

+ },

+ "pluginVersion": "10.4.2",

+ "targets": [

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "(histogram_quantile(0.5, sum by (le) (rate(tgi_batch_forward_duration_bucket{method=\"decode\", container=\"$service\"}[10m]))) * 1000)>0",

+ "instant": false,

+ "range": true,

+ "refId": "A"

+ }

+ ],

+ "title": "Decode per-token latency",

+ "type": "stat"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "fieldConfig": {

+ "defaults": {

+ "color": {

+ "mode": "thresholds"

+ },

+ "mappings": [],

+ "min": 0,

+ "thresholds": {

+ "mode": "absolute",

+ "steps": [

+ {

+ "color": "green",

+ "value": null

+ }

+ ]

+ },

+ "unit": "short"

+ },

+ "overrides": []

+ },

+ "gridPos": {

+ "h": 7,

+ "w": 7,

+ "x": 17,

+ "y": 0

+ },

+ "id": 45,

+ "options": {

+ "colorMode": "value",

+ "graphMode": "area",

+ "justifyMode": "auto",

+ "orientation": "auto",

+ "reduceOptions": {

+ "calcs": [

+ "mean"

+ ],

+ "fields": "",

+ "values": false

+ },

+ "showPercentChange": false,

+ "textMode": "auto",

+ "wideLayout": true

+ },

+ "pluginVersion": "10.4.2",

+ "targets": [

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "sum((rate(tgi_request_generated_tokens_sum{container=\"$service\"}[10m]) / rate(tgi_request_generated_tokens_count{container=\"$service\"}[10m]))>0)",

+ "instant": false,

+ "range": true,

+ "refId": "A"

+ }

+ ],

+ "title": "Throughput (generated tok/s)",

+ "type": "stat"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "fieldConfig": {

+ "defaults": {

+ "color": {

+ "mode": "palette-classic"

+ },

+ "custom": {

+ "axisBorderShow": false,

+ "axisCenteredZero": false,

+ "axisColorMode": "text",

+ "axisLabel": "",

+ "axisPlacement": "auto",

+ "barAlignment": 0,

+ "drawStyle": "line",

+ "fillOpacity": 0,

+ "gradientMode": "none",

+ "hideFrom": {

+ "legend": false,

+ "tooltip": false,

+ "viz": false

+ },

+ "insertNulls": false,

+ "lineInterpolation": "linear",

+ "lineWidth": 1,

+ "pointSize": 5,

+ "scaleDistribution": {

+ "type": "linear"

+ },

+ "showPoints": "never",

+ "spanNulls": false,

+ "stacking": {

+ "group": "A",

+ "mode": "none"

+ },

+ "thresholdsStyle": {

+ "mode": "off"

+ }

+ },

+ "mappings": [],

+ "thresholds": {

+ "mode": "absolute",

+ "steps": [

+ {

+ "color": "green",

+ "value": null

+ },

+ {

+ "color": "red",

+ "value": 80

+ }

+ ]

+ },

+ "unit": "none"

+ },

+ "overrides": [

+ {

+ "matcher": {

+ "id": "byName",

+ "options": "p50"

+ },

+ "properties": [

+ {

+ "id": "color",

+ "value": {

+ "fixedColor": "green",

+ "mode": "fixed"

+ }

+ }

+ ]

+ },

+ {

+ "matcher": {

+ "id": "byName",

+ "options": "p90"

+ },

+ "properties": [

+ {

+ "id": "color",

+ "value": {

+ "fixedColor": "orange",

+ "mode": "fixed"

+ }

+ }

+ ]

+ },

+ {

+ "matcher": {

+ "id": "byName",

+ "options": "p99"

+ },

+ "properties": [

+ {

+ "id": "color",

+ "value": {

+ "fixedColor": "red",

+ "mode": "fixed"

+ }

+ }

+ ]

+ }

+ ]

+ },

+ "gridPos": {

+ "h": 8,

+ "w": 12,

+ "x": 0,

+ "y": 7

+ },

+ "id": 48,

+ "options": {

+ "legend": {

+ "calcs": [

+ "min",

+ "max"

+ ],

+ "displayMode": "list",

+ "placement": "bottom",

+ "showLegend": true

+ },

+ "tooltip": {

+ "mode": "single",

+ "sort": "none"

+ }

+ },

+ "targets": [

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "histogram_quantile(0.5, sum by (le) (rate(tgi_request_input_length_bucket{container=\"$service\"}[10m])))",

+ "legendFormat": "p50",

+ "range": true,

+ "refId": "A"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "histogram_quantile(0.9, sum by (le) (rate(tgi_request_input_length_bucket{container=\"$service\"}[10m])))",

+ "hide": false,

+ "legendFormat": "p90",

+ "range": true,

+ "refId": "B"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "histogram_quantile(0.99, sum by (le) (rate(tgi_request_input_length_bucket{container=\"$service\"}[10m])))",

+ "hide": false,

+ "legendFormat": "p99",

+ "range": true,

+ "refId": "C"

+ }

+ ],

+ "title": "Number of tokens per prompt",

+ "type": "timeseries"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "fieldConfig": {

+ "defaults": {

+ "color": {

+ "mode": "palette-classic"

+ },

+ "custom": {

+ "axisBorderShow": false,

+ "axisCenteredZero": false,

+ "axisColorMode": "text",

+ "axisLabel": "",

+ "axisPlacement": "auto",

+ "barAlignment": 0,

+ "drawStyle": "line",

+ "fillOpacity": 0,

+ "gradientMode": "none",

+ "hideFrom": {

+ "legend": false,

+ "tooltip": false,

+ "viz": false

+ },

+ "insertNulls": false,

+ "lineInterpolation": "linear",

+ "lineWidth": 1,

+ "pointSize": 5,

+ "scaleDistribution": {

+ "type": "linear"

+ },

+ "showPoints": "never",

+ "spanNulls": false,

+ "stacking": {

+ "group": "A",

+ "mode": "none"

+ },

+ "thresholdsStyle": {

+ "mode": "off"

+ }

+ },

+ "mappings": [],

+ "thresholds": {

+ "mode": "absolute",

+ "steps": [

+ {

+ "color": "green",

+ "value": null

+ },

+ {

+ "color": "red",

+ "value": 80

+ }

+ ]

+ },

+ "unit": "none"

+ },

+ "overrides": [

+ {

+ "matcher": {

+ "id": "byName",

+ "options": "p50"

+ },

+ "properties": [

+ {

+ "id": "color",

+ "value": {

+ "fixedColor": "green",

+ "mode": "fixed"

+ }

+ }

+ ]

+ },

+ {

+ "matcher": {

+ "id": "byName",

+ "options": "p90"

+ },

+ "properties": [

+ {

+ "id": "color",

+ "value": {

+ "fixedColor": "orange",

+ "mode": "fixed"

+ }

+ }

+ ]

+ },

+ {

+ "matcher": {

+ "id": "byName",

+ "options": "p99"

+ },

+ "properties": [

+ {

+ "id": "color",

+ "value": {

+ "fixedColor": "red",

+ "mode": "fixed"

+ }

+ }

+ ]

+ }

+ ]

+ },

+ "gridPos": {

+ "h": 8,

+ "w": 12,

+ "x": 12,

+ "y": 7

+ },

+ "id": 30,

+ "options": {

+ "legend": {

+ "calcs": [

+ "min",

+ "max"

+ ],

+ "displayMode": "list",

+ "placement": "bottom",

+ "showLegend": true

+ },

+ "tooltip": {

+ "mode": "single",

+ "sort": "none"

+ }

+ },

+ "targets": [

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "histogram_quantile(0.5, sum by (le) (rate(tgi_request_generated_tokens_bucket{container=\"$service\"}[10m])))",

+ "legendFormat": "p50",

+ "range": true,

+ "refId": "A"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "histogram_quantile(0.9, sum by (le) (rate(tgi_request_generated_tokens_bucket{container=\"$service\"}[10m])))",

+ "hide": false,

+ "legendFormat": "p90",

+ "range": true,

+ "refId": "B"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "histogram_quantile(0.99, sum by (le) (rate(tgi_request_generated_tokens_bucket{container=\"$service\"}[10m])))",

+ "hide": false,

+ "legendFormat": "p99",

+ "range": true,

+ "refId": "C"

+ }

+ ],

+ "title": "Number of generated tokens per request",

+ "type": "timeseries"

+ },

+ {

+ "collapsed": false,

+ "gridPos": {

+ "h": 1,

+ "w": 24,

+ "x": 0,

+ "y": 15

+ },

+ "id": 20,

+ "panels": [],

+ "title": "General",

+ "type": "row"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "fieldConfig": {

+ "defaults": {

+ "color": {

+ "mode": "palette-classic"

+ },

+ "custom": {

+ "axisBorderShow": false,

+ "axisCenteredZero": false,

+ "axisColorMode": "text",

+ "axisLabel": "",

+ "axisPlacement": "auto",

+ "barAlignment": 0,

+ "drawStyle": "line",

+ "fillOpacity": 30,

+ "gradientMode": "none",

+ "hideFrom": {

+ "legend": false,

+ "tooltip": false,

+ "viz": false

+ },

+ "insertNulls": false,

+ "lineInterpolation": "linear",

+ "lineWidth": 1,

+ "pointSize": 5,

+ "scaleDistribution": {

+ "type": "linear"

+ },

+ "showPoints": "never",

+ "spanNulls": false,

+ "stacking": {

+ "group": "A",

+ "mode": "none"

+ },

+ "thresholdsStyle": {

+ "mode": "off"

+ }

+ },

+ "mappings": [],

+ "thresholds": {

+ "mode": "absolute",

+ "steps": [

+ {

+ "color": "green",

+ "value": null

+ },

+ {

+ "color": "red",

+ "value": 80

+ }

+ ]

+ }

+ },

+ "overrides": []

+ },

+ "gridPos": {

+ "h": 8,

+ "w": 6,

+ "x": 0,

+ "y": 16

+ },

+ "id": 4,

+ "maxDataPoints": 100,

+ "options": {

+ "legend": {

+ "calcs": [

+ "min",

+ "max"

+ ],

+ "displayMode": "list",

+ "placement": "bottom",

+ "showLegend": true

+ },

+ "tooltip": {

+ "mode": "single",

+ "sort": "none"

+ }

+ },

+ "targets": [

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "sum(increase(tgi_request_success{container=\"$service\"}[1m]))",

+ "legendFormat": "Success",

+ "range": true,

+ "refId": "A"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "sum(increase(tgi_request_failure{container=\"$service\"}[1m])) by (err)",

+ "hide": false,

+ "legendFormat": "Error: {{err}}",

+ "range": true,

+ "refId": "B"

+ }

+ ],

+ "title": "Requests",

+ "type": "timeseries"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "fieldConfig": {

+ "defaults": {

+ "color": {

+ "mode": "palette-classic"

+ },

+ "custom": {

+ "axisBorderShow": false,

+ "axisCenteredZero": false,

+ "axisColorMode": "text",

+ "axisLabel": "",

+ "axisPlacement": "auto",

+ "barAlignment": 0,

+ "drawStyle": "line",

+ "fillOpacity": 0,

+ "gradientMode": "none",

+ "hideFrom": {

+ "legend": false,

+ "tooltip": false,

+ "viz": false

+ },

+ "insertNulls": false,

+ "lineInterpolation": "linear",

+ "lineWidth": 1,

+ "pointSize": 5,

+ "scaleDistribution": {

+ "type": "linear"

+ },

+ "showPoints": "never",

+ "spanNulls": false,

+ "stacking": {

+ "group": "A",

+ "mode": "none"

+ },

+ "thresholdsStyle": {

+ "mode": "off"

+ }

+ },

+ "mappings": [],

+ "thresholds": {

+ "mode": "absolute",

+ "steps": [

+ {

+ "color": "green",

+ "value": null

+ },

+ {

+ "color": "red",

+ "value": 80

+ }

+ ]

+ },

+ "unit": "s"

+ },

+ "overrides": [

+ {

+ "matcher": {

+ "id": "byName",

+ "options": "p50"

+ },

+ "properties": [

+ {

+ "id": "color",

+ "value": {

+ "fixedColor": "green",

+ "mode": "fixed"

+ }

+ }

+ ]

+ },

+ {

+ "matcher": {

+ "id": "byName",

+ "options": "p90"

+ },

+ "properties": [

+ {

+ "id": "color",

+ "value": {

+ "fixedColor": "orange",

+ "mode": "fixed"

+ }

+ }

+ ]

+ },

+ {

+ "matcher": {

+ "id": "byName",

+ "options": "p99"

+ },

+ "properties": [

+ {

+ "id": "color",

+ "value": {

+ "fixedColor": "red",

+ "mode": "fixed"

+ }

+ }

+ ]

+ }

+ ]

+ },

+ "gridPos": {

+ "h": 13,

+ "w": 9,

+ "x": 6,

+ "y": 16

+ },

+ "id": 6,

+ "options": {

+ "legend": {

+ "calcs": [

+ "min",

+ "max"

+ ],

+ "displayMode": "list",

+ "placement": "bottom",

+ "showLegend": true

+ },

+ "tooltip": {

+ "mode": "single",

+ "sort": "none"

+ }

+ },

+ "targets": [

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "histogram_quantile(0.5, sum by (le) (rate(tgi_request_mean_time_per_token_duration_bucket{container=\"$service\"}[10m])))",

+ "legendFormat": "p50",

+ "range": true,

+ "refId": "A"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "histogram_quantile(0.9, sum by (le) (rate(tgi_request_mean_time_per_token_duration_bucket{container=\"$service\"}[10m])))",

+ "hide": false,

+ "legendFormat": "p90",

+ "range": true,

+ "refId": "B"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "histogram_quantile(0.99, sum by (le) (rate(tgi_request_mean_time_per_token_duration_bucket{container=\"$service\"}[10m])))",

+ "hide": false,

+ "legendFormat": "p99",

+ "range": true,

+ "refId": "C"

+ }

+ ],

+ "title": "Mean Time Per Token quantiles",

+ "type": "timeseries"

+ },

+ {

+ "cards": {},

+ "color": {

+ "cardColor": "#5794F2",

+ "colorScale": "linear",

+ "colorScheme": "interpolateSpectral",

+ "exponent": 0.5,

+ "min": 0,

+ "mode": "opacity"

+ },

+ "dataFormat": "tsbuckets",

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "fieldConfig": {

+ "defaults": {

+ "custom": {

+ "hideFrom": {

+ "legend": false,

+ "tooltip": false,

+ "viz": false

+ },

+ "scaleDistribution": {

+ "type": "linear"

+ }

+ }

+ },

+ "overrides": []

+ },

+ "gridPos": {

+ "h": 13,

+ "w": 9,

+ "x": 15,

+ "y": 16

+ },

+ "heatmap": {},

+ "hideZeroBuckets": false,

+ "highlightCards": true,

+ "id": 13,

+ "legend": {

+ "show": false

+ },

+ "maxDataPoints": 25,

+ "options": {

+ "calculate": false,

+ "calculation": {},

+ "cellGap": 2,

+ "cellValues": {},

+ "color": {

+ "exponent": 0.5,

+ "fill": "#5794F2",

+ "min": 0,

+ "mode": "scheme",

+ "reverse": false,

+ "scale": "exponential",

+ "scheme": "Spectral",

+ "steps": 128

+ },

+ "exemplars": {

+ "color": "rgba(255,0,255,0.7)"

+ },

+ "filterValues": {

+ "le": 1e-9

+ },

+ "legend": {

+ "show": false

+ },

+ "rowsFrame": {

+ "layout": "auto"

+ },

+ "showValue": "never",

+ "tooltip": {

+ "mode": "single",

+ "showColorScale": false,

+ "yHistogram": false

+ },

+ "yAxis": {

+ "axisPlacement": "left",

+ "decimals": 1,

+ "reverse": false,

+ "unit": "s"

+ }

+ },

+ "pluginVersion": "10.4.2",

+ "reverseYBuckets": false,

+ "targets": [

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "exemplar": true,

+ "expr": "sum(increase(tgi_request_mean_time_per_token_duration_bucket{container=\"$service\"}[5m])) by (le)",

+ "format": "heatmap",

+ "interval": "",

+ "legendFormat": "{{ le }}",

+ "range": true,

+ "refId": "A"

+ }

+ ],

+ "title": "Mean Time Per Token",

+ "tooltip": {

+ "show": true,

+ "showHistogram": false

+ },

+ "type": "heatmap",

+ "xAxis": {

+ "show": true

+ },

+ "yAxis": {

+ "decimals": 1,

+ "format": "s",

+ "logBase": 1,

+ "show": true

+ },

+ "yBucketBound": "auto"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "fieldConfig": {

+ "defaults": {

+ "color": {

+ "mode": "palette-classic"

+ },

+ "custom": {

+ "axisBorderShow": false,

+ "axisCenteredZero": false,

+ "axisColorMode": "text",

+ "axisLabel": "",

+ "axisPlacement": "auto",

+ "barAlignment": 0,

+ "drawStyle": "line",

+ "fillOpacity": 0,

+ "gradientMode": "none",

+ "hideFrom": {

+ "legend": false,

+ "tooltip": false,

+ "viz": false

+ },

+ "insertNulls": false,

+ "lineInterpolation": "linear",

+ "lineWidth": 1,

+ "pointSize": 5,

+ "scaleDistribution": {

+ "type": "linear"

+ },

+ "showPoints": "auto",

+ "spanNulls": false,

+ "stacking": {

+ "group": "A",

+ "mode": "none"

+ },

+ "thresholdsStyle": {

+ "mode": "off"

+ }

+ },

+ "mappings": [],

+ "thresholds": {

+ "mode": "percentage",

+ "steps": [

+ {

+ "color": "green",

+ "value": null

+ },

+ {

+ "color": "orange",

+ "value": 70

+ },

+ {

+ "color": "red",

+ "value": 85

+ }

+ ]

+ }

+ },

+ "overrides": []

+ },

+ "gridPos": {

+ "h": 5,

+ "w": 3,

+ "x": 0,

+ "y": 24

+ },

+ "id": 18,

+ "options": {

+ "legend": {

+ "calcs": [],

+ "displayMode": "list",

+ "placement": "bottom",

+ "showLegend": false

+ },

+ "tooltip": {

+ "mode": "single",

+ "sort": "none"

+ }

+ },

+ "pluginVersion": "9.1.0",

+ "targets": [

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "count(tgi_request_count{container=\"$service\"})",

+ "legendFormat": "Replicas",

+ "range": true,

+ "refId": "A"

+ }

+ ],

+ "title": "Number of replicas",

+ "type": "timeseries"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "fieldConfig": {

+ "defaults": {

+ "mappings": [],

+ "thresholds": {

+ "mode": "percentage",

+ "steps": [

+ {

+ "color": "green",

+ "value": null

+ },

+ {

+ "color": "orange",

+ "value": 70

+ },

+ {

+ "color": "red",

+ "value": 85

+ }

+ ]

+ }

+ },

+ "overrides": []

+ },

+ "gridPos": {

+ "h": 5,

+ "w": 3,

+ "x": 3,

+ "y": 24

+ },

+ "id": 32,

+ "options": {

+ "minVizHeight": 75,

+ "minVizWidth": 75,

+ "orientation": "auto",

+ "reduceOptions": {

+ "calcs": [

+ "lastNotNull"

+ ],

+ "fields": "",

+ "values": false

+ },

+ "showThresholdLabels": false,

+ "showThresholdMarkers": true,

+ "sizing": "auto"

+ },

+ "pluginVersion": "10.4.2",

+ "targets": [

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "sum(tgi_queue_size{container=\"$service\"})",

+ "legendFormat": "__auto",

+ "range": true,

+ "refId": "A"

+ }

+ ],

+ "title": "Queue Size",

+ "type": "gauge"

+ },

+ {

+ "collapsed": false,

+ "gridPos": {

+ "h": 1,

+ "w": 24,

+ "x": 0,

+ "y": 29

+ },

+ "id": 26,

+ "panels": [],

+ "title": "Batching",

+ "type": "row"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "fieldConfig": {

+ "defaults": {

+ "color": {

+ "mode": "palette-classic"

+ },

+ "custom": {

+ "axisBorderShow": false,

+ "axisCenteredZero": false,

+ "axisColorMode": "text",

+ "axisLabel": "",

+ "axisPlacement": "auto",

+ "barAlignment": 0,

+ "drawStyle": "bars",

+ "fillOpacity": 50,

+ "gradientMode": "none",

+ "hideFrom": {

+ "legend": false,

+ "tooltip": false,

+ "viz": false

+ },

+ "insertNulls": false,

+ "lineInterpolation": "linear",

+ "lineWidth": 1,

+ "pointSize": 5,

+ "scaleDistribution": {

+ "type": "linear"

+ },

+ "showPoints": "never",

+ "spanNulls": false,

+ "stacking": {

+ "group": "A",

+ "mode": "normal"

+ },

+ "thresholdsStyle": {

+ "mode": "off"

+ }

+ },

+ "mappings": [],

+ "thresholds": {

+ "mode": "absolute",

+ "steps": [

+ {

+ "color": "green",

+ "value": null

+ },

+ {

+ "color": "red",

+ "value": 80

+ }

+ ]

+ }

+ },

+ "overrides": []

+ },

+ "gridPos": {

+ "h": 5,

+ "w": 6,

+ "x": 0,

+ "y": 30

+ },

+ "id": 29,

+ "maxDataPoints": 40,

+ "options": {

+ "legend": {

+ "calcs": [],

+ "displayMode": "list",

+ "placement": "bottom",

+ "showLegend": false

+ },

+ "tooltip": {

+ "mode": "single",

+ "sort": "none"

+ }

+ },

+ "pluginVersion": "9.1.0",

+ "targets": [

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "avg(tgi_batch_current_max_tokens{container=\"$service\"})",

+ "legendFormat": "__auto",

+ "range": true,

+ "refId": "A"

+ }

+ ],

+ "title": "Max tokens per batch",

+ "type": "timeseries"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "fieldConfig": {

+ "defaults": {

+ "color": {

+ "mode": "palette-classic"

+ },

+ "custom": {

+ "axisBorderShow": false,

+ "axisCenteredZero": false,

+ "axisColorMode": "text",

+ "axisLabel": "",

+ "axisPlacement": "auto",

+ "barAlignment": 0,

+ "drawStyle": "line",

+ "fillOpacity": 0,

+ "gradientMode": "none",

+ "hideFrom": {

+ "legend": false,

+ "tooltip": false,

+ "viz": false

+ },

+ "insertNulls": false,

+ "lineInterpolation": "linear",

+ "lineWidth": 1,

+ "pointSize": 5,

+ "scaleDistribution": {

+ "type": "linear"

+ },

+ "showPoints": "never",

+ "spanNulls": false,

+ "stacking": {

+ "group": "A",

+ "mode": "none"

+ },

+ "thresholdsStyle": {

+ "mode": "off"

+ }

+ },

+ "mappings": [],

+ "thresholds": {

+ "mode": "absolute",

+ "steps": [

+ {

+ "color": "green",

+ "value": null

+ },

+ {

+ "color": "red",

+ "value": 80

+ }

+ ]

+ },

+ "unit": "none"

+ },

+ "overrides": [

+ {

+ "matcher": {

+ "id": "byName",

+ "options": "p50"

+ },

+ "properties": [

+ {

+ "id": "color",

+ "value": {

+ "fixedColor": "green",

+ "mode": "fixed"

+ }

+ }

+ ]

+ },

+ {

+ "matcher": {

+ "id": "byName",

+ "options": "p90"

+ },

+ "properties": [

+ {

+ "id": "color",

+ "value": {

+ "fixedColor": "orange",

+ "mode": "fixed"

+ }

+ }

+ ]

+ },

+ {

+ "matcher": {

+ "id": "byName",

+ "options": "p99"

+ },

+ "properties": [

+ {

+ "id": "color",

+ "value": {

+ "fixedColor": "red",

+ "mode": "fixed"

+ }

+ }

+ ]

+ }

+ ]

+ },

+ "gridPos": {

+ "h": 9,

+ "w": 4,

+ "x": 6,

+ "y": 30

+ },

+ "id": 33,

+ "options": {

+ "legend": {

+ "calcs": [

+ "min",

+ "max"

+ ],

+ "displayMode": "list",

+ "placement": "bottom",

+ "showLegend": true

+ },

+ "tooltip": {

+ "mode": "single",

+ "sort": "none"

+ }

+ },

+ "targets": [

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "histogram_quantile(0.5, sum by (le) (rate(tgi_request_skipped_tokens_bucket{container=\"$service\"}[10m])))",

+ "legendFormat": "p50",

+ "range": true,

+ "refId": "A"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "histogram_quantile(0.9, sum by (le) (rate(tgi_request_skipped_tokens_bucket{container=\"$service\"}[10m])))",

+ "hide": false,

+ "legendFormat": "p90",

+ "range": true,

+ "refId": "B"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "histogram_quantile(0.99, sum by (le) (rate(tgi_request_skipped_tokens_bucket{container=\"$service\"}[10m])))",

+ "hide": false,

+ "legendFormat": "p99",

+ "range": true,

+ "refId": "C"

+ }

+ ],

+ "title": "Speculated Tokens",

+ "type": "timeseries"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "fieldConfig": {

+ "defaults": {

+ "color": {

+ "mode": "palette-classic"

+ },

+ "custom": {

+ "axisBorderShow": false,

+ "axisCenteredZero": false,

+ "axisColorMode": "text",

+ "axisLabel": "",

+ "axisPlacement": "auto",

+ "barAlignment": 0,

+ "drawStyle": "line",

+ "fillOpacity": 0,

+ "gradientMode": "none",

+ "hideFrom": {

+ "legend": false,

+ "tooltip": false,

+ "viz": false

+ },

+ "insertNulls": false,

+ "lineInterpolation": "linear",

+ "lineWidth": 1,

+ "pointSize": 5,

+ "scaleDistribution": {

+ "type": "linear"

+ },

+ "showPoints": "never",

+ "spanNulls": false,

+ "stacking": {

+ "group": "A",

+ "mode": "none"

+ },

+ "thresholdsStyle": {

+ "mode": "off"

+ }

+ },

+ "mappings": [],

+ "thresholds": {

+ "mode": "absolute",

+ "steps": [

+ {

+ "color": "green",

+ "value": null

+ },

+ {

+ "color": "red",

+ "value": 80

+ }

+ ]

+ },

+ "unit": "none"

+ },

+ "overrides": [

+ {

+ "matcher": {

+ "id": "byName",

+ "options": "p50"

+ },

+ "properties": [

+ {

+ "id": "color",

+ "value": {

+ "fixedColor": "green",

+ "mode": "fixed"

+ }

+ }

+ ]

+ },

+ {

+ "matcher": {

+ "id": "byName",

+ "options": "p90"

+ },

+ "properties": [

+ {

+ "id": "color",

+ "value": {

+ "fixedColor": "orange",

+ "mode": "fixed"

+ }

+ }

+ ]

+ },

+ {

+ "matcher": {

+ "id": "byName",

+ "options": "p99"

+ },

+ "properties": [

+ {

+ "id": "color",

+ "value": {

+ "fixedColor": "red",

+ "mode": "fixed"

+ }

+ }

+ ]

+ }

+ ]

+ },

+ "gridPos": {

+ "h": 9,

+ "w": 5,

+ "x": 10,

+ "y": 30

+ },

+ "id": 46,

+ "options": {

+ "legend": {

+ "calcs": [

+ "min",

+ "max"

+ ],

+ "displayMode": "list",

+ "placement": "bottom",

+ "showLegend": true

+ },

+ "tooltip": {

+ "mode": "single",

+ "sort": "none"

+ }

+ },

+ "targets": [

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "histogram_quantile(0.5, sum by (le) (rate(tgi_request_input_length_bucket{container=\"$service\"}[10m])))",

+ "legendFormat": "p50",

+ "range": true,

+ "refId": "A"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "histogram_quantile(0.9, sum by (le) (rate(tgi_request_input_length_bucket{container=\"$service\"}[10m])))",

+ "hide": false,

+ "legendFormat": "p90",

+ "range": true,

+ "refId": "B"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "histogram_quantile(0.99, sum by (le) (rate(tgi_request_input_length_bucket{container=\"$service\"}[10m])))",

+ "hide": false,

+ "legendFormat": "p99",

+ "range": true,

+ "refId": "C"

+ }

+ ],

+ "title": "Prompt Tokens",

+ "type": "timeseries"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "fieldConfig": {

+ "defaults": {

+ "color": {

+ "mode": "palette-classic"

+ },

+ "custom": {

+ "axisBorderShow": false,

+ "axisCenteredZero": false,

+ "axisColorMode": "text",

+ "axisLabel": "",

+ "axisPlacement": "auto",

+ "barAlignment": 0,

+ "drawStyle": "line",

+ "fillOpacity": 0,

+ "gradientMode": "none",

+ "hideFrom": {

+ "legend": false,

+ "tooltip": false,

+ "viz": false

+ },

+ "insertNulls": false,

+ "lineInterpolation": "linear",

+ "lineWidth": 1,

+ "pointSize": 5,

+ "scaleDistribution": {

+ "type": "linear"

+ },

+ "showPoints": "never",

+ "spanNulls": false,

+ "stacking": {

+ "group": "A",

+ "mode": "none"

+ },

+ "thresholdsStyle": {

+ "mode": "off"

+ }

+ },

+ "mappings": [],

+ "thresholds": {

+ "mode": "absolute",

+ "steps": [

+ {

+ "color": "green",

+ "value": null

+ },

+ {

+ "color": "red",

+ "value": 80

+ }

+ ]

+ },

+ "unit": "s"

+ },

+ "overrides": [

+ {

+ "matcher": {

+ "id": "byName",

+ "options": "p50"

+ },

+ "properties": [

+ {

+ "id": "color",

+ "value": {

+ "fixedColor": "green",

+ "mode": "fixed"

+ }

+ }

+ ]

+ },

+ {

+ "matcher": {

+ "id": "byName",

+ "options": "p90"

+ },

+ "properties": [

+ {

+ "id": "color",

+ "value": {

+ "fixedColor": "orange",

+ "mode": "fixed"

+ }

+ }

+ ]

+ },

+ {

+ "matcher": {

+ "id": "byName",

+ "options": "p99"

+ },

+ "properties": [

+ {

+ "id": "color",

+ "value": {

+ "fixedColor": "red",

+ "mode": "fixed"

+ }

+ }

+ ]

+ }

+ ]

+ },

+ "gridPos": {

+ "h": 9,

+ "w": 9,

+ "x": 15,

+ "y": 30

+ },

+ "id": 8,

+ "options": {

+ "legend": {

+ "calcs": [

+ "min",

+ "max"

+ ],

+ "displayMode": "list",

+ "placement": "bottom",

+ "showLegend": true

+ },

+ "tooltip": {

+ "mode": "single",

+ "sort": "none"

+ }

+ },

+ "targets": [

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "histogram_quantile(0.5, sum by (le) (rate(tgi_request_duration_bucket{container=\"$service\"}[10m])))",

+ "legendFormat": "p50",

+ "range": true,

+ "refId": "A"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "histogram_quantile(0.9, sum by (le) (rate(tgi_request_duration_bucket{container=\"$service\"}[10m])))",

+ "hide": false,

+ "legendFormat": "p90",

+ "range": true,

+ "refId": "B"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "histogram_quantile(0.99, sum by (le) (rate(tgi_request_duration_bucket{container=\"$service\"}[10m])))",

+ "hide": false,

+ "legendFormat": "p99",

+ "range": true,

+ "refId": "C"

+ }

+ ],

+ "title": "Latency quantiles",

+ "type": "timeseries"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "fieldConfig": {

+ "defaults": {

+ "color": {

+ "mode": "palette-classic"

+ },

+ "custom": {

+ "axisBorderShow": false,

+ "axisCenteredZero": false,

+ "axisColorMode": "text",

+ "axisLabel": "",

+ "axisPlacement": "auto",

+ "barAlignment": 0,

+ "drawStyle": "bars",

+ "fillOpacity": 50,

+ "gradientMode": "none",

+ "hideFrom": {

+ "legend": false,

+ "tooltip": false,

+ "viz": false

+ },

+ "insertNulls": false,

+ "lineInterpolation": "linear",

+ "lineWidth": 1,

+ "pointSize": 5,

+ "scaleDistribution": {

+ "type": "linear"

+ },

+ "showPoints": "never",

+ "spanNulls": false,

+ "stacking": {

+ "group": "A",

+ "mode": "normal"

+ },

+ "thresholdsStyle": {

+ "mode": "off"

+ }

+ },

+ "mappings": [],

+ "thresholds": {

+ "mode": "absolute",

+ "steps": [

+ {

+ "color": "green",

+ "value": null

+ },

+ {

+ "color": "red",

+ "value": 80

+ }

+ ]

+ }

+ },

+ "overrides": []

+ },

+ "gridPos": {

+ "h": 4,

+ "w": 6,

+ "x": 0,

+ "y": 35

+ },

+ "id": 27,

+ "maxDataPoints": 40,

+ "options": {

+ "legend": {

+ "calcs": [],

+ "displayMode": "list",

+ "placement": "bottom",

+ "showLegend": false

+ },

+ "tooltip": {

+ "mode": "single",

+ "sort": "none"

+ }

+ },

+ "pluginVersion": "9.1.0",

+ "targets": [

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "avg(tgi_batch_current_size{container=\"$service\"})",

+ "legendFormat": "__auto",

+ "range": true,

+ "refId": "A"

+ }

+ ],

+ "title": "Batch Size",

+ "type": "timeseries"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "fieldConfig": {

+ "defaults": {

+ "color": {

+ "mode": "palette-classic"

+ },

+ "custom": {

+ "axisBorderShow": false,

+ "axisCenteredZero": false,

+ "axisColorMode": "text",

+ "axisLabel": "",

+ "axisPlacement": "auto",

+ "barAlignment": 0,

+ "drawStyle": "line",

+ "fillOpacity": 30,

+ "gradientMode": "none",

+ "hideFrom": {

+ "legend": false,

+ "tooltip": false,

+ "viz": false

+ },

+ "insertNulls": false,

+ "lineInterpolation": "linear",

+ "lineWidth": 1,

+ "pointSize": 5,

+ "scaleDistribution": {

+ "type": "linear"

+ },

+ "showPoints": "never",

+ "spanNulls": false,

+ "stacking": {

+ "group": "A",

+ "mode": "none"

+ },

+ "thresholdsStyle": {

+ "mode": "off"

+ }

+ },

+ "mappings": [],

+ "thresholds": {

+ "mode": "absolute",

+ "steps": [

+ {

+ "color": "green",

+ "value": null

+ },

+ {

+ "color": "red",

+ "value": 80

+ }

+ ]

+ }

+ },

+ "overrides": []

+ },

+ "gridPos": {

+ "h": 9,

+ "w": 6,

+ "x": 0,

+ "y": 39

+ },

+ "id": 28,

+ "maxDataPoints": 100,

+ "options": {

+ "legend": {

+ "calcs": [

+ "min",

+ "max"

+ ],

+ "displayMode": "list",

+ "placement": "bottom",

+ "showLegend": true

+ },

+ "tooltip": {

+ "mode": "single",

+ "sort": "none"

+ }

+ },

+ "targets": [

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "sum(increase(tgi_batch_concat{container=\"$service\"}[1m])) by (reason)",

+ "hide": false,

+ "legendFormat": "Reason: {{ reason }}",

+ "range": true,

+ "refId": "B"

+ }

+ ],

+ "title": "Concatenations",

+ "type": "timeseries"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "fieldConfig": {

+ "defaults": {

+ "color": {

+ "mode": "palette-classic"

+ },

+ "custom": {

+ "axisBorderShow": false,

+ "axisCenteredZero": false,

+ "axisColorMode": "text",

+ "axisLabel": "",

+ "axisPlacement": "auto",

+ "barAlignment": 0,

+ "drawStyle": "line",

+ "fillOpacity": 0,

+ "gradientMode": "none",

+ "hideFrom": {

+ "legend": false,

+ "tooltip": false,

+ "viz": false

+ },

+ "insertNulls": false,

+ "lineInterpolation": "linear",

+ "lineWidth": 1,

+ "pointSize": 5,

+ "scaleDistribution": {

+ "type": "linear"

+ },

+ "showPoints": "never",

+ "spanNulls": false,

+ "stacking": {

+ "group": "A",

+ "mode": "none"

+ },

+ "thresholdsStyle": {

+ "mode": "off"

+ }

+ },

+ "mappings": [],

+ "thresholds": {

+ "mode": "absolute",

+ "steps": [

+ {

+ "color": "green",

+ "value": null

+ },

+ {

+ "color": "red",

+ "value": 80

+ }

+ ]

+ },

+ "unit": "s"

+ },

+ "overrides": [

+ {

+ "matcher": {

+ "id": "byName",

+ "options": "p50"

+ },

+ "properties": [

+ {

+ "id": "color",

+ "value": {

+ "fixedColor": "green",

+ "mode": "fixed"

+ }

+ }

+ ]

+ },

+ {

+ "matcher": {

+ "id": "byName",

+ "options": "p90"

+ },

+ "properties": [

+ {

+ "id": "color",

+ "value": {

+ "fixedColor": "orange",

+ "mode": "fixed"

+ }

+ }

+ ]

+ },

+ {

+ "matcher": {

+ "id": "byName",

+ "options": "p99"

+ },

+ "properties": [

+ {

+ "id": "color",

+ "value": {

+ "fixedColor": "red",

+ "mode": "fixed"

+ }

+ }

+ ]

+ }

+ ]

+ },

+ "gridPos": {

+ "h": 9,

+ "w": 9,

+ "x": 6,

+ "y": 39

+ },

+ "id": 31,

+ "options": {

+ "legend": {

+ "calcs": [

+ "min",

+ "max"

+ ],

+ "displayMode": "list",

+ "placement": "bottom",

+ "showLegend": true

+ },

+ "tooltip": {

+ "mode": "single",

+ "sort": "none"

+ }

+ },

+ "targets": [

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "histogram_quantile(0.5, sum by (le) (rate(tgi_request_queue_duration_bucket{container=\"$service\"}[10m])))",

+ "legendFormat": "p50",

+ "range": true,

+ "refId": "A"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "histogram_quantile(0.9, sum by (le) (rate(tgi_request_queue_duration_bucket{container=\"$service\"}[10m])))",

+ "hide": false,

+ "legendFormat": "p90",

+ "range": true,

+ "refId": "B"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "histogram_quantile(0.99, sum by (le) (rate(tgi_request_queue_duration_bucket{container=\"$service\"}[10m])))",

+ "hide": false,

+ "legendFormat": "p99",

+ "range": true,

+ "refId": "C"

+ }

+ ],

+ "title": "Queue quantiles",

+ "type": "timeseries"

+ },

+ {

+ "collapsed": false,

+ "gridPos": {

+ "h": 1,

+ "w": 24,

+ "x": 0,

+ "y": 48

+ },

+ "id": 22,

+ "panels": [],

+ "title": "Prefill",

+ "type": "row"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "fieldConfig": {

+ "defaults": {

+ "color": {

+ "mode": "palette-classic"

+ },

+ "custom": {

+ "axisBorderShow": false,

+ "axisCenteredZero": false,

+ "axisColorMode": "text",

+ "axisLabel": "",

+ "axisPlacement": "auto",

+ "barAlignment": 0,

+ "drawStyle": "line",

+ "fillOpacity": 0,

+ "gradientMode": "none",

+ "hideFrom": {

+ "legend": false,

+ "tooltip": false,

+ "viz": false

+ },

+ "insertNulls": false,

+ "lineInterpolation": "linear",

+ "lineWidth": 1,

+ "pointSize": 5,

+ "scaleDistribution": {

+ "type": "linear"

+ },

+ "showPoints": "never",

+ "spanNulls": false,

+ "stacking": {

+ "group": "A",

+ "mode": "none"

+ },

+ "thresholdsStyle": {

+ "mode": "off"

+ }

+ },

+ "mappings": [],

+ "thresholds": {

+ "mode": "absolute",

+ "steps": [

+ {

+ "color": "green",

+ "value": null

+ },

+ {

+ "color": "red",

+ "value": 80

+ }

+ ]

+ },

+ "unit": "s"

+ },

+ "overrides": [

+ {

+ "matcher": {

+ "id": "byName",

+ "options": "p50"

+ },

+ "properties": [

+ {

+ "id": "color",

+ "value": {

+ "fixedColor": "green",

+ "mode": "fixed"

+ }

+ }

+ ]

+ },

+ {

+ "matcher": {

+ "id": "byName",

+ "options": "p90"

+ },

+ "properties": [

+ {

+ "id": "color",

+ "value": {

+ "fixedColor": "orange",

+ "mode": "fixed"

+ }

+ }

+ ]

+ },

+ {

+ "matcher": {

+ "id": "byName",

+ "options": "p99"

+ },

+ "properties": [

+ {

+ "id": "color",

+ "value": {

+ "fixedColor": "red",

+ "mode": "fixed"

+ }

+ }

+ ]

+ }

+ ]

+ },

+ "gridPos": {

+ "h": 11,

+ "w": 12,

+ "x": 0,

+ "y": 49

+ },

+ "id": 7,

+ "options": {

+ "legend": {

+ "calcs": [

+ "min",

+ "max"

+ ],

+ "displayMode": "list",

+ "placement": "bottom",

+ "showLegend": true

+ },

+ "tooltip": {

+ "mode": "single",

+ "sort": "none"

+ }

+ },

+ "targets": [

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "histogram_quantile(0.5, sum by (le) (rate(tgi_batch_inference_duration_bucket{method=\"prefill\", container=\"$service\"}[10m])))",

+ "legendFormat": "p50",

+ "range": true,

+ "refId": "A"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "histogram_quantile(0.9, sum by (le) (rate(tgi_batch_inference_duration_bucket{method=\"prefill\", container=\"$service\"}[10m])))",

+ "hide": false,

+ "legendFormat": "p90",

+ "range": true,

+ "refId": "B"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "histogram_quantile(0.99, sum by (le) (rate(tgi_batch_inference_duration_bucket{method=\"prefill\", container=\"$service\"}[10m])))",

+ "hide": false,

+ "legendFormat": "p99",

+ "range": true,

+ "refId": "C"

+ }

+ ],

+ "title": "Prefill Quantiles",

+ "type": "timeseries"

+ },

+ {

+ "cards": {},

+ "color": {

+ "cardColor": "#5794F2",

+ "colorScale": "linear",

+ "colorScheme": "interpolateSpectral",

+ "exponent": 0.5,

+ "min": 0,

+ "mode": "opacity"

+ },

+ "dataFormat": "tsbuckets",

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "fieldConfig": {

+ "defaults": {

+ "custom": {

+ "hideFrom": {

+ "legend": false,

+ "tooltip": false,

+ "viz": false

+ },

+ "scaleDistribution": {

+ "type": "linear"

+ }

+ }

+ },

+ "overrides": []

+ },

+ "gridPos": {

+ "h": 11,

+ "w": 12,

+ "x": 12,

+ "y": 49

+ },

+ "heatmap": {},

+ "hideZeroBuckets": false,

+ "highlightCards": true,

+ "id": 14,

+ "legend": {

+ "show": false

+ },

+ "maxDataPoints": 25,

+ "options": {

+ "calculate": false,

+ "calculation": {},

+ "cellGap": 2,

+ "cellValues": {},

+ "color": {

+ "exponent": 0.5,

+ "fill": "#5794F2",

+ "min": 0,

+ "mode": "scheme",

+ "reverse": false,

+ "scale": "exponential",

+ "scheme": "Spectral",

+ "steps": 128

+ },

+ "exemplars": {

+ "color": "rgba(255,0,255,0.7)"

+ },

+ "filterValues": {

+ "le": 1e-9

+ },

+ "legend": {

+ "show": false

+ },

+ "rowsFrame": {

+ "layout": "auto"

+ },

+ "showValue": "never",

+ "tooltip": {

+ "mode": "single",

+ "showColorScale": false,

+ "yHistogram": false

+ },

+ "yAxis": {

+ "axisPlacement": "left",

+ "decimals": 1,

+ "reverse": false,

+ "unit": "s"

+ }

+ },

+ "pluginVersion": "10.4.2",

+ "reverseYBuckets": false,

+ "targets": [

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "exemplar": true,

+ "expr": "sum(increase(tgi_batch_inference_duration_bucket{method=\"prefill\", container=\"$service\"}[5m])) by (le)",

+ "format": "heatmap",

+ "interval": "",

+ "legendFormat": "{{ le }}",

+ "range": true,

+ "refId": "A"

+ }

+ ],

+ "title": "Prefill Latency",

+ "tooltip": {

+ "show": true,

+ "showHistogram": false

+ },

+ "type": "heatmap",

+ "xAxis": {

+ "show": true

+ },

+ "yAxis": {

+ "decimals": 1,

+ "format": "s",

+ "logBase": 1,

+ "show": true

+ },

+ "yBucketBound": "auto"

+ },

+ {

+ "collapsed": false,

+ "gridPos": {

+ "h": 1,

+ "w": 24,

+ "x": 0,

+ "y": 60

+ },

+ "id": 24,

+ "panels": [],

+ "title": "Decode",

+ "type": "row"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "fieldConfig": {

+ "defaults": {

+ "color": {

+ "mode": "palette-classic"

+ },

+ "custom": {

+ "axisBorderShow": false,

+ "axisCenteredZero": false,

+ "axisColorMode": "text",

+ "axisLabel": "",

+ "axisPlacement": "auto",

+ "barAlignment": 0,

+ "drawStyle": "line",

+ "fillOpacity": 0,

+ "gradientMode": "none",

+ "hideFrom": {

+ "legend": false,

+ "tooltip": false,

+ "viz": false

+ },

+ "insertNulls": false,

+ "lineInterpolation": "linear",

+ "lineWidth": 1,

+ "pointSize": 5,

+ "scaleDistribution": {

+ "type": "linear"

+ },

+ "showPoints": "never",

+ "spanNulls": false,

+ "stacking": {

+ "group": "A",

+ "mode": "none"

+ },

+ "thresholdsStyle": {

+ "mode": "off"

+ }

+ },

+ "mappings": [],

+ "thresholds": {

+ "mode": "absolute",

+ "steps": [

+ {

+ "color": "green",

+ "value": null

+ },

+ {

+ "color": "red",

+ "value": 80

+ }

+ ]

+ },

+ "unit": "s"

+ },

+ "overrides": [

+ {

+ "matcher": {

+ "id": "byName",

+ "options": "p50"

+ },

+ "properties": [

+ {

+ "id": "color",

+ "value": {

+ "fixedColor": "green",

+ "mode": "fixed"

+ }

+ }

+ ]

+ },

+ {

+ "matcher": {

+ "id": "byName",

+ "options": "p90"

+ },

+ "properties": [

+ {

+ "id": "color",

+ "value": {

+ "fixedColor": "orange",

+ "mode": "fixed"

+ }

+ }

+ ]

+ },

+ {

+ "matcher": {

+ "id": "byName",

+ "options": "p99"

+ },

+ "properties": [

+ {

+ "id": "color",

+ "value": {

+ "fixedColor": "red",

+ "mode": "fixed"

+ }

+ }

+ ]

+ }

+ ]

+ },

+ "gridPos": {

+ "h": 11,

+ "w": 12,

+ "x": 0,

+ "y": 61

+ },

+ "id": 11,

+ "options": {

+ "legend": {

+ "calcs": [

+ "min",

+ "max"

+ ],

+ "displayMode": "list",

+ "placement": "bottom",

+ "showLegend": true

+ },

+ "tooltip": {

+ "mode": "single",

+ "sort": "none"

+ }

+ },

+ "targets": [

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "histogram_quantile(0.5, sum by (le) (rate(tgi_batch_inference_duration_bucket{method=\"decode\", container=\"$service\"}[10m])))",

+ "legendFormat": "p50",

+ "range": true,

+ "refId": "A"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "histogram_quantile(0.9, sum by (le) (rate(tgi_batch_inference_duration_bucket{method=\"decode\", container=\"$service\"}[10m])))",

+ "hide": false,

+ "legendFormat": "p90",

+ "range": true,

+ "refId": "B"

+ },

+ {

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "editorMode": "code",

+ "expr": "histogram_quantile(0.99, sum by (le) (rate(tgi_batch_inference_duration_bucket{method=\"decode\", container=\"$service\"}[10m])))",

+ "hide": false,

+ "legendFormat": "p99",

+ "range": true,

+ "refId": "C"

+ }

+ ],

+ "title": "Decode quantiles",

+ "type": "timeseries"

+ },

+ {

+ "cards": {},

+ "color": {

+ "cardColor": "#5794F2",

+ "colorScale": "linear",

+ "colorScheme": "interpolateSpectral",

+ "exponent": 0.5,

+ "min": 0,

+ "mode": "opacity"

+ },

+ "dataFormat": "tsbuckets",

+ "datasource": {

+ "type": "prometheus",

+ "uid": "${DS_PROMETHEUS_EKS API INFERENCE PROD}"

+ },

+ "fieldConfig": {

+ "defaults": {

+ "custom": {

+ "hideFrom": {

+ "legend": false,

+ "tooltip": false,

+ "viz": false

+ },

+ "scaleDistribution": {

+ "type": "linear"

+ }

+ }

+ },

+ "overrides": []

+ },

+ "gridPos": {

+ "h": 11,

+ "w": 12,

+ "x": 12,

+ "y": 61

+ },

+ "heatmap": {},

+ "hideZeroBuckets": false,

+ "highlightCards": true,

+ "id": 15,

+ "legend": {

+ "show": false

+ },

+ "maxDataPoints": 25,

+ "options": {

+ "calculate": false,

+ "calculation": {},

+ "cellGap": 2,

+ "cellValues": {},

+ "color": {

+ "exponent": 0.5,

+ "fill": "#5794F2",

+ "min": 0,

+ "mode": "scheme",

+ "reverse": false,

+ "scale": "exponential",

+ "scheme": "Spectral",

+ "steps": 128

+ },

+ "exemplars": {

+ "color": "rgba(255,0,255,0.7)"

+ },

+ "filterValues": {

+ "le": 1e-9

+ },

+ "legend": {

+ "show": false

+ },

+ "rowsFrame": {

+ "layout": "auto"

+ },

+ "showValue": "never",

+ "tooltip": {

+ "mode": "single",

+ "showColorScale": false,

+ "yHistogram": false

+ },

+ "yAxis": {