diff --git a/docs/source/basic_tutorials/consuming_tgi.md b/docs/source/basic_tutorials/consuming_tgi.md

index fda01e2a..60b0d203 100644

--- a/docs/source/basic_tutorials/consuming_tgi.md

+++ b/docs/source/basic_tutorials/consuming_tgi.md

@@ -101,7 +101,7 @@ gr.ChatInterface(

inference,

chatbot=gr.Chatbot(height=300),

textbox=gr.Textbox(placeholder="Chat with me!", container=False, scale=7),

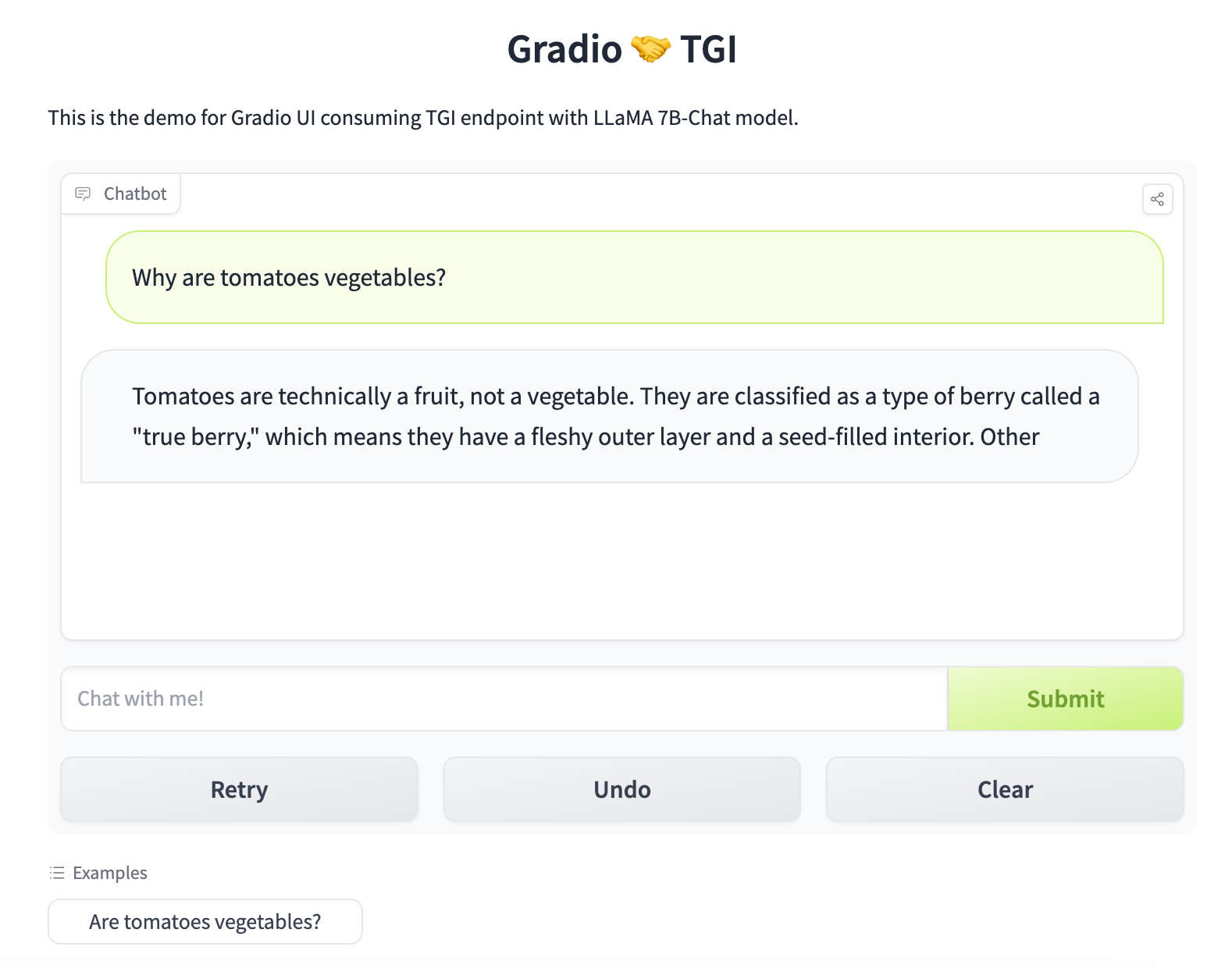

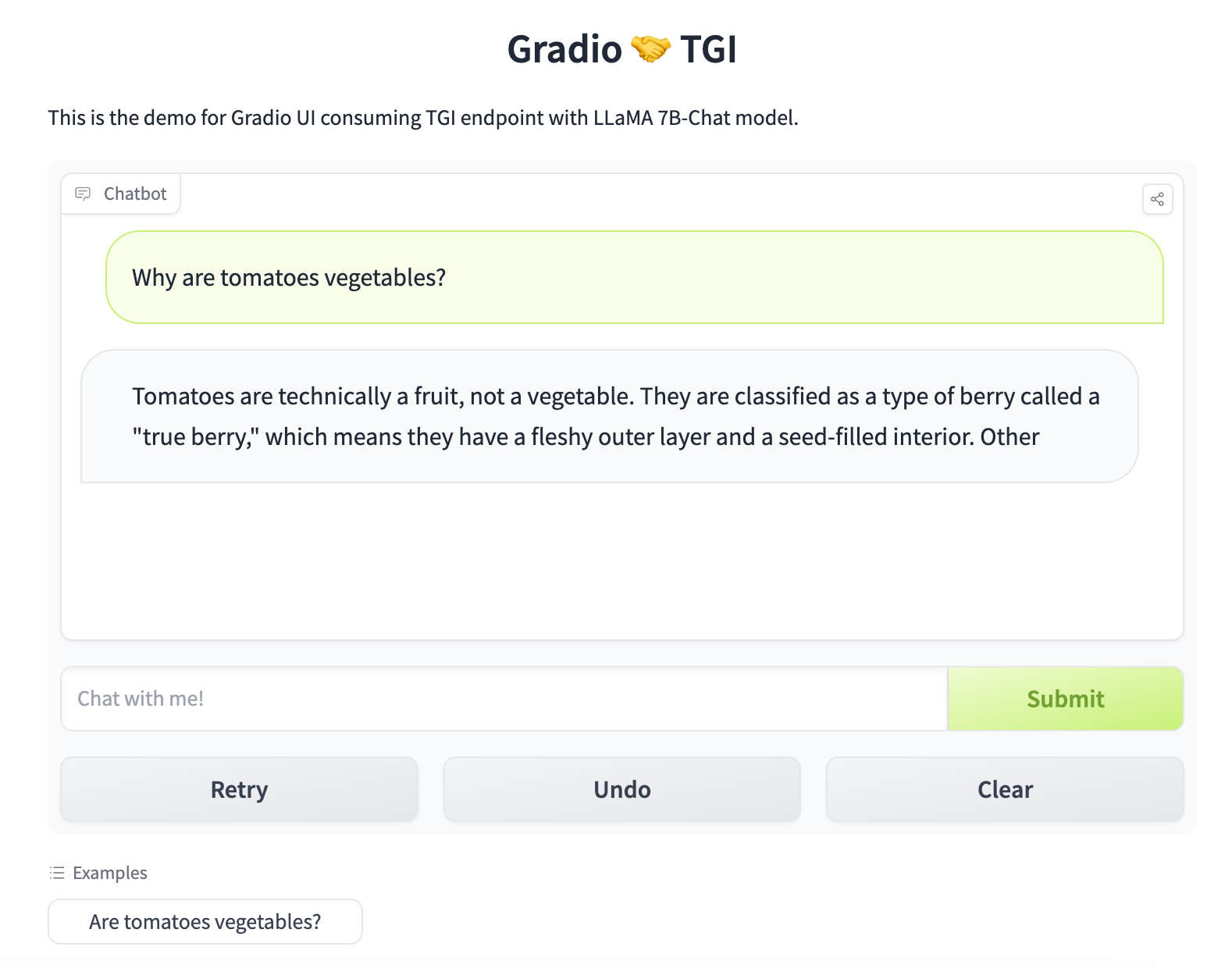

- description="This is the demo for Gradio UI consuming TGI endpoint with Falcon model.",

+ description="This is the demo for Gradio UI consuming TGI endpoint with LLaMA 2 7B-Chat model.",

title="Gradio 🤝 TGI",

examples=["Are tomatoes vegetables?"],

retry_btn=None,

@@ -119,10 +119,15 @@ The UI looks like this 👇

/>

+You can try the demo directly here 👇

+

+

+

+

You can disable streaming mode using `return` instead of `yield` in your inference function.

You can read more about how to customize a `ChatInterface` [here](https://www.gradio.app/guides/creating-a-chatbot-fast).
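To make the `return` versus `yield` distinction concrete, here is a minimal sketch of both variants of the inference function. It assumes the client exposes a `text_generation(prompt, max_new_tokens=..., stream=...)` method like `huggingface_hub.InferenceClient`. `FakeClient` and its canned tokens are hypothetical stand-ins so the snippet runs without a TGI server, and the function names `inference_streaming` and `inference_blocking` are illustrative only.

```python
class FakeClient:
    """Hypothetical stand-in for huggingface_hub.InferenceClient (no network)."""

    def text_generation(self, prompt, max_new_tokens=20, stream=False):
        # Canned tokens for illustration; a real TGI endpoint generates these.
        tokens = ["Yes", ",", " botanically", "."][:max_new_tokens]
        if stream:
            return iter(tokens)  # streaming: one token at a time
        return "".join(tokens)   # non-streaming: the full text at once


# Assumption: in a real app this would be InferenceClient(model=<your TGI URL>).
client = FakeClient()


def inference_streaming(message, history):
    # `yield` makes this a generator, so the UI can update as tokens arrive.
    partial_message = ""
    for token in client.text_generation(message, max_new_tokens=20, stream=True):
        partial_message += token
        yield partial_message


def inference_blocking(message, history):
    # `return` hands back the complete response in one piece (no streaming).
    return client.text_generation(message, max_new_tokens=20)
```

Either function can be passed as the first argument to `gr.ChatInterface`; the generator version produces incremental updates, while the `return` version shows the reply only once generation finishes.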
