Mirror of https://github.com/huggingface/text-generation-inference.git, synced 2025-09-10 20:04:52 +00:00

Merge branch 'main' into safetensors_docs

commit 0ef535e77e

@ -8,7 +8,7 @@ members = [

|

|||||||

]

|

]

|

||||||

|

|

||||||

[workspace.package]

|

[workspace.package]

|

||||||

version = "1.0.1"

|

version = "1.0.3"

|

||||||

edition = "2021"

|

edition = "2021"

|

||||||

authors = ["Olivier Dehaene"]

|

authors = ["Olivier Dehaene"]

|

||||||

homepage = "https://github.com/huggingface/text-generation-inference"

|

homepage = "https://github.com/huggingface/text-generation-inference"

|

||||||

|

|||||||

@ -67,6 +67,7 @@ to power Hugging Chat, the Inference API and Inference Endpoint.

|

|||||||

- [Falcon 40B](https://huggingface.co/tiiuae/falcon-40b)

|

- [Falcon 40B](https://huggingface.co/tiiuae/falcon-40b)

|

||||||

- [MPT](https://huggingface.co/mosaicml/mpt-30b)

|

- [MPT](https://huggingface.co/mosaicml/mpt-30b)

|

||||||

- [Llama V2](https://huggingface.co/meta-llama)

|

- [Llama V2](https://huggingface.co/meta-llama)

|

||||||

|

- [Code Llama](https://huggingface.co/codellama)

|

||||||

|

|

||||||

Other architectures are supported on a best effort basis using:

|

Other architectures are supported on a best effort basis using:

|

||||||

|

|

||||||

@ -86,7 +87,7 @@ The easiest way of getting started is using the official Docker container:

|

|||||||

model=tiiuae/falcon-7b-instruct

|

model=tiiuae/falcon-7b-instruct

|

||||||

volume=$PWD/data # share a volume with the Docker container to avoid downloading weights every run

|

volume=$PWD/data # share a volume with the Docker container to avoid downloading weights every run

|

||||||

|

|

||||||

docker run --gpus all --shm-size 1g -p 8080:80 -v $volume:/data ghcr.io/huggingface/text-generation-inference:1.0.1 --model-id $model

|

docker run --gpus all --shm-size 1g -p 8080:80 -v $volume:/data ghcr.io/huggingface/text-generation-inference:1.0.3 --model-id $model

|

||||||

```

|

```

|

||||||

**Note:** To use GPUs, you need to install the [NVIDIA Container Toolkit](https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/install-guide.html). We also recommend using NVIDIA drivers with CUDA version 11.8 or higher. For running the Docker container on a machine with no GPUs or CUDA support, it is enough to remove the `--gpus all` flag and add `--disable-custom-kernels`, please note CPU is not the intended platform for this project, so performance might be subpar.

|

**Note:** To use GPUs, you need to install the [NVIDIA Container Toolkit](https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/install-guide.html). We also recommend using NVIDIA drivers with CUDA version 11.8 or higher. For running the Docker container on a machine with no GPUs or CUDA support, it is enough to remove the `--gpus all` flag and add `--disable-custom-kernels`, please note CPU is not the intended platform for this project, so performance might be subpar.

|

||||||

|

|

||||||

@ -153,7 +154,7 @@ model=meta-llama/Llama-2-7b-chat-hf

|

|||||||

volume=$PWD/data # share a volume with the Docker container to avoid downloading weights every run

|

volume=$PWD/data # share a volume with the Docker container to avoid downloading weights every run

|

||||||

token=<your cli READ token>

|

token=<your cli READ token>

|

||||||

|

|

||||||

docker run --gpus all --shm-size 1g -e HUGGING_FACE_HUB_TOKEN=$token -p 8080:80 -v $volume:/data ghcr.io/huggingface/text-generation-inference:1.0.1 --model-id $model

|

docker run --gpus all --shm-size 1g -e HUGGING_FACE_HUB_TOKEN=$token -p 8080:80 -v $volume:/data ghcr.io/huggingface/text-generation-inference:1.0.3 --model-id $model

|

||||||

```

|

```

|

||||||

|

|

||||||

### A note on Shared Memory (shm)

|

### A note on Shared Memory (shm)

|

||||||

|

|||||||

@ -37,6 +37,7 @@ pub(crate) async fn generation_task(

|

|||||||

batch_size: Vec<u32>,

|

batch_size: Vec<u32>,

|

||||||

sequence_length: u32,

|

sequence_length: u32,

|

||||||

decode_length: u32,

|

decode_length: u32,

|

||||||

|

top_n_tokens: Option<u32>,

|

||||||

n_runs: usize,

|

n_runs: usize,

|

||||||

warmups: usize,

|

warmups: usize,

|

||||||

parameters: NextTokenChooserParameters,

|

parameters: NextTokenChooserParameters,

|

||||||

@ -48,7 +49,7 @@ pub(crate) async fn generation_task(

|

|||||||

// End task if a message is received on shutdown_receiver

|

// End task if a message is received on shutdown_receiver

|

||||||

// _shutdown_guard_sender will be dropped once the task is finished

|

// _shutdown_guard_sender will be dropped once the task is finished

|

||||||

tokio::select! {

|

tokio::select! {

|

||||||

res = generate_runs(tokenizer, batch_size, sequence_length, decode_length, n_runs, warmups, parameters, client, run_sender.clone()) => {

|

res = generate_runs(tokenizer, batch_size, sequence_length, decode_length, top_n_tokens, n_runs, warmups, parameters, client, run_sender.clone()) => {

|

||||||

if let Err(err) = res {

|

if let Err(err) = res {

|

||||||

run_sender.send(Err(err)).await.unwrap_or(());

|

run_sender.send(Err(err)).await.unwrap_or(());

|

||||||

}

|

}

|

||||||

@ -64,6 +65,7 @@ async fn generate_runs(

|

|||||||

batch_size: Vec<u32>,

|

batch_size: Vec<u32>,

|

||||||

sequence_length: u32,

|

sequence_length: u32,

|

||||||

decode_length: u32,

|

decode_length: u32,

|

||||||

|

top_n_tokens: Option<u32>,

|

||||||

n_runs: usize,

|

n_runs: usize,

|

||||||

warmups: usize,

|

warmups: usize,

|

||||||

parameters: NextTokenChooserParameters,

|

parameters: NextTokenChooserParameters,

|

||||||

@ -82,6 +84,7 @@ async fn generate_runs(

|

|||||||

b,

|

b,

|

||||||

decode_length,

|

decode_length,

|

||||||

parameters.clone(),

|

parameters.clone(),

|

||||||

|

top_n_tokens,

|

||||||

&mut client,

|

&mut client,

|

||||||

)

|

)

|

||||||

.await?;

|

.await?;

|

||||||

@ -97,6 +100,7 @@ async fn generate_runs(

|

|||||||

b,

|

b,

|

||||||

decode_length,

|

decode_length,

|

||||||

parameters.clone(),

|

parameters.clone(),

|

||||||

|

top_n_tokens,

|

||||||

&mut client,

|

&mut client,

|

||||||

)

|

)

|

||||||

.await?;

|

.await?;

|

||||||

@ -130,6 +134,7 @@ async fn prefill(

|

|||||||

batch_size: u32,

|

batch_size: u32,

|

||||||

decode_length: u32,

|

decode_length: u32,

|

||||||

parameters: NextTokenChooserParameters,

|

parameters: NextTokenChooserParameters,

|

||||||

|

top_n_tokens: Option<u32>,

|

||||||

client: &mut ShardedClient,

|

client: &mut ShardedClient,

|

||||||

) -> Result<(Prefill, CachedBatch), ClientError> {

|

) -> Result<(Prefill, CachedBatch), ClientError> {

|

||||||

// Create requests

|

// Create requests

|

||||||

@ -145,6 +150,7 @@ async fn prefill(

|

|||||||

stop_sequences: vec![],

|

stop_sequences: vec![],

|

||||||

ignore_eos_token: true, // Will not stop even if a eos token is generated

|

ignore_eos_token: true, // Will not stop even if a eos token is generated

|

||||||

}),

|

}),

|

||||||

|

top_n_tokens: top_n_tokens.unwrap_or(0),

|

||||||

})

|

})

|

||||||

.collect();

|

.collect();

|

||||||

|

|

||||||

|

|||||||

@ -22,6 +22,7 @@ pub async fn run(

|

|||||||

batch_size: Vec<u32>,

|

batch_size: Vec<u32>,

|

||||||

sequence_length: u32,

|

sequence_length: u32,

|

||||||

decode_length: u32,

|

decode_length: u32,

|

||||||

|

top_n_tokens: Option<u32>,

|

||||||

n_runs: usize,

|

n_runs: usize,

|

||||||

warmups: usize,

|

warmups: usize,

|

||||||

temperature: Option<f32>,

|

temperature: Option<f32>,

|

||||||

@ -70,6 +71,7 @@ pub async fn run(

|

|||||||

batch_size.clone(),

|

batch_size.clone(),

|

||||||

sequence_length,

|

sequence_length,

|

||||||

decode_length,

|

decode_length,

|

||||||

|

top_n_tokens,

|

||||||

n_runs,

|

n_runs,

|

||||||

warmups,

|

warmups,

|

||||||

parameters,

|

parameters,

|

||||||

@ -130,6 +132,7 @@ pub async fn run(

|

|||||||

tokenizer_name,

|

tokenizer_name,

|

||||||

sequence_length,

|

sequence_length,

|

||||||

decode_length,

|

decode_length,

|

||||||

|

top_n_tokens,

|

||||||

n_runs,

|

n_runs,

|

||||||

warmups,

|

warmups,

|

||||||

temperature,

|

temperature,

|

||||||

|

|||||||

@ -93,6 +93,11 @@ struct Args {

|

|||||||

/// decoding strategies, for full doc refer to the `text-generation-server`

|

/// decoding strategies, for full doc refer to the `text-generation-server`

|

||||||

#[clap(long, env)]

|

#[clap(long, env)]

|

||||||

do_sample: bool,

|

do_sample: bool,

|

||||||

|

|

||||||

|

/// Generation parameter in case you want to specifically test/debug particular

|

||||||

|

/// decoding strategies, for full doc refer to the `text-generation-server`

|

||||||

|

#[clap(long, env)]

|

||||||

|

top_n_tokens: Option<u32>,

|

||||||

}

|

}

|

||||||

|

|

||||||

fn main() -> Result<(), Box<dyn std::error::Error>> {

|

fn main() -> Result<(), Box<dyn std::error::Error>> {

|

||||||

@ -117,6 +122,7 @@ fn main() -> Result<(), Box<dyn std::error::Error>> {

|

|||||||

watermark,

|

watermark,

|

||||||

do_sample,

|

do_sample,

|

||||||

master_shard_uds_path,

|

master_shard_uds_path,

|

||||||

|

top_n_tokens,

|

||||||

} = args;

|

} = args;

|

||||||

|

|

||||||

let batch_size = batch_size.unwrap_or(vec![1, 2, 4, 8, 16, 32]);

|

let batch_size = batch_size.unwrap_or(vec![1, 2, 4, 8, 16, 32]);

|

||||||

@ -173,6 +179,7 @@ fn main() -> Result<(), Box<dyn std::error::Error>> {

|

|||||||

batch_size,

|

batch_size,

|

||||||

sequence_length,

|

sequence_length,

|

||||||

decode_length,

|

decode_length,

|

||||||

|

top_n_tokens,

|

||||||

runs,

|

runs,

|

||||||

warmups,

|

warmups,

|

||||||

temperature,

|

temperature,

|

||||||

|

|||||||

@ -7,6 +7,7 @@ pub(crate) fn parameters_table(

|

|||||||

tokenizer_name: String,

|

tokenizer_name: String,

|

||||||

sequence_length: u32,

|

sequence_length: u32,

|

||||||

decode_length: u32,

|

decode_length: u32,

|

||||||

|

top_n_tokens: Option<u32>,

|

||||||

n_runs: usize,

|

n_runs: usize,

|

||||||

warmups: usize,

|

warmups: usize,

|

||||||

temperature: Option<f32>,

|

temperature: Option<f32>,

|

||||||

@ -24,6 +25,7 @@ pub(crate) fn parameters_table(

|

|||||||

builder.push_record(["Model", &tokenizer_name]);

|

builder.push_record(["Model", &tokenizer_name]);

|

||||||

builder.push_record(["Sequence Length", &sequence_length.to_string()]);

|

builder.push_record(["Sequence Length", &sequence_length.to_string()]);

|

||||||

builder.push_record(["Decode Length", &decode_length.to_string()]);

|

builder.push_record(["Decode Length", &decode_length.to_string()]);

|

||||||

|

builder.push_record(["Top N Tokens", &format!("{top_n_tokens:?}")]);

|

||||||

builder.push_record(["N Runs", &n_runs.to_string()]);

|

builder.push_record(["N Runs", &n_runs.to_string()]);

|

||||||

builder.push_record(["Warmups", &warmups.to_string()]);

|

builder.push_record(["Warmups", &warmups.to_string()]);

|

||||||

builder.push_record(["Temperature", &format!("{temperature:?}")]);

|

builder.push_record(["Temperature", &format!("{temperature:?}")]);

|

||||||

|

|||||||

@ -12,7 +12,7 @@ repository = "https://github.com/huggingface/text-generation-inference"

|

|||||||

|

|

||||||

[tool.poetry.dependencies]

|

[tool.poetry.dependencies]

|

||||||

python = "^3.7"

|

python = "^3.7"

|

||||||

pydantic = "^1.10"

|

pydantic = "> 1.10, < 3"

|

||||||

aiohttp = "^3.8"

|

aiohttp = "^3.8"

|

||||||

huggingface-hub = ">= 0.12, < 1.0"

|

huggingface-hub = ">= 0.12, < 1.0"

|

||||||

|

|

||||||

|

|||||||

@ -12,7 +12,7 @@

|

|||||||

# See the License for the specific language governing permissions and

|

# See the License for the specific language governing permissions and

|

||||||

# limitations under the License.

|

# limitations under the License.

|

||||||

|

|

||||||

__version__ = "0.3.0"

|

__version__ = "0.6.0"

|

||||||

|

|

||||||

from text_generation.client import Client, AsyncClient

|

from text_generation.client import Client, AsyncClient

|

||||||

from text_generation.inference_api import InferenceAPIClient, InferenceAPIAsyncClient

|

from text_generation.inference_api import InferenceAPIClient, InferenceAPIAsyncClient

|

||||||

|

|||||||

@ -75,6 +75,7 @@ class Client:

|

|||||||

typical_p: Optional[float] = None,

|

typical_p: Optional[float] = None,

|

||||||

watermark: bool = False,

|

watermark: bool = False,

|

||||||

decoder_input_details: bool = False,

|

decoder_input_details: bool = False,

|

||||||

|

top_n_tokens: Optional[int] = None,

|

||||||

) -> Response:

|

) -> Response:

|

||||||

"""

|

"""

|

||||||

Given a prompt, generate the following text

|

Given a prompt, generate the following text

|

||||||

@ -113,6 +114,8 @@ class Client:

|

|||||||

Watermarking with [A Watermark for Large Language Models](https://arxiv.org/abs/2301.10226)

|

Watermarking with [A Watermark for Large Language Models](https://arxiv.org/abs/2301.10226)

|

||||||

decoder_input_details (`bool`):

|

decoder_input_details (`bool`):

|

||||||

Return the decoder input token logprobs and ids

|

Return the decoder input token logprobs and ids

|

||||||

|

top_n_tokens (`int`):

|

||||||

|

Return the `n` most likely tokens at each step

|

||||||

|

|

||||||

Returns:

|

Returns:

|

||||||

Response: generated response

|

Response: generated response

|

||||||

@ -134,6 +137,7 @@ class Client:

|

|||||||

typical_p=typical_p,

|

typical_p=typical_p,

|

||||||

watermark=watermark,

|

watermark=watermark,

|

||||||

decoder_input_details=decoder_input_details,

|

decoder_input_details=decoder_input_details,

|

||||||

|

top_n_tokens=top_n_tokens

|

||||||

)

|

)

|

||||||

request = Request(inputs=prompt, stream=False, parameters=parameters)

|

request = Request(inputs=prompt, stream=False, parameters=parameters)

|

||||||

|

|

||||||

@ -164,6 +168,7 @@ class Client:

|

|||||||

truncate: Optional[int] = None,

|

truncate: Optional[int] = None,

|

||||||

typical_p: Optional[float] = None,

|

typical_p: Optional[float] = None,

|

||||||

watermark: bool = False,

|

watermark: bool = False,

|

||||||

|

top_n_tokens: Optional[int] = None,

|

||||||

) -> Iterator[StreamResponse]:

|

) -> Iterator[StreamResponse]:

|

||||||

"""

|

"""

|

||||||

Given a prompt, generate the following stream of tokens

|

Given a prompt, generate the following stream of tokens

|

||||||

@ -198,6 +203,8 @@ class Client:

|

|||||||

See [Typical Decoding for Natural Language Generation](https://arxiv.org/abs/2202.00666) for more information

|

See [Typical Decoding for Natural Language Generation](https://arxiv.org/abs/2202.00666) for more information

|

||||||

watermark (`bool`):

|

watermark (`bool`):

|

||||||

Watermarking with [A Watermark for Large Language Models](https://arxiv.org/abs/2301.10226)

|

Watermarking with [A Watermark for Large Language Models](https://arxiv.org/abs/2301.10226)

|

||||||

|

top_n_tokens (`int`):

|

||||||

|

Return the `n` most likely tokens at each step

|

||||||

|

|

||||||

Returns:

|

Returns:

|

||||||

Iterator[StreamResponse]: stream of generated tokens

|

Iterator[StreamResponse]: stream of generated tokens

|

||||||

@ -219,6 +226,7 @@ class Client:

|

|||||||

truncate=truncate,

|

truncate=truncate,

|

||||||

typical_p=typical_p,

|

typical_p=typical_p,

|

||||||

watermark=watermark,

|

watermark=watermark,

|

||||||

|

top_n_tokens=top_n_tokens,

|

||||||

)

|

)

|

||||||

request = Request(inputs=prompt, stream=True, parameters=parameters)

|

request = Request(inputs=prompt, stream=True, parameters=parameters)

|

||||||

|

|

||||||

@ -317,6 +325,7 @@ class AsyncClient:

|

|||||||

typical_p: Optional[float] = None,

|

typical_p: Optional[float] = None,

|

||||||

watermark: bool = False,

|

watermark: bool = False,

|

||||||

decoder_input_details: bool = False,

|

decoder_input_details: bool = False,

|

||||||

|

top_n_tokens: Optional[int] = None,

|

||||||

) -> Response:

|

) -> Response:

|

||||||

"""

|

"""

|

||||||

Given a prompt, generate the following text asynchronously

|

Given a prompt, generate the following text asynchronously

|

||||||

@ -355,6 +364,8 @@ class AsyncClient:

|

|||||||

Watermarking with [A Watermark for Large Language Models](https://arxiv.org/abs/2301.10226)

|

Watermarking with [A Watermark for Large Language Models](https://arxiv.org/abs/2301.10226)

|

||||||

decoder_input_details (`bool`):

|

decoder_input_details (`bool`):

|

||||||

Return the decoder input token logprobs and ids

|

Return the decoder input token logprobs and ids

|

||||||

|

top_n_tokens (`int`):

|

||||||

|

Return the `n` most likely tokens at each step

|

||||||

|

|

||||||

Returns:

|

Returns:

|

||||||

Response: generated response

|

Response: generated response

|

||||||

@ -376,6 +387,7 @@ class AsyncClient:

|

|||||||

truncate=truncate,

|

truncate=truncate,

|

||||||

typical_p=typical_p,

|

typical_p=typical_p,

|

||||||

watermark=watermark,

|

watermark=watermark,

|

||||||

|

top_n_tokens=top_n_tokens,

|

||||||

)

|

)

|

||||||

request = Request(inputs=prompt, stream=False, parameters=parameters)

|

request = Request(inputs=prompt, stream=False, parameters=parameters)

|

||||||

|

|

||||||

@ -404,6 +416,7 @@ class AsyncClient:

|

|||||||

truncate: Optional[int] = None,

|

truncate: Optional[int] = None,

|

||||||

typical_p: Optional[float] = None,

|

typical_p: Optional[float] = None,

|

||||||

watermark: bool = False,

|

watermark: bool = False,

|

||||||

|

top_n_tokens: Optional[int] = None,

|

||||||

) -> AsyncIterator[StreamResponse]:

|

) -> AsyncIterator[StreamResponse]:

|

||||||

"""

|

"""

|

||||||

Given a prompt, generate the following stream of tokens asynchronously

|

Given a prompt, generate the following stream of tokens asynchronously

|

||||||

@ -438,6 +451,8 @@ class AsyncClient:

|

|||||||

See [Typical Decoding for Natural Language Generation](https://arxiv.org/abs/2202.00666) for more information

|

See [Typical Decoding for Natural Language Generation](https://arxiv.org/abs/2202.00666) for more information

|

||||||

watermark (`bool`):

|

watermark (`bool`):

|

||||||

Watermarking with [A Watermark for Large Language Models](https://arxiv.org/abs/2301.10226)

|

Watermarking with [A Watermark for Large Language Models](https://arxiv.org/abs/2301.10226)

|

||||||

|

top_n_tokens (`int`):

|

||||||

|

Return the `n` most likely tokens at each step

|

||||||

|

|

||||||

Returns:

|

Returns:

|

||||||

AsyncIterator[StreamResponse]: stream of generated tokens

|

AsyncIterator[StreamResponse]: stream of generated tokens

|

||||||

@ -459,6 +474,7 @@ class AsyncClient:

|

|||||||

truncate=truncate,

|

truncate=truncate,

|

||||||

typical_p=typical_p,

|

typical_p=typical_p,

|

||||||

watermark=watermark,

|

watermark=watermark,

|

||||||

|

top_n_tokens=top_n_tokens,

|

||||||

)

|

)

|

||||||

request = Request(inputs=prompt, stream=True, parameters=parameters)

|

request = Request(inputs=prompt, stream=True, parameters=parameters)

|

||||||

|

|

||||||

|

|||||||

@ -18,27 +18,29 @@ class Parameters(BaseModel):

|

|||||||

# Stop generating tokens if a member of `stop_sequences` is generated

|

# Stop generating tokens if a member of `stop_sequences` is generated

|

||||||

stop: List[str] = []

|

stop: List[str] = []

|

||||||

# Random sampling seed

|

# Random sampling seed

|

||||||

seed: Optional[int]

|

seed: Optional[int] = None

|

||||||

# The value used to modulate the logits distribution.

|

# The value used to modulate the logits distribution.

|

||||||

temperature: Optional[float]

|

temperature: Optional[float] = None

|

||||||

# The number of highest probability vocabulary tokens to keep for top-k-filtering.

|

# The number of highest probability vocabulary tokens to keep for top-k-filtering.

|

||||||

top_k: Optional[int]

|

top_k: Optional[int] = None

|

||||||

# If set to < 1, only the smallest set of most probable tokens with probabilities that add up to `top_p` or

|

# If set to < 1, only the smallest set of most probable tokens with probabilities that add up to `top_p` or

|

||||||

# higher are kept for generation.

|

# higher are kept for generation.

|

||||||

top_p: Optional[float]

|

top_p: Optional[float] = None

|

||||||

# truncate inputs tokens to the given size

|

# truncate inputs tokens to the given size

|

||||||

truncate: Optional[int]

|

truncate: Optional[int] = None

|

||||||

# Typical Decoding mass

|

# Typical Decoding mass

|

||||||

# See [Typical Decoding for Natural Language Generation](https://arxiv.org/abs/2202.00666) for more information

|

# See [Typical Decoding for Natural Language Generation](https://arxiv.org/abs/2202.00666) for more information

|

||||||

typical_p: Optional[float]

|

typical_p: Optional[float] = None

|

||||||

# Generate best_of sequences and return the one with the highest token logprobs

|

# Generate best_of sequences and return the one with the highest token logprobs

|

||||||

best_of: Optional[int]

|

best_of: Optional[int] = None

|

||||||

# Watermarking with [A Watermark for Large Language Models](https://arxiv.org/abs/2301.10226)

|

# Watermarking with [A Watermark for Large Language Models](https://arxiv.org/abs/2301.10226)

|

||||||

watermark: bool = False

|

watermark: bool = False

|

||||||

# Get generation details

|

# Get generation details

|

||||||

details: bool = False

|

details: bool = False

|

||||||

# Get decoder input token logprobs and ids

|

# Get decoder input token logprobs and ids

|

||||||

decoder_input_details: bool = False

|

decoder_input_details: bool = False

|

||||||

|

# Return the N most likely tokens at each step

|

||||||

|

top_n_tokens: Optional[int]

|

||||||

|

|

||||||

@validator("best_of")

|

@validator("best_of")

|

||||||

def valid_best_of(cls, field_value, values):

|

def valid_best_of(cls, field_value, values):

|

||||||

@ -101,12 +103,18 @@ class Parameters(BaseModel):

|

|||||||

raise ValidationError("`typical_p` must be > 0.0 and < 1.0")

|

raise ValidationError("`typical_p` must be > 0.0 and < 1.0")

|

||||||

return v

|

return v

|

||||||

|

|

||||||

|

@validator("top_n_tokens")

|

||||||

|

def valid_top_n_tokens(cls, v):

|

||||||

|

if v is not None and v <= 0:

|

||||||

|

raise ValidationError("`top_n_tokens` must be strictly positive")

|

||||||

|

return v
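As a rough illustration of what the new `valid_top_n_tokens` validator accepts and rejects, here is a minimal, dependency-free sketch. It does not use pydantic: the helper name `check_top_n_tokens` and the use of `ValueError` are assumptions made for the example and are not part of the client.

```python
from typing import Optional


def check_top_n_tokens(v: Optional[int]) -> Optional[int]:
    """Apply the same rule as `valid_top_n_tokens`.

    Hypothetical stand-in for illustration only: the real check runs as a
    pydantic validator on `Parameters.top_n_tokens`.
    """
    # None means "do not return top tokens" and is always accepted.
    if v is not None and v <= 0:
        raise ValueError("`top_n_tokens` must be strictly positive")
    return v


# Accepted values pass through unchanged.
print(check_top_n_tokens(None))
print(check_top_n_tokens(5))
```

Note that `0` is rejected on the Python client side, while the benchmark's Rust code in this same commit maps a missing value to `0` via `top_n_tokens.unwrap_or(0)`.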

|

||||||

|

|

||||||

|

|

||||||

class Request(BaseModel):

|

class Request(BaseModel):

|

||||||

# Prompt

|

# Prompt

|

||||||

inputs: str

|

inputs: str

|

||||||

# Generation parameters

|

# Generation parameters

|

||||||

parameters: Optional[Parameters]

|

parameters: Optional[Parameters] = None

|

||||||

# Whether to stream output tokens

|

# Whether to stream output tokens

|

||||||

stream: bool = False

|

stream: bool = False

|

||||||

|

|

||||||

@ -125,9 +133,7 @@ class Request(BaseModel):

|

|||||||

and parameters.best_of > 1

|

and parameters.best_of > 1

|

||||||

and field_value

|

and field_value

|

||||||

):

|

):

|

||||||

raise ValidationError(

|

raise ValidationError("`best_of` != 1 is not supported when `stream` == True")

|

||||||

"`best_of` != 1 is not supported when `stream` == True"

|

|

||||||

)

|

|

||||||

return field_value

|

return field_value

|

||||||

|

|

||||||

|

|

||||||

@ -139,7 +145,7 @@ class InputToken(BaseModel):

|

|||||||

text: str

|

text: str

|

||||||

# Logprob

|

# Logprob

|

||||||

# Optional since the logprob of the first token cannot be computed

|

# Optional since the logprob of the first token cannot be computed

|

||||||

logprob: Optional[float]

|

logprob: Optional[float] = None

|

||||||

|

|

||||||

|

|

||||||

# Generated tokens

|

# Generated tokens

|

||||||

@ -174,11 +180,13 @@ class BestOfSequence(BaseModel):

|

|||||||

# Number of generated tokens

|

# Number of generated tokens

|

||||||

generated_tokens: int

|

generated_tokens: int

|

||||||

# Sampling seed if sampling was activated

|

# Sampling seed if sampling was activated

|

||||||

seed: Optional[int]

|

seed: Optional[int] = None

|

||||||

# Decoder input tokens, empty if decoder_input_details is False

|

# Decoder input tokens, empty if decoder_input_details is False

|

||||||

prefill: List[InputToken]

|

prefill: List[InputToken]

|

||||||

# Generated tokens

|

# Generated tokens

|

||||||

tokens: List[Token]

|

tokens: List[Token]

|

||||||

|

# Most likely tokens

|

||||||

|

top_tokens: Optional[List[List[Token]]]

|

||||||

|

|

||||||

|

|

||||||

# `generate` details

|

# `generate` details

|

||||||

@ -188,13 +196,15 @@ class Details(BaseModel):

|

|||||||

# Number of generated tokens

|

# Number of generated tokens

|

||||||

generated_tokens: int

|

generated_tokens: int

|

||||||

# Sampling seed if sampling was activated

|

# Sampling seed if sampling was activated

|

||||||

seed: Optional[int]

|

seed: Optional[int] = None

|

||||||

# Decoder input tokens, empty if decoder_input_details is False

|

# Decoder input tokens, empty if decoder_input_details is False

|

||||||

prefill: List[InputToken]

|

prefill: List[InputToken]

|

||||||

# Generated tokens

|

# Generated tokens

|

||||||

tokens: List[Token]

|

tokens: List[Token]

|

||||||

|

# Most likely tokens

|

||||||

|

top_tokens: Optional[List[List[Token]]]

|

||||||

# Additional sequences when using the `best_of` parameter

|

# Additional sequences when using the `best_of` parameter

|

||||||

best_of_sequences: Optional[List[BestOfSequence]]

|

best_of_sequences: Optional[List[BestOfSequence]] = None

|

||||||

|

|

||||||

|

|

||||||

# `generate` return value

|

# `generate` return value

|

||||||

@ -212,19 +222,21 @@ class StreamDetails(BaseModel):

|

|||||||

# Number of generated tokens

|

# Number of generated tokens

|

||||||

generated_tokens: int

|

generated_tokens: int

|

||||||

# Sampling seed if sampling was activated

|

# Sampling seed if sampling was activated

|

||||||

seed: Optional[int]

|

seed: Optional[int] = None

|

||||||

|

|

||||||

|

|

||||||

# `generate_stream` return value

|

# `generate_stream` return value

|

||||||

class StreamResponse(BaseModel):

|

class StreamResponse(BaseModel):

|

||||||

# Generated token

|

# Generated token

|

||||||

token: Token

|

token: Token

|

||||||

|

# Most likely tokens

|

||||||

|

top_tokens: Optional[List[Token]]

|

||||||

# Complete generated text

|

# Complete generated text

|

||||||

# Only available when the generation is finished

|

# Only available when the generation is finished

|

||||||

generated_text: Optional[str]

|

generated_text: Optional[str] = None

|

||||||

# Generation details

|

# Generation details

|

||||||

# Only available when the generation is finished

|

# Only available when the generation is finished

|

||||||

details: Optional[StreamDetails]

|

details: Optional[StreamDetails] = None

|

||||||

|

|

||||||

|

|

||||||

# Inference API currently deployed model

|

# Inference API currently deployed model

|

||||||

|

|||||||

@ -10,7 +10,7 @@

|

|||||||

"name": "Apache 2.0",

|

"name": "Apache 2.0",

|

||||||

"url": "https://www.apache.org/licenses/LICENSE-2.0"

|

"url": "https://www.apache.org/licenses/LICENSE-2.0"

|

||||||

},

|

},

|

||||||

"version": "1.0.1"

|

"version": "1.0.3"

|

||||||

},

|

},

|

||||||

"paths": {

|

"paths": {

|

||||||

"/": {

|

"/": {

|

||||||

|

|||||||

@ -23,4 +23,6 @@

|

|||||||

title: Streaming

|

title: Streaming

|

||||||

- local: conceptual/safetensors

|

- local: conceptual/safetensors

|

||||||

title: Safetensors

|

title: Safetensors

|

||||||

|

- local: conceptual/flash_attention

|

||||||

|

title: Flash Attention

|

||||||

title: Conceptual Guides

|

title: Conceptual Guides

|

||||||

|

|||||||

@ -75,6 +75,81 @@ To serve both ChatUI and TGI in same environment, simply add your own endpoints

|

|||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

## Gradio

|

||||||

|

|

||||||

|

Gradio is a Python library that helps you build web applications for your machine learning models with a few lines of code. It has a `ChatInterface` wrapper that helps create neat UIs for chatbots. Let's take a look at how to create a chatbot with streaming mode using TGI and Gradio. First, install Gradio and the Hugging Face Hub Python library.

|

||||||

|

|

||||||

|

```bash

|

||||||

|

pip install huggingface-hub gradio

|

||||||

|

```

|

||||||

|

|

||||||

|

Assuming you are serving your model on port 8080, we will query it through [InferenceClient](consuming_tgi#inference-client).

|

||||||

|

|

||||||

|

```python

|

||||||

|

import gradio as gr

|

||||||

|

from huggingface_hub import InferenceClient

|

||||||

|

|

||||||

|

client = InferenceClient(model="http://127.0.0.1:8080")

|

||||||

|

|

||||||

|

def inference(message, history):

|

||||||

|

partial_message = ""

|

||||||

|

for token in client.text_generation(message, max_new_tokens=20, stream=True):

|

||||||

|

partial_message += token

|

||||||

|

yield partial_message

|

||||||

|

|

||||||

|

gr.ChatInterface(

|

||||||

|

inference,

|

||||||

|

chatbot=gr.Chatbot(height=300),

|

||||||

|

textbox=gr.Textbox(placeholder="Chat with me!", container=False, scale=7),

|

||||||

|

description="This is the demo for Gradio UI consuming TGI endpoint with LLaMA 7B-Chat model.",

|

||||||

|

title="Gradio 🤝 TGI",

|

||||||

|

examples=["Are tomatoes vegetables?"],

|

||||||

|

retry_btn="Retry",

|

||||||

|

undo_btn="Undo",

|

||||||

|

clear_btn="Clear",

|

||||||

|

).queue().launch()

|

||||||

|

```

|

||||||

|

|

||||||

|

The UI looks like this 👇

|

||||||

|

|

||||||

|

<div class="flex justify-center">

|

||||||

|

<img

|

||||||

|

class="block dark:hidden"

|

||||||

|

src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/tgi/gradio-tgi.png"

|

||||||

|

/>

|

||||||

|

<img

|

||||||

|

class="hidden dark:block"

|

||||||

|

src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/tgi/gradio-tgi-dark.png"

|

||||||

|

/>

|

||||||

|

</div>

|

||||||

|

|

||||||

|

You can try the demo directly here 👇

|

||||||

|

|

||||||

|

<div class="block dark:hidden">

|

||||||

|

<iframe

|

||||||

|

src="https://merve-gradio-tgi-2.hf.space?__theme=light"

|

||||||

|

width="850"

|

||||||

|

height="750"

|

||||||

|

></iframe>

|

||||||

|

</div>

|

||||||

|

<div class="hidden dark:block">

|

||||||

|

<iframe

|

||||||

|

src="https://merve-gradio-tgi-2.hf.space?__theme=dark"

|

||||||

|

width="850"

|

||||||

|

height="750"

|

||||||

|

></iframe>

|

||||||

|

</div>

|

||||||

|

|

||||||

|

|

||||||

|

You can disable streaming mode using `return` instead of `yield` in your inference function, like below.

|

||||||

|

|

||||||

|

```python

|

||||||

|

def inference(message, history):

|

||||||

|

return client.text_generation(message, max_new_tokens=20)

|

||||||

|

```

|

||||||

|

|

||||||

|

You can read more about how to customize a `ChatInterface` [here](https://www.gradio.app/guides/creating-a-chatbot-fast).
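To see why the streaming version yields a growing string rather than individual tokens, the sketch below isolates that loop. It runs without a TGI server: `fake_stream` is a made-up stand-in for the iterator returned by `client.text_generation(..., stream=True)`, and `accumulate_stream` is a hypothetical helper name.

```python
from typing import Iterable, Iterator


def accumulate_stream(tokens: Iterable[str]) -> Iterator[str]:
    """Yield the message built so far after each token arrives."""
    partial_message = ""
    for token in tokens:
        partial_message += token
        # Each yielded value is the full text so far, which is what the
        # streaming `inference` function above hands to the chat UI.
        yield partial_message


# Assumed token stream, used here instead of a live TGI endpoint.
fake_stream = ["Tom", "atoes", " are", " fruits"]
snapshots = list(accumulate_stream(fake_stream))
print(snapshots[-1])
```

Each snapshot is the whole message so far, so yielding only the latest token would leave the chat window showing a single fragment at a time.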

|

||||||

|

|

||||||

## API documentation

|

## API documentation

|

||||||

|

|

||||||

You can consult the OpenAPI documentation of the `text-generation-inference` REST API using the `/docs` route. The Swagger UI is also available [here](https://huggingface.github.io/text-generation-inference).

|

You can consult the OpenAPI documentation of the `text-generation-inference` REST API using the `/docs` route. The Swagger UI is also available [here](https://huggingface.github.io/text-generation-inference).

|

||||||

|

|||||||

12

docs/source/conceptual/flash_attention.md

Normal file

12

docs/source/conceptual/flash_attention.md

Normal file

@ -0,0 +1,12 @@

|

|||||||

|

# Flash Attention

|

||||||

|

|

||||||

|

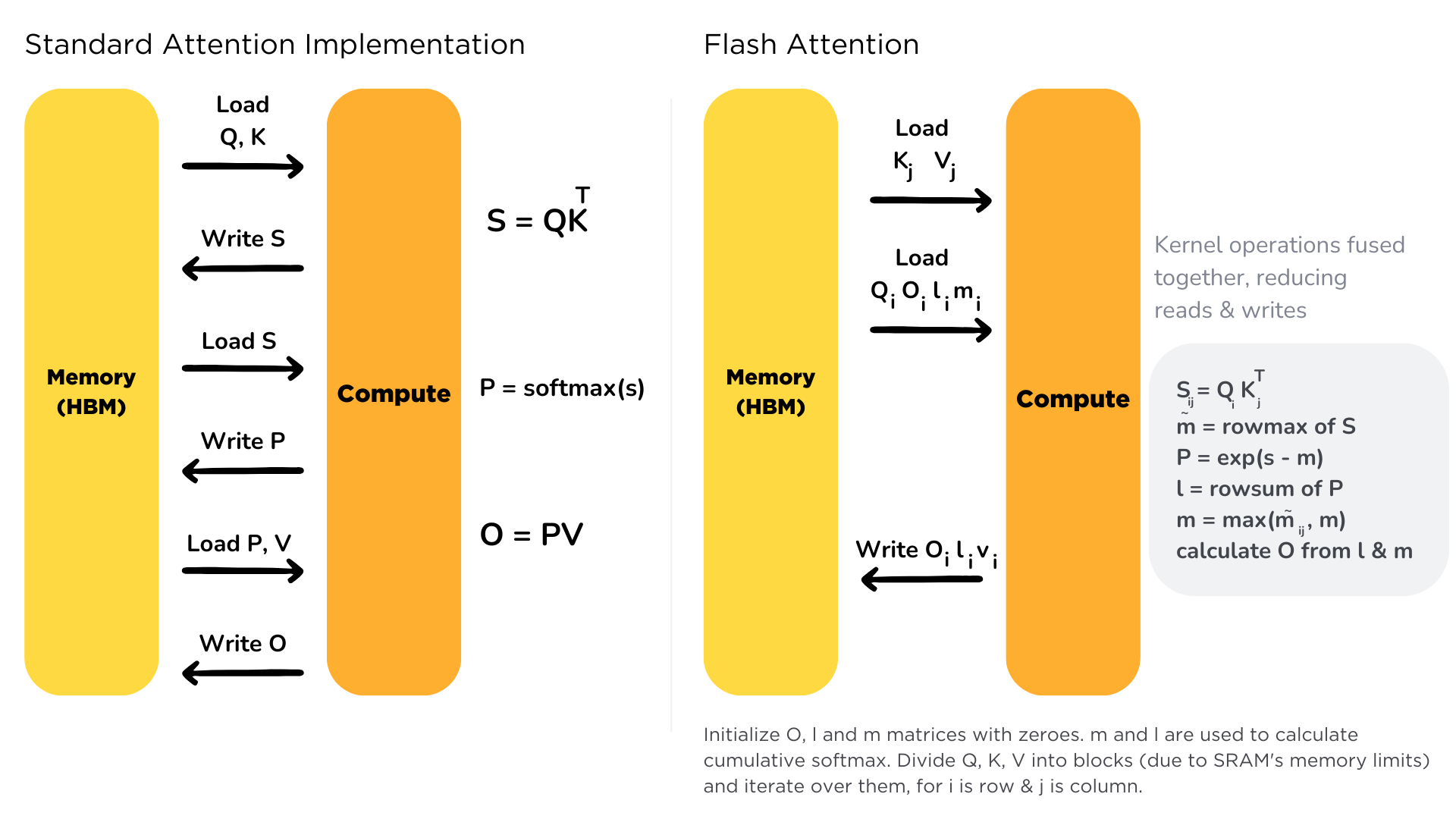

Scaling the transformer architecture is heavily bottlenecked by the self-attention mechanism, which has quadratic time and memory complexity in the sequence length. Recent developments in accelerator hardware have mainly increased compute capacity rather than memory capacity or the speed of data transfer between memory levels. As a result, the attention operation is bound by memory access. **Flash Attention** is an attention algorithm that reduces this problem and scales transformer-based models more efficiently, enabling faster training and inference.

|

||||||

|

|

||||||

|

The standard attention mechanism uses High Bandwidth Memory (HBM) to store, read, and write keys, queries, and values. HBM has a large capacity but is slow to access, whereas on-chip SRAM is much smaller but faster. In the standard attention implementation, the cost of moving keys, queries, and values to and from HBM is high: it loads them from HBM into GPU on-chip SRAM, performs a single step of the attention mechanism, writes the result back to HBM, and repeats this for every attention step. Flash Attention instead loads keys, queries, and values once, fuses the operations of the attention mechanism, and writes the result back.
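
The "load once, fuse, write back" idea can be illustrated with a small pure-Python sketch. This is not the CUDA kernel that TGI uses, and the function names `softmax_attention` and `blockwise_attention` are made up for this illustration. It only shows, for a single query vector, how a running maximum and a running softmax denominator let attention be computed one key/value block at a time, so that the full row of scores never has to be stored.

```python
import math


def softmax_attention(query, keys, values):
    """Reference: compute every score first, then softmax, then the weighted sum."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in keys]
    top = max(scores)  # subtract the maximum for numerical stability
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) / total for i in range(dim)]


def blockwise_attention(query, keys, values, block_size=2):
    """Same result, visiting keys/values one block at a time with a running softmax."""
    scale = 1.0 / math.sqrt(len(query))
    dim = len(values[0])
    running_max = -math.inf   # largest score seen so far
    running_sum = 0.0         # softmax denominator, relative to running_max
    acc = [0.0] * dim         # unnormalized output, relative to running_max

    for start in range(0, len(keys), block_size):
        k_blk = keys[start:start + block_size]
        v_blk = values[start:start + block_size]
        scores = [scale * sum(q * k for q, k in zip(query, key)) for key in k_blk]

        new_max = max(running_max, max(scores))
        rescale = math.exp(running_max - new_max)  # 0.0 on the first block
        probs = [math.exp(s - new_max) for s in scores]

        # Re-express the earlier partial results relative to the new maximum,
        # then fold in this block's contribution.
        running_sum = running_sum * rescale + sum(probs)
        acc = [a * rescale + sum(p * v[i] for p, v in zip(probs, v_blk))
               for i, a in enumerate(acc)]
        running_max = new_max

    return [a / running_sum for a in acc]
```

Both functions return the same vector up to floating-point error. The blockwise version only ever holds `block_size` scores at a time, which is the property that lets a fused kernel keep its working set in fast on-chip memory.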

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

Flash Attention is implemented for supported models. You can check out the complete list of models that support it [here](https://github.com/huggingface/text-generation-inference/tree/main/server/text_generation_server/models); look for the models with the `flash` prefix.

|

||||||

|

|

||||||

|

You can learn more about Flash Attention by reading [the paper](https://arxiv.org/abs/2205.14135).

|

||||||

|

|

||||||

@ -121,9 +121,9 @@ If you're using the free Inference API, you can use `HfInference`. If you're usi

|

|||||||

We can create a `HfInferenceEndpoint` providing our endpoint URL and credential.

|

We can create a `HfInferenceEndpoint` providing our endpoint URL and credential.

|

||||||

|

|

||||||

```js

|

```js

|

||||||

import { HfInference } from '@huggingface/inference'

|

import { HfInferenceEndpoint } from '@huggingface/inference'

|

||||||

|

|

||||||

const hf = new HfInference('https://YOUR_ENDPOINT.endpoints.huggingface.cloud', 'hf_YOUR_TOKEN')

|

const hf = new HfInferenceEndpoint('https://YOUR_ENDPOINT.endpoints.huggingface.cloud', 'hf_YOUR_TOKEN')

|

||||||

|

|

||||||

// prompt

|

// prompt

|

||||||

const prompt = 'What can you do in Nuremberg, Germany? Give me 3 Tips'

|

const prompt = 'What can you do in Nuremberg, Germany? Give me 3 Tips'

|

||||||

@ -143,6 +143,4 @@ SSEs are different than:

|

|||||||

* Polling: where the client keeps calling the server to get data. This means that the server might return empty responses and cause overhead.

|

* Polling: where the client keeps calling the server to get data. This means that the server might return empty responses and cause overhead.

|

||||||

* Webhooks: where there is a bi-directional connection. The server can send information to the client, but the client can also send data to the server after the first request. Webhooks are more complex to operate as they don’t only use HTTP.

|

* Webhooks: where there is a bi-directional connection. The server can send information to the client, but the client can also send data to the server after the first request. Webhooks are more complex to operate as they don’t only use HTTP.

|

||||||

|

|

||||||

One of the limitations of Server-Sent Events is that they limit how many concurrent requests can handle by the server. Instead of timing out when there are too many SSE connections, TGI returns a HTTP Error with an `overloaded` error type (`huggingface_hub` returns `OverloadedError`). This allows the client to manage the overloaded server (e.g. it could display a busy error to the user or it could retry with a new request). To configure the maximum number of concurrent requests, you can specify `--max_concurrent_requests`, allowing to handle backpressure.

|

If there are too many requests at the same time, TGI returns an HTTP Error with an `overloaded` error type (`huggingface_hub` returns `OverloadedError`). This allows the client to manage the overloaded server (e.g., it could display a busy error to the user or retry with a new request). To configure the maximum number of concurrent requests, you can specify `--max_concurrent_requests`, allowing clients to handle backpressure.
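
One way a client might "retry with a new request" is exponential backoff. The sketch below is an illustration under stated assumptions, not part of TGI or `huggingface_hub`: `ServerOverloaded` is a hypothetical stand-in for whatever overloaded error your client raises (such as the `OverloadedError` mentioned above), and `call_with_backoff` is a made-up helper name.

```python
import time


class ServerOverloaded(Exception):
    """Hypothetical stand-in for the client's overloaded error."""


def call_with_backoff(request, max_attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call `request()`; on ServerOverloaded, wait and retry with exponential backoff."""
    for attempt in range(max_attempts):
        try:
            return request()
        except ServerOverloaded:
            if attempt == max_attempts - 1:
                raise  # give up so the caller can show a "busy" message
            sleep(base_delay * 2 ** attempt)  # 0.5s, 1s, 2s, ...
```

In real use, `request` would wrap the actual generation call and the `except` clause would catch the client's own error type. The `sleep` parameter exists only so the delay can be replaced in tests.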

|

||||||

|

|

||||||

One of the limitations of Server-Sent Events is that they limit how many concurrent requests can handle by the server. Instead of timing out when there are too many SSE connections, TGI returns an HTTP Error with an `overloaded` error type (`huggingface_hub` returns `OverloadedError`). This allows the client to manage the overloaded server (e.g., it could display a busy error to the user or retry with a new request). To configure the maximum number of concurrent requests, you can specify `--max_concurrent_requests`, allowing clients to handle backpressure.

|

|

||||||

|

|||||||

@ -8,7 +8,7 @@ Let's say you want to deploy [Falcon-7B Instruct](https://huggingface.co/tiiuae/

|

|||||||

model=tiiuae/falcon-7b-instruct

|

model=tiiuae/falcon-7b-instruct

|

||||||

volume=$PWD/data # share a volume with the Docker container to avoid downloading weights every run

|

volume=$PWD/data # share a volume with the Docker container to avoid downloading weights every run

|

||||||

|

|

||||||

docker run --gpus all --shm-size 1g -p 8080:80 -v $volume:/data ghcr.io/huggingface/text-generation-inference:1.0.1 --model-id $model

|

docker run --gpus all --shm-size 1g -p 8080:80 -v $volume:/data ghcr.io/huggingface/text-generation-inference:1.0.3 --model-id $model

|

||||||

```

|

```

|

||||||

|

|

||||||

<Tip warning={true}>

|

<Tip warning={true}>

|

||||||

@ -85,7 +85,7 @@ curl 127.0.0.1:8080/generate \

|

|||||||

To see all possible deploy flags and options, you can use the `--help` flag. It's possible to configure the number of shards, quantization, generation parameters, and more.

|

To see all possible deploy flags and options, you can use the `--help` flag. It's possible to configure the number of shards, quantization, generation parameters, and more.

|

||||||

|

|

||||||

```bash

|

```bash

|

||||||

docker run ghcr.io/huggingface/text-generation-inference:1.0.1 --help

|

docker run ghcr.io/huggingface/text-generation-inference:1.0.3 --help

|

||||||

```

|

```

|

||||||

|

|

||||||

</Tip>

|

</Tip>

|

||||||

|

|||||||

@ -11,52 +11,52 @@

|

|||||||

},

|

},

|

||||||

{

|

{

|

||||||

"id": 4911,

|

"id": 4911,

|

||||||

"logprob": -5.3632812,

|

"logprob": -5.7773438,

|

||||||

"text": "User"

|

"text": "User"

|

||||||

},

|

},

|

||||||

{

|

{

|

||||||

"id": 29901,

|

"id": 29901,

|

||||||

"logprob": -0.00762558,

|

"logprob": -0.0069999695,

|

||||||

"text": ":"

|

"text": ":"

|

||||||

},

|

},

|

||||||

{

|

{

|

||||||

"id": 32000,

|

"id": 32000,

|

||||||

"logprob": -0.7739258,

|

"logprob": -0.8125,

|

||||||

"text": "<fake_token_around_image>"

|

"text": "<fake_token_around_image>"

|

||||||

},

|

},

|

||||||

{

|

{

|

||||||

"id": 32001,

|

"id": 32001,

|

||||||

"logprob": -9.775162e-05,

|

"logprob": -6.651878e-05,

|

||||||

"text": "<image>"

|

"text": "<image>"

|

||||||

},

|

},

|

||||||

{

|

{

|

||||||

"id": 32000,

|

"id": 32000,

|

||||||

"logprob": -1.1920929e-07,

|

"logprob": -3.5762787e-07,

|

||||||

"text": "<fake_token_around_image>"

|

"text": "<fake_token_around_image>"

|

||||||

},

|

},

|

||||||

{

|

{

|

||||||

"id": 1815,

|

"id": 1815,

|

||||||

"logprob": -4.4140625,

|

"logprob": -4.2265625,

|

||||||

"text": "Can"

|

"text": "Can"

|

||||||

},

|

},

|

||||||

{

|

{

|

||||||

"id": 366,

|

"id": 366,

|

||||||

"logprob": -0.01436615,

|

"logprob": -0.013977051,

|

||||||

"text": "you"

|

"text": "you"

|

||||||

},

|

},

|

||||||

{

|

{

|

||||||

"id": 2649,

|

"id": 2649,

|

||||||

"logprob": -4.9414062,

|

"logprob": -4.4375,

|

||||||

"text": "tell"

|

"text": "tell"

|

||||||

},

|

},

|

||||||

{

|

{

|

||||||

"id": 592,

|

"id": 592,

|

||||||

"logprob": -0.3005371,

|

"logprob": -0.29077148,

|

||||||

"text": "me"

|

"text": "me"

|

||||||

},

|

},

|

||||||

{

|

{

|

||||||

"id": 263,

|

"id": 263,

|

||||||

"logprob": -3.5703125,

|

"logprob": -4.2109375,

|

||||||

"text": "a"

|

"text": "a"

|

||||||

},

|

},

|

||||||

{

|

{

|

||||||

@ -66,37 +66,37 @@

|

|||||||

},

|

},

|

||||||

{

|

{

|

||||||

"id": 3273,

|

"id": 3273,

|

||||||

"logprob": -1.9111328,

|

"logprob": -1.8671875,

|

||||||

"text": "short"

|

"text": "short"

|

||||||

},

|

},

|

||||||

{

|

{

|

||||||

"id": 5828,

|

"id": 5828,

|

||||||

"logprob": -0.28881836,

|

"logprob": -0.26586914,

|

||||||

"text": "story"

|

"text": "story"

|

||||||

},

|

},

|

||||||

{

|

{

|

||||||

"id": 2729,

|

"id": 2729,

|

||||||

"logprob": -3.4179688,

|

"logprob": -3.7460938,

|

||||||

"text": "based"

|

"text": "based"

|

||||||

},

|

},

|

||||||

{

|

{

|

||||||

"id": 373,

|

"id": 373,

|

||||||

"logprob": -0.00056886673,

|

"logprob": -0.0005350113,

|

||||||

"text": "on"

|

"text": "on"

|

||||||

},

|

},

|

||||||

{

|

{

|

||||||

"id": 278,

|

"id": 278,

|

||||||

"logprob": -0.14123535,

|

"logprob": -0.13867188,

|

||||||

"text": "the"

|

"text": "the"

|

||||||

},

|

},

|

||||||

{

|

{

|

||||||

"id": 1967,

|

"id": 1967,

|

||||||

"logprob": -0.053985596,

|

"logprob": -0.06842041,

|

||||||

"text": "image"

|

"text": "image"

|

||||||

},

|

},

|

||||||

{

|

{

|

||||||

"id": 29973,

|

"id": 29973,

|

||||||

"logprob": -0.15771484,

|

"logprob": -0.15319824,

|

||||||

"text": "?"

|

"text": "?"

|

||||||

}

|

}

|

||||||

],

|

],

|

||||||

@ -104,25 +104,25 @@

|

|||||||

"tokens": [

|

"tokens": [

|

||||||

{

|

{

|

||||||

"id": 32002,

|

"id": 32002,

|

||||||

"logprob": -0.004295349,

|

"logprob": -0.0019445419,

|

||||||

"special": true,

|

"special": true,

|

||||||

"text": "<end_of_utterance>"

|

"text": "<end_of_utterance>"

|

||||||

},

|

},

|

||||||

{

|

{

|

||||||

"id": 29871,

|

"id": 29871,

|

||||||

"logprob": -7.43866e-05,

|

"logprob": -8.404255e-05,

|

||||||

"special": false,

|

"special": false,

|

||||||

"text": " "

|

"text": " "

|

||||||

},

|

},

|

||||||

{

|

{

|

||||||

"id": 13,

|

"id": 13,

|

||||||

"logprob": -2.3126602e-05,

|

"logprob": -1.7881393e-05,

|

||||||

"special": false,

|

"special": false,

|

||||||

"text": "\n"

|

"text": "\n"

|

||||||

},

|

},

|

||||||

{

|

{

|

||||||

"id": 7900,

|

"id": 7900,

|

||||||

"logprob": -3.9339066e-06,

|

"logprob": -3.0994415e-06,

|

||||||

"special": false,

|

"special": false,

|

||||||

"text": "Ass"

|

"text": "Ass"

|

||||||

},

|

},

|

||||||

@ -134,35 +134,36 @@

|

|||||||

},

|

},

|

||||||

{

|

{

|

||||||

"id": 29901,

|

"id": 29901,

|

||||||

"logprob": -2.6226044e-06,

|

"logprob": -3.2186508e-06,

|

||||||

"special": false,

|

"special": false,

|

||||||

"text": ":"

|

"text": ":"

|

||||||

},

|

},

|

||||||

{

|

{

|

||||||

"id": 319,

|

"id": 319,

|

||||||

"logprob": -0.87841797,

|

"logprob": -0.9057617,

|

||||||

"special": false,

|

"special": false,

|

||||||

"text": " A"

|

"text": " A"

|

||||||

},

|

},

|

||||||

{

|

{

|

||||||

"id": 521,

|

"id": 696,

|

||||||

"logprob": -1.3837891,

|

"logprob": -1.2314453,

|

||||||

"special": false,

|

"special": false,

|

||||||

"text": " ch"

|

"text": " ro"

|

||||||

},

|

},

|

||||||

{

|

{

|

||||||

"id": 21475,

|

"id": 15664,

|

||||||

"logprob": -0.00051641464,

|

"logprob": -0.00024914742,

|

||||||

"special": false,

|

"special": false,

|

||||||

"text": "icken"

|

"text": "oster"

|

||||||

},

|

},

|

||||||

{

|

{

|

||||||

"id": 338,

|

"id": 15028,

|

||||||

"logprob": -1.1435547,

|

"logprob": -1.1621094,

|

||||||

"special": false,

|

"special": false,

|

||||||

"text": " is"

|

"text": " stands"

|

||||||

}

|

}

|

||||||

]

|

],

|

||||||

|

"top_tokens": null

|

||||||

},

|

},

|

||||||

"generated_text": "\nAssistant: A chicken is"

|

"generated_text": "\nAssistant: A rooster stands"

|

||||||

}

|

}

|

||||||

|

|||||||

File diff suppressed because it is too large

Load Diff

982

integration-tests/poetry.lock

generated

Normal file

982

integration-tests/poetry.lock

generated

Normal file

@ -0,0 +1,982 @@

|

|||||||

|

# This file is automatically @generated by Poetry 1.5.1 and should not be changed by hand.

|

||||||

|

|

||||||

|

[[package]]

|

||||||

|

name = "aiohttp"

|

||||||

|

version = "3.8.5"

|

||||||

|

description = "Async http client/server framework (asyncio)"

|

||||||

|

optional = false

|

||||||

|

python-versions = ">=3.6"

|

||||||

|

files = [

|

||||||

|

{file = "aiohttp-3.8.5-cp310-cp310-macosx_10_9_universal2.whl", hash = "sha256:a94159871304770da4dd371f4291b20cac04e8c94f11bdea1c3478e557fbe0d8"},

|

||||||

|

{file = "aiohttp-3.8.5-cp310-cp310-macosx_10_9_x86_64.whl", hash = "sha256:13bf85afc99ce6f9ee3567b04501f18f9f8dbbb2ea11ed1a2e079670403a7c84"},

|

||||||

|

{file = "aiohttp-3.8.5-cp310-cp310-macosx_11_0_arm64.whl", hash = "sha256:2ce2ac5708501afc4847221a521f7e4b245abf5178cf5ddae9d5b3856ddb2f3a"},

|

||||||

|

{file = "aiohttp-3.8.5-cp310-cp310-manylinux_2_17_aarch64.manylinux2014_aarch64.whl", hash = "sha256:96943e5dcc37a6529d18766597c491798b7eb7a61d48878611298afc1fca946c"},

|

||||||

|

{file = "aiohttp-3.8.5-cp310-cp310-manylinux_2_17_ppc64le.manylinux2014_ppc64le.whl", hash = "sha256:2ad5c3c4590bb3cc28b4382f031f3783f25ec223557124c68754a2231d989e2b"},

|

||||||

|

{file = "aiohttp-3.8.5-cp310-cp310-manylinux_2_17_s390x.manylinux2014_s390x.whl", hash = "sha256:0c413c633d0512df4dc7fd2373ec06cc6a815b7b6d6c2f208ada7e9e93a5061d"},

|

||||||

|

{file = "aiohttp-3.8.5-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl", hash = "sha256:df72ac063b97837a80d80dec8d54c241af059cc9bb42c4de68bd5b61ceb37caa"},

|

||||||

|

{file = "aiohttp-3.8.5-cp310-cp310-manylinux_2_5_i686.manylinux1_i686.manylinux_2_17_i686.manylinux2014_i686.whl", hash = "sha256:c48c5c0271149cfe467c0ff8eb941279fd6e3f65c9a388c984e0e6cf57538e14"},

|

||||||

|

{file = "aiohttp-3.8.5-cp310-cp310-musllinux_1_1_aarch64.whl", hash = "sha256:368a42363c4d70ab52c2c6420a57f190ed3dfaca6a1b19afda8165ee16416a82"},

|

||||||

|

{file = "aiohttp-3.8.5-cp310-cp310-musllinux_1_1_i686.whl", hash = "sha256:7607ec3ce4993464368505888af5beb446845a014bc676d349efec0e05085905"},

|

||||||

|

{file = "aiohttp-3.8.5-cp310-cp310-musllinux_1_1_ppc64le.whl", hash = "sha256:0d21c684808288a98914e5aaf2a7c6a3179d4df11d249799c32d1808e79503b5"},

|

||||||

|

{file = "aiohttp-3.8.5-cp310-cp310-musllinux_1_1_s390x.whl", hash = "sha256:312fcfbacc7880a8da0ae8b6abc6cc7d752e9caa0051a53d217a650b25e9a691"},

|

||||||

|

{file = "aiohttp-3.8.5-cp310-cp310-musllinux_1_1_x86_64.whl", hash = "sha256:ad093e823df03bb3fd37e7dec9d4670c34f9e24aeace76808fc20a507cace825"},

|

||||||

|

{file = "aiohttp-3.8.5-cp310-cp310-win32.whl", hash = "sha256:33279701c04351a2914e1100b62b2a7fdb9a25995c4a104259f9a5ead7ed4802"},

|

||||||

|

{file = "aiohttp-3.8.5-cp310-cp310-win_amd64.whl", hash = "sha256:6e4a280e4b975a2e7745573e3fc9c9ba0d1194a3738ce1cbaa80626cc9b4f4df"},

|

||||||

|

{file = "aiohttp-3.8.5-cp311-cp311-macosx_10_9_universal2.whl", hash = "sha256:ae871a964e1987a943d83d6709d20ec6103ca1eaf52f7e0d36ee1b5bebb8b9b9"},

|

||||||

|

{file = "aiohttp-3.8.5-cp311-cp311-macosx_10_9_x86_64.whl", hash = "sha256:461908b2578955045efde733719d62f2b649c404189a09a632d245b445c9c975"},

|

||||||

|

{file = "aiohttp-3.8.5-cp311-cp311-macosx_11_0_arm64.whl", hash = "sha256:72a860c215e26192379f57cae5ab12b168b75db8271f111019509a1196dfc780"},

|

||||||

|

{file = "aiohttp-3.8.5-cp311-cp311-manylinux_2_17_aarch64.manylinux2014_aarch64.whl", hash = "sha256:cc14be025665dba6202b6a71cfcdb53210cc498e50068bc088076624471f8bb9"},

|

||||||

|

{file = "aiohttp-3.8.5-cp311-cp311-manylinux_2_17_ppc64le.manylinux2014_ppc64le.whl", hash = "sha256:8af740fc2711ad85f1a5c034a435782fbd5b5f8314c9a3ef071424a8158d7f6b"},

|

||||||

|

{file = "aiohttp-3.8.5-cp311-cp311-manylinux_2_17_s390x.manylinux2014_s390x.whl", hash = "sha256:841cd8233cbd2111a0ef0a522ce016357c5e3aff8a8ce92bcfa14cef890d698f"},

|

||||||

|

{file = "aiohttp-3.8.5-cp311-cp311-manylinux_2_17_x86_64.manylinux2014_x86_64.whl", hash = "sha256:5ed1c46fb119f1b59304b5ec89f834f07124cd23ae5b74288e364477641060ff"},

|

||||||

|

{file = "aiohttp-3.8.5-cp311-cp311-manylinux_2_5_i686.manylinux1_i686.manylinux_2_17_i686.manylinux2014_i686.whl", hash = "sha256:84f8ae3e09a34f35c18fa57f015cc394bd1389bce02503fb30c394d04ee6b938"},

|

||||||

|

{file = "aiohttp-3.8.5-cp311-cp311-musllinux_1_1_aarch64.whl", hash = "sha256:62360cb771707cb70a6fd114b9871d20d7dd2163a0feafe43fd115cfe4fe845e"},

|

||||||

|

{file = "aiohttp-3.8.5-cp311-cp311-musllinux_1_1_i686.whl", hash = "sha256:23fb25a9f0a1ca1f24c0a371523546366bb642397c94ab45ad3aedf2941cec6a"},

|

||||||

|

{file = "aiohttp-3.8.5-cp311-cp311-musllinux_1_1_ppc64le.whl", hash = "sha256:b0ba0d15164eae3d878260d4c4df859bbdc6466e9e6689c344a13334f988bb53"},

|

||||||

|

{file = "aiohttp-3.8.5-cp311-cp311-musllinux_1_1_s390x.whl", hash = "sha256:5d20003b635fc6ae3f96d7260281dfaf1894fc3aa24d1888a9b2628e97c241e5"},

|

||||||

|

{file = "aiohttp-3.8.5-cp311-cp311-musllinux_1_1_x86_64.whl", hash = "sha256:0175d745d9e85c40dcc51c8f88c74bfbaef9e7afeeeb9d03c37977270303064c"},

|

||||||

|

{file = "aiohttp-3.8.5-cp311-cp311-win32.whl", hash = "sha256:2e1b1e51b0774408f091d268648e3d57f7260c1682e7d3a63cb00d22d71bb945"},

|

||||||

|

{file = "aiohttp-3.8.5-cp311-cp311-win_amd64.whl", hash = "sha256:043d2299f6dfdc92f0ac5e995dfc56668e1587cea7f9aa9d8a78a1b6554e5755"},

|

||||||

|

{file = "aiohttp-3.8.5-cp36-cp36m-macosx_10_9_x86_64.whl", hash = "sha256:cae533195e8122584ec87531d6df000ad07737eaa3c81209e85c928854d2195c"},

|

||||||

|

{file = "aiohttp-3.8.5-cp36-cp36m-manylinux_2_17_aarch64.manylinux2014_aarch64.whl", hash = "sha256:4f21e83f355643c345177a5d1d8079f9f28b5133bcd154193b799d380331d5d3"},

|

||||||

|

{file = "aiohttp-3.8.5-cp36-cp36m-manylinux_2_17_ppc64le.manylinux2014_ppc64le.whl", hash = "sha256:a7a75ef35f2df54ad55dbf4b73fe1da96f370e51b10c91f08b19603c64004acc"},

|

||||||

|

{file = "aiohttp-3.8.5-cp36-cp36m-manylinux_2_17_s390x.manylinux2014_s390x.whl", hash = "sha256:2e2e9839e14dd5308ee773c97115f1e0a1cb1d75cbeeee9f33824fa5144c7634"},

|

||||||

|

{file = "aiohttp-3.8.5-cp36-cp36m-manylinux_2_17_x86_64.manylinux2014_x86_64.whl", hash = "sha256:c44e65da1de4403d0576473e2344828ef9c4c6244d65cf4b75549bb46d40b8dd"},

|

||||||

|

{file = "aiohttp-3.8.5-cp36-cp36m-manylinux_2_5_i686.manylinux1_i686.manylinux_2_17_i686.manylinux2014_i686.whl", hash = "sha256:78d847e4cde6ecc19125ccbc9bfac4a7ab37c234dd88fbb3c5c524e8e14da543"},

|

||||||

|

{file = "aiohttp-3.8.5-cp36-cp36m-musllinux_1_1_aarch64.whl", hash = "sha256:c7a815258e5895d8900aec4454f38dca9aed71085f227537208057853f9d13f2"},

|

||||||

|

{file = "aiohttp-3.8.5-cp36-cp36m-musllinux_1_1_i686.whl", hash = "sha256:8b929b9bd7cd7c3939f8bcfffa92fae7480bd1aa425279d51a89327d600c704d"},

|

||||||

|

{file = "aiohttp-3.8.5-cp36-cp36m-musllinux_1_1_ppc64le.whl", hash = "sha256:5db3a5b833764280ed7618393832e0853e40f3d3e9aa128ac0ba0f8278d08649"},

|

||||||

|

{file = "aiohttp-3.8.5-cp36-cp36m-musllinux_1_1_s390x.whl", hash = "sha256:a0215ce6041d501f3155dc219712bc41252d0ab76474615b9700d63d4d9292af"},

|

||||||

|

{file = "aiohttp-3.8.5-cp36-cp36m-musllinux_1_1_x86_64.whl", hash = "sha256:fd1ed388ea7fbed22c4968dd64bab0198de60750a25fe8c0c9d4bef5abe13824"},

|

||||||

|

{file = "aiohttp-3.8.5-cp36-cp36m-win32.whl", hash = "sha256:6e6783bcc45f397fdebc118d772103d751b54cddf5b60fbcc958382d7dd64f3e"},

|

||||||

|

{file = "aiohttp-3.8.5-cp36-cp36m-win_amd64.whl", hash = "sha256:b5411d82cddd212644cf9360879eb5080f0d5f7d809d03262c50dad02f01421a"},

|

||||||

|

{file = "aiohttp-3.8.5-cp37-cp37m-macosx_10_9_x86_64.whl", hash = "sha256:01d4c0c874aa4ddfb8098e85d10b5e875a70adc63db91f1ae65a4b04d3344cda"},

|

||||||

|

{file = "aiohttp-3.8.5-cp37-cp37m-manylinux_2_17_aarch64.manylinux2014_aarch64.whl", hash = "sha256:e5980a746d547a6ba173fd5ee85ce9077e72d118758db05d229044b469d9029a"},

|

||||||

|

{file = "aiohttp-3.8.5-cp37-cp37m-manylinux_2_17_ppc64le.manylinux2014_ppc64le.whl", hash = "sha256:2a482e6da906d5e6e653be079b29bc173a48e381600161c9932d89dfae5942ef"},

|

||||||

|

{file = "aiohttp-3.8.5-cp37-cp37m-manylinux_2_17_s390x.manylinux2014_s390x.whl", hash = "sha256:80bd372b8d0715c66c974cf57fe363621a02f359f1ec81cba97366948c7fc873"},

|

||||||

|

{file = "aiohttp-3.8.5-cp37-cp37m-manylinux_2_17_x86_64.manylinux2014_x86_64.whl", hash = "sha256:c1161b345c0a444ebcf46bf0a740ba5dcf50612fd3d0528883fdc0eff578006a"},

|

||||||

|

{file = "aiohttp-3.8.5-cp37-cp37m-manylinux_2_5_i686.manylinux1_i686.manylinux_2_17_i686.manylinux2014_i686.whl", hash = "sha256:cd56db019015b6acfaaf92e1ac40eb8434847d9bf88b4be4efe5bfd260aee692"},

|

||||||

|

{file = "aiohttp-3.8.5-cp37-cp37m-musllinux_1_1_aarch64.whl", hash = "sha256:153c2549f6c004d2754cc60603d4668899c9895b8a89397444a9c4efa282aaf4"},

|

||||||

|

{file = "aiohttp-3.8.5-cp37-cp37m-musllinux_1_1_i686.whl", hash = "sha256:4a01951fabc4ce26ab791da5f3f24dca6d9a6f24121746eb19756416ff2d881b"},

|

||||||

|

{file = "aiohttp-3.8.5-cp37-cp37m-musllinux_1_1_ppc64le.whl", hash = "sha256:bfb9162dcf01f615462b995a516ba03e769de0789de1cadc0f916265c257e5d8"},

|

||||||

|

{file = "aiohttp-3.8.5-cp37-cp37m-musllinux_1_1_s390x.whl", hash = "sha256:7dde0009408969a43b04c16cbbe252c4f5ef4574ac226bc8815cd7342d2028b6"},

|

||||||

|

{file = "aiohttp-3.8.5-cp37-cp37m-musllinux_1_1_x86_64.whl", hash = "sha256:4149d34c32f9638f38f544b3977a4c24052042affa895352d3636fa8bffd030a"},

|

||||||

|

{file = "aiohttp-3.8.5-cp37-cp37m-win32.whl", hash = "sha256:68c5a82c8779bdfc6367c967a4a1b2aa52cd3595388bf5961a62158ee8a59e22"},

|

||||||

|

{file = "aiohttp-3.8.5-cp37-cp37m-win_amd64.whl", hash = "sha256:2cf57fb50be5f52bda004b8893e63b48530ed9f0d6c96c84620dc92fe3cd9b9d"},

|

||||||

|

{file = "aiohttp-3.8.5-cp38-cp38-macosx_10_9_universal2.whl", hash = "sha256:eca4bf3734c541dc4f374ad6010a68ff6c6748f00451707f39857f429ca36ced"},

|

||||||

|

{file = "aiohttp-3.8.5-cp38-cp38-macosx_10_9_x86_64.whl", hash = "sha256:1274477e4c71ce8cfe6c1ec2f806d57c015ebf84d83373676036e256bc55d690"},

|

||||||

|

{file = "aiohttp-3.8.5-cp38-cp38-macosx_11_0_arm64.whl", hash = "sha256:28c543e54710d6158fc6f439296c7865b29e0b616629767e685a7185fab4a6b9"},

|

||||||

|

{file = "aiohttp-3.8.5-cp38-cp38-manylinux_2_17_aarch64.manylinux2014_aarch64.whl", hash = "sha256:910bec0c49637d213f5d9877105d26e0c4a4de2f8b1b29405ff37e9fc0ad52b8"},

|

||||||

|

{file = "aiohttp-3.8.5-cp38-cp38-manylinux_2_17_ppc64le.manylinux2014_ppc64le.whl", hash = "sha256:5443910d662db951b2e58eb70b0fbe6b6e2ae613477129a5805d0b66c54b6cb7"},

|

||||||

|

{file = "aiohttp-3.8.5-cp38-cp38-manylinux_2_17_s390x.manylinux2014_s390x.whl", hash = "sha256:2e460be6978fc24e3df83193dc0cc4de46c9909ed92dd47d349a452ef49325b7"},

|

||||||

|

{file = "aiohttp-3.8.5-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl", hash = "sha256:fb1558def481d84f03b45888473fc5a1f35747b5f334ef4e7a571bc0dfcb11f8"},

|

||||||

|

{file = "aiohttp-3.8.5-cp38-cp38-manylinux_2_5_i686.manylinux1_i686.manylinux_2_17_i686.manylinux2014_i686.whl", hash = "sha256:34dd0c107799dcbbf7d48b53be761a013c0adf5571bf50c4ecad5643fe9cfcd0"},

|

||||||

|

{file = "aiohttp-3.8.5-cp38-cp38-musllinux_1_1_aarch64.whl", hash = "sha256:aa1990247f02a54185dc0dff92a6904521172a22664c863a03ff64c42f9b5410"},

|

||||||

|

{file = "aiohttp-3.8.5-cp38-cp38-musllinux_1_1_i686.whl", hash = "sha256:0e584a10f204a617d71d359fe383406305a4b595b333721fa50b867b4a0a1548"},

|

||||||

|

{file = "aiohttp-3.8.5-cp38-cp38-musllinux_1_1_ppc64le.whl", hash = "sha256:a3cf433f127efa43fee6b90ea4c6edf6c4a17109d1d037d1a52abec84d8f2e42"},

|

||||||

|

{file = "aiohttp-3.8.5-cp38-cp38-musllinux_1_1_s390x.whl", hash = "sha256:c11f5b099adafb18e65c2c997d57108b5bbeaa9eeee64a84302c0978b1ec948b"},

|

||||||

|

{file = "aiohttp-3.8.5-cp38-cp38-musllinux_1_1_x86_64.whl", hash = "sha256:84de26ddf621d7ac4c975dbea4c945860e08cccde492269db4e1538a6a6f3c35"},

|

||||||

|

{file = "aiohttp-3.8.5-cp38-cp38-win32.whl", hash = "sha256:ab88bafedc57dd0aab55fa728ea10c1911f7e4d8b43e1d838a1739f33712921c"},

|

||||||

|

{file = "aiohttp-3.8.5-cp38-cp38-win_amd64.whl", hash = "sha256:5798a9aad1879f626589f3df0f8b79b3608a92e9beab10e5fda02c8a2c60db2e"},

|

||||||

|

{file = "aiohttp-3.8.5-cp39-cp39-macosx_10_9_universal2.whl", hash = "sha256:a6ce61195c6a19c785df04e71a4537e29eaa2c50fe745b732aa937c0c77169f3"},

|

||||||

|

{file = "aiohttp-3.8.5-cp39-cp39-macosx_10_9_x86_64.whl", hash = "sha256:773dd01706d4db536335fcfae6ea2440a70ceb03dd3e7378f3e815b03c97ab51"},

|

||||||

|

{file = "aiohttp-3.8.5-cp39-cp39-macosx_11_0_arm64.whl", hash = "sha256:f83a552443a526ea38d064588613aca983d0ee0038801bc93c0c916428310c28"},

|

||||||

|

{file = "aiohttp-3.8.5-cp39-cp39-manylinux_2_17_aarch64.manylinux2014_aarch64.whl", hash = "sha256:1f7372f7341fcc16f57b2caded43e81ddd18df53320b6f9f042acad41f8e049a"},

|

||||||

|

{file = "aiohttp-3.8.5-cp39-cp39-manylinux_2_17_ppc64le.manylinux2014_ppc64le.whl", hash = "sha256:ea353162f249c8097ea63c2169dd1aa55de1e8fecbe63412a9bc50816e87b761"},

|

||||||

|

{file = "aiohttp-3.8.5-cp39-cp39-manylinux_2_17_s390x.manylinux2014_s390x.whl", hash = "sha256:e5d47ae48db0b2dcf70bc8a3bc72b3de86e2a590fc299fdbbb15af320d2659de"},

|

||||||

|

{file = "aiohttp-3.8.5-cp39-cp39-manylinux_2_17_x86_64.manylinux2014_x86_64.whl", hash = "sha256:d827176898a2b0b09694fbd1088c7a31836d1a505c243811c87ae53a3f6273c1"},

|

||||||

|

{file = "aiohttp-3.8.5-cp39-cp39-manylinux_2_5_i686.manylinux1_i686.manylinux_2_17_i686.manylinux2014_i686.whl", hash = "sha256:3562b06567c06439d8b447037bb655ef69786c590b1de86c7ab81efe1c9c15d8"},

|

||||||

|

{file = "aiohttp-3.8.5-cp39-cp39-musllinux_1_1_aarch64.whl", hash = "sha256:4e874cbf8caf8959d2adf572a78bba17cb0e9d7e51bb83d86a3697b686a0ab4d"},

|

||||||

|

{file = "aiohttp-3.8.5-cp39-cp39-musllinux_1_1_i686.whl", hash = "sha256:6809a00deaf3810e38c628e9a33271892f815b853605a936e2e9e5129762356c"},

|

||||||

|

{file = "aiohttp-3.8.5-cp39-cp39-musllinux_1_1_ppc64le.whl", hash = "sha256:33776e945d89b29251b33a7e7d006ce86447b2cfd66db5e5ded4e5cd0340585c"},

|

||||||

|

{file = "aiohttp-3.8.5-cp39-cp39-musllinux_1_1_s390x.whl", hash = "sha256:eaeed7abfb5d64c539e2db173f63631455f1196c37d9d8d873fc316470dfbacd"},

|

||||||

|

{file = "aiohttp-3.8.5-cp39-cp39-musllinux_1_1_x86_64.whl", hash = "sha256:e91d635961bec2d8f19dfeb41a539eb94bd073f075ca6dae6c8dc0ee89ad6f91"},

|

||||||

|