Mirror of https://github.com/huggingface/text-generation-inference.git (synced 2025-09-10 20:04:52 +00:00)

Merge branch 'main' into safetensors_docs

Commit 0ef535e77e

@@ -8,7 +8,7 @@ members = [
 ]

 [workspace.package]
-version = "1.0.1"
+version = "1.0.3"
 edition = "2021"
 authors = ["Olivier Dehaene"]
 homepage = "https://github.com/huggingface/text-generation-inference"

@@ -67,6 +67,7 @@ to power Hugging Chat, the Inference API and Inference Endpoint.
 - [Falcon 40B](https://huggingface.co/tiiuae/falcon-40b)
 - [MPT](https://huggingface.co/mosaicml/mpt-30b)
 - [Llama V2](https://huggingface.co/meta-llama)
+- [Code Llama](https://huggingface.co/codellama)

 Other architectures are supported on a best effort basis using:

@@ -86,7 +87,7 @@ The easiest way of getting started is using the official Docker container:
 model=tiiuae/falcon-7b-instruct
 volume=$PWD/data # share a volume with the Docker container to avoid downloading weights every run

-docker run --gpus all --shm-size 1g -p 8080:80 -v $volume:/data ghcr.io/huggingface/text-generation-inference:1.0.1 --model-id $model
+docker run --gpus all --shm-size 1g -p 8080:80 -v $volume:/data ghcr.io/huggingface/text-generation-inference:1.0.3 --model-id $model
 ```
 **Note:** To use GPUs, you need to install the [NVIDIA Container Toolkit](https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/install-guide.html). We also recommend using NVIDIA drivers with CUDA version 11.8 or higher. For running the Docker container on a machine with no GPUs or CUDA support, it is enough to remove the `--gpus all` flag and add `--disable-custom-kernels`, please note CPU is not the intended platform for this project, so performance might be subpar.

@@ -153,7 +154,7 @@ model=meta-llama/Llama-2-7b-chat-hf
 volume=$PWD/data # share a volume with the Docker container to avoid downloading weights every run
 token=<your cli READ token>

-docker run --gpus all --shm-size 1g -e HUGGING_FACE_HUB_TOKEN=$token -p 8080:80 -v $volume:/data ghcr.io/huggingface/text-generation-inference:1.0.1 --model-id $model
+docker run --gpus all --shm-size 1g -e HUGGING_FACE_HUB_TOKEN=$token -p 8080:80 -v $volume:/data ghcr.io/huggingface/text-generation-inference:1.0.3 --model-id $model
 ```

 ### A note on Shared Memory (shm)

@@ -37,6 +37,7 @@ pub(crate) async fn generation_task(
     batch_size: Vec<u32>,
     sequence_length: u32,
     decode_length: u32,
+    top_n_tokens: Option<u32>,
     n_runs: usize,
     warmups: usize,
     parameters: NextTokenChooserParameters,
@@ -48,7 +49,7 @@ pub(crate) async fn generation_task(
     // End task if a message is received on shutdown_receiver
     // _shutdown_guard_sender will be dropped once the task is finished
     tokio::select! {
-        res = generate_runs(tokenizer, batch_size, sequence_length, decode_length, n_runs, warmups, parameters, client, run_sender.clone()) => {
+        res = generate_runs(tokenizer, batch_size, sequence_length, decode_length, top_n_tokens, n_runs, warmups, parameters, client, run_sender.clone()) => {
             if let Err(err) = res {
                 run_sender.send(Err(err)).await.unwrap_or(());
             }
@@ -64,6 +65,7 @@ async fn generate_runs(
     batch_size: Vec<u32>,
     sequence_length: u32,
     decode_length: u32,
+    top_n_tokens: Option<u32>,
     n_runs: usize,
     warmups: usize,
     parameters: NextTokenChooserParameters,
@@ -82,6 +84,7 @@ async fn generate_runs(
                 b,
                 decode_length,
                 parameters.clone(),
+                top_n_tokens,
                 &mut client,
             )
             .await?;
@@ -97,6 +100,7 @@ async fn generate_runs(
                 b,
                 decode_length,
                 parameters.clone(),
+                top_n_tokens,
                 &mut client,
             )
             .await?;
@@ -130,6 +134,7 @@ async fn prefill(
     batch_size: u32,
     decode_length: u32,
     parameters: NextTokenChooserParameters,
+    top_n_tokens: Option<u32>,
     client: &mut ShardedClient,
 ) -> Result<(Prefill, CachedBatch), ClientError> {
     // Create requests
@@ -145,6 +150,7 @@ async fn prefill(
                 stop_sequences: vec![],
                 ignore_eos_token: true, // Will not stop even if a eos token is generated
             }),
+            top_n_tokens: top_n_tokens.unwrap_or(0),
         })
         .collect();


@@ -22,6 +22,7 @@ pub async fn run(
     batch_size: Vec<u32>,
     sequence_length: u32,
     decode_length: u32,
+    top_n_tokens: Option<u32>,
     n_runs: usize,
     warmups: usize,
     temperature: Option<f32>,
@@ -70,6 +71,7 @@ pub async fn run(
         batch_size.clone(),
         sequence_length,
         decode_length,
+        top_n_tokens,
         n_runs,
         warmups,
         parameters,
@@ -130,6 +132,7 @@ pub async fn run(
         tokenizer_name,
         sequence_length,
         decode_length,
+        top_n_tokens,
         n_runs,
         warmups,
         temperature,

@@ -93,6 +93,11 @@ struct Args {
     /// decoding strategies, for full doc refer to the `text-generation-server`
     #[clap(long, env)]
     do_sample: bool,
+
+    /// Generation parameter in case you want to specifically test/debug particular
+    /// decoding strategies, for full doc refer to the `text-generation-server`
+    #[clap(long, env)]
+    top_n_tokens: Option<u32>,
 }

 fn main() -> Result<(), Box<dyn std::error::Error>> {
@@ -117,6 +122,7 @@ fn main() -> Result<(), Box<dyn std::error::Error>> {
         watermark,
         do_sample,
         master_shard_uds_path,
+        top_n_tokens,
     } = args;

     let batch_size = batch_size.unwrap_or(vec![1, 2, 4, 8, 16, 32]);
@@ -173,6 +179,7 @@ fn main() -> Result<(), Box<dyn std::error::Error>> {
                 batch_size,
                 sequence_length,
                 decode_length,
+                top_n_tokens,
                 runs,
                 warmups,
                 temperature,

@@ -7,6 +7,7 @@ pub(crate) fn parameters_table(
     tokenizer_name: String,
     sequence_length: u32,
     decode_length: u32,
+    top_n_tokens: Option<u32>,
     n_runs: usize,
     warmups: usize,
     temperature: Option<f32>,
@@ -24,6 +25,7 @@ pub(crate) fn parameters_table(
     builder.push_record(["Model", &tokenizer_name]);
     builder.push_record(["Sequence Length", &sequence_length.to_string()]);
     builder.push_record(["Decode Length", &decode_length.to_string()]);
+    builder.push_record(["Top N Tokens", &format!("{top_n_tokens:?}")]);
     builder.push_record(["N Runs", &n_runs.to_string()]);
     builder.push_record(["Warmups", &warmups.to_string()]);
     builder.push_record(["Temperature", &format!("{temperature:?}")]);

@@ -12,7 +12,7 @@ repository = "https://github.com/huggingface/text-generation-inference"

 [tool.poetry.dependencies]
 python = "^3.7"
-pydantic = "^1.10"
+pydantic = "> 1.10, < 3"
 aiohttp = "^3.8"
 huggingface-hub = ">= 0.12, < 1.0"


@@ -12,7 +12,7 @@
 # See the License for the specific language governing permissions and
 # limitations under the License.

-__version__ = "0.3.0"
+__version__ = "0.6.0"

 from text_generation.client import Client, AsyncClient
 from text_generation.inference_api import InferenceAPIClient, InferenceAPIAsyncClient

@@ -75,6 +75,7 @@ class Client:
         typical_p: Optional[float] = None,
         watermark: bool = False,
         decoder_input_details: bool = False,
+        top_n_tokens: Optional[int] = None,
     ) -> Response:
         """
         Given a prompt, generate the following text
@@ -113,6 +114,8 @@ class Client:
                 Watermarking with [A Watermark for Large Language Models](https://arxiv.org/abs/2301.10226)
             decoder_input_details (`bool`):
                 Return the decoder input token logprobs and ids
+            top_n_tokens (`int`):
+                Return the `n` most likely tokens at each step

         Returns:
             Response: generated response
@@ -134,6 +137,7 @@ class Client:
             typical_p=typical_p,
             watermark=watermark,
             decoder_input_details=decoder_input_details,
+            top_n_tokens=top_n_tokens
         )
         request = Request(inputs=prompt, stream=False, parameters=parameters)

@@ -164,6 +168,7 @@ class Client:
         truncate: Optional[int] = None,
         typical_p: Optional[float] = None,
         watermark: bool = False,
+        top_n_tokens: Optional[int] = None,
     ) -> Iterator[StreamResponse]:
         """
         Given a prompt, generate the following stream of tokens
@@ -198,6 +203,8 @@ class Client:
                 See [Typical Decoding for Natural Language Generation](https://arxiv.org/abs/2202.00666) for more information
             watermark (`bool`):
                 Watermarking with [A Watermark for Large Language Models](https://arxiv.org/abs/2301.10226)
+            top_n_tokens (`int`):
+                Return the `n` most likely tokens at each step

         Returns:
             Iterator[StreamResponse]: stream of generated tokens
@@ -219,6 +226,7 @@ class Client:
             truncate=truncate,
             typical_p=typical_p,
             watermark=watermark,
+            top_n_tokens=top_n_tokens,
         )
         request = Request(inputs=prompt, stream=True, parameters=parameters)

@@ -317,6 +325,7 @@ class AsyncClient:
         typical_p: Optional[float] = None,
         watermark: bool = False,
         decoder_input_details: bool = False,
+        top_n_tokens: Optional[int] = None,
     ) -> Response:
         """
         Given a prompt, generate the following text asynchronously
@@ -355,6 +364,8 @@ class AsyncClient:
                 Watermarking with [A Watermark for Large Language Models](https://arxiv.org/abs/2301.10226)
             decoder_input_details (`bool`):
                 Return the decoder input token logprobs and ids
+            top_n_tokens (`int`):
+                Return the `n` most likely tokens at each step

         Returns:
             Response: generated response
@@ -376,6 +387,7 @@ class AsyncClient:
             truncate=truncate,
             typical_p=typical_p,
             watermark=watermark,
+            top_n_tokens=top_n_tokens,
         )
         request = Request(inputs=prompt, stream=False, parameters=parameters)

@@ -404,6 +416,7 @@ class AsyncClient:
         truncate: Optional[int] = None,
         typical_p: Optional[float] = None,
         watermark: bool = False,
+        top_n_tokens: Optional[int] = None,
     ) -> AsyncIterator[StreamResponse]:
         """
         Given a prompt, generate the following stream of tokens asynchronously
@@ -438,6 +451,8 @@ class AsyncClient:
                 See [Typical Decoding for Natural Language Generation](https://arxiv.org/abs/2202.00666) for more information
             watermark (`bool`):
                 Watermarking with [A Watermark for Large Language Models](https://arxiv.org/abs/2301.10226)
+            top_n_tokens (`int`):
+                Return the `n` most likely tokens at each step

         Returns:
             AsyncIterator[StreamResponse]: stream of generated tokens
@@ -459,6 +474,7 @@ class AsyncClient:
             truncate=truncate,
             typical_p=typical_p,
             watermark=watermark,
+            top_n_tokens=top_n_tokens,
         )
         request = Request(inputs=prompt, stream=True, parameters=parameters)

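As a rough illustration of what the new `top_n_tokens` argument changes on the wire, the sketch below assembles a request body with the same three top-level fields the client passes to `Request` (`inputs`, `parameters`, `stream`). The helper name `build_generate_payload` is hypothetical and not part of the client, and omitting unset parameters from the body is an assumption made here for brevity.

```python
import json
from typing import Any, Dict, Optional


def build_generate_payload(
    prompt: str,
    max_new_tokens: int = 20,
    top_n_tokens: Optional[int] = None,
    stream: bool = False,
) -> Dict[str, Any]:
    """Hypothetical helper: build a body shaped like the client's `Request`."""
    parameters: Dict[str, Any] = {"max_new_tokens": max_new_tokens}
    if top_n_tokens is not None:
        # Only sent when the caller asked for the n most likely tokens per step.
        parameters["top_n_tokens"] = top_n_tokens
    return {"inputs": prompt, "parameters": parameters, "stream": stream}


payload = build_generate_payload("What is Deep Learning?", top_n_tokens=5)
print(json.dumps(payload, indent=2))
```

Leaving `top_n_tokens` as `None` keeps the body identical to what it would have been before this change, which matches the `Optional[int] = None` default in the signatures above.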

@@ -18,27 +18,29 @@ class Parameters(BaseModel):
     # Stop generating tokens if a member of `stop_sequences` is generated
     stop: List[str] = []
     # Random sampling seed
-    seed: Optional[int]
+    seed: Optional[int] = None
     # The value used to module the logits distribution.
-    temperature: Optional[float]
+    temperature: Optional[float] = None
     # The number of highest probability vocabulary tokens to keep for top-k-filtering.
-    top_k: Optional[int]
+    top_k: Optional[int] = None
     # If set to < 1, only the smallest set of most probable tokens with probabilities that add up to `top_p` or
     # higher are kept for generation.
-    top_p: Optional[float]
+    top_p: Optional[float] = None
     # truncate inputs tokens to the given size
-    truncate: Optional[int]
+    truncate: Optional[int] = None
     # Typical Decoding mass
     # See [Typical Decoding for Natural Language Generation](https://arxiv.org/abs/2202.00666) for more information
-    typical_p: Optional[float]
+    typical_p: Optional[float] = None
     # Generate best_of sequences and return the one if the highest token logprobs
-    best_of: Optional[int]
+    best_of: Optional[int] = None
     # Watermarking with [A Watermark for Large Language Models](https://arxiv.org/abs/2301.10226)
     watermark: bool = False
     # Get generation details
     details: bool = False
     # Get decoder input token logprobs and ids
     decoder_input_details: bool = False
+    # Return the N most likely tokens at each step
+    top_n_tokens: Optional[int]

     @validator("best_of")
     def valid_best_of(cls, field_value, values):
@@ -101,12 +103,18 @@ class Parameters(BaseModel):
             raise ValidationError("`typical_p` must be > 0.0 and < 1.0")
         return v

+    @validator("top_n_tokens")
+    def valid_top_n_tokens(cls, v):
+        if v is not None and v <= 0:
+            raise ValidationError("`top_n_tokens` must be strictly positive")
+        return v
+

 class Request(BaseModel):
     # Prompt
     inputs: str
     # Generation parameters
-    parameters: Optional[Parameters]
+    parameters: Optional[Parameters] = None
     # Whether to stream output tokens
     stream: bool = False

@@ -125,9 +133,7 @@ class Request(BaseModel):
             and parameters.best_of > 1
             and field_value
         ):
-            raise ValidationError(
-                "`best_of` != 1 is not supported when `stream` == True"
-            )
+            raise ValidationError("`best_of` != 1 is not supported when `stream` == True")
         return field_value


@@ -139,7 +145,7 @@ class InputToken(BaseModel):
     text: str
     # Logprob
     # Optional since the logprob of the first token cannot be computed
-    logprob: Optional[float]
+    logprob: Optional[float] = None


 # Generated tokens
@@ -174,11 +180,13 @@ class BestOfSequence(BaseModel):
     # Number of generated tokens
     generated_tokens: int
     # Sampling seed if sampling was activated
-    seed: Optional[int]
+    seed: Optional[int] = None
     # Decoder input tokens, empty if decoder_input_details is False
     prefill: List[InputToken]
     # Generated tokens
     tokens: List[Token]
+    # Most likely tokens
+    top_tokens: Optional[List[List[Token]]]


 # `generate` details
@@ -188,13 +196,15 @@ class Details(BaseModel):
     # Number of generated tokens
     generated_tokens: int
     # Sampling seed if sampling was activated
-    seed: Optional[int]
+    seed: Optional[int] = None
     # Decoder input tokens, empty if decoder_input_details is False
     prefill: List[InputToken]
     # Generated tokens
     tokens: List[Token]
+    # Most likely tokens
+    top_tokens: Optional[List[List[Token]]]
     # Additional sequences when using the `best_of` parameter
-    best_of_sequences: Optional[List[BestOfSequence]]
+    best_of_sequences: Optional[List[BestOfSequence]] = None


 # `generate` return value
@@ -212,19 +222,21 @@ class StreamDetails(BaseModel):
     # Number of generated tokens
     generated_tokens: int
     # Sampling seed if sampling was activated
-    seed: Optional[int]
+    seed: Optional[int] = None


 # `generate_stream` return value
 class StreamResponse(BaseModel):
     # Generated token
     token: Token
+    # Most likely tokens
+    top_tokens: Optional[List[Token]]
     # Complete generated text
     # Only available when the generation is finished
-    generated_text: Optional[str]
+    generated_text: Optional[str] = None
     # Generation details
     # Only available when the generation is finished
-    details: Optional[StreamDetails]
+    details: Optional[StreamDetails] = None


 # Inference API currently deployed model
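The new `top_tokens` field on `Details` is typed `Optional[List[List[Token]]]`, which reads as one inner list of candidates per generated step. The sketch below shows one way a caller might walk that shape. It assumes `top_tokens[i]` lines up with `tokens[i]`, uses a local `Token` dataclass as a stand-in for the client's model, and the sample values (including the helper name `alternatives_per_step`) are made up for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Token:
    """Local stand-in for the client's `Token` model (not the real class)."""
    id: int
    text: str
    logprob: float
    special: bool = False


def alternatives_per_step(
    tokens: List[Token], top_tokens: Optional[List[List[Token]]]
) -> List[List[str]]:
    """Return the candidate texts for each generated step."""
    if top_tokens is None:
        # `top_n_tokens` was not requested, so there are no candidates to show.
        return [[] for _ in tokens]
    return [[candidate.text for candidate in step] for step in top_tokens]


# Made-up values for illustration only.
tokens = [Token(319, " A", -0.9), Token(696, " ro", -1.2)]
top_tokens = [
    [Token(319, " A", -0.9), Token(450, " The", -1.1)],
    [Token(696, " ro", -1.2), Token(521, " ch", -1.4)],
]
for tok, alts in zip(tokens, alternatives_per_step(tokens, top_tokens)):
    print(repr(tok.text), "->", alts)
```

Handling `None` explicitly matters because the field stays unset whenever the request did not ask for top tokens.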

@@ -10,7 +10,7 @@
       "name": "Apache 2.0",
       "url": "https://www.apache.org/licenses/LICENSE-2.0"
     },
-    "version": "1.0.1"
+    "version": "1.0.3"
   },
   "paths": {
     "/": {

@@ -23,4 +23,6 @@
     title: Streaming
   - local: conceptual/safetensors
     title: Safetensors
+  - local: conceptual/flash_attention
+    title: Flash Attention
   title: Conceptual Guides

@@ -75,6 +75,81 @@ To serve both ChatUI and TGI in same environment, simply add your own endpoints



+## Gradio
+
+Gradio is a Python library that helps you build web applications for your machine learning models with a few lines of code. It has a `ChatInterface` wrapper that helps create neat UIs for chatbots. Let's take a look at how to create a chatbot with streaming mode using TGI and Gradio. Let's install Gradio and Hub Python library first.
+
+```bash
+pip install huggingface-hub gradio
+```
+
+Assume you are serving your model on port 8080, we will query through [InferenceClient](consuming_tgi#inference-client).
+
+```python
+import gradio as gr
+from huggingface_hub import InferenceClient
+
+client = InferenceClient(model="http://127.0.0.1:8080")
+
+def inference(message, history):
+    partial_message = ""
+    for token in client.text_generation(message, max_new_tokens=20, stream=True):
+        partial_message += token
+        yield partial_message
+
+gr.ChatInterface(
+    inference,
+    chatbot=gr.Chatbot(height=300),
+    textbox=gr.Textbox(placeholder="Chat with me!", container=False, scale=7),
+    description="This is the demo for Gradio UI consuming TGI endpoint with LLaMA 7B-Chat model.",
+    title="Gradio 🤝 TGI",
+    examples=["Are tomatoes vegetables?"],
+    retry_btn="Retry",
+    undo_btn="Undo",
+    clear_btn="Clear",
+).queue().launch()
+```
+
+The UI looks like this 👇
+
+<div class="flex justify-center">
+    <img
+        class="block dark:hidden"
+        src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/tgi/gradio-tgi.png"
+    />
+    <img
+        class="hidden dark:block"
+        src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/tgi/gradio-tgi-dark.png"
+    />
+</div>
+
+You can try the demo directly here 👇
+
+<div class="block dark:hidden">
+    <iframe
+        src="https://merve-gradio-tgi-2.hf.space?__theme=light"
+        width="850"
+        height="750"
+    ></iframe>
+</div>
+<div class="hidden dark:block">
+    <iframe
+        src="https://merve-gradio-tgi-2.hf.space?__theme=dark"
+        width="850"
+        height="750"
+    ></iframe>
+</div>
+
+
+You can disable streaming mode using `return` instead of `yield` in your inference function, like below.
+
+```python
+def inference(message, history):
+    return client.text_generation(message, max_new_tokens=20)
+```
+
+You can read more about how to customize a `ChatInterface` [here](https://www.gradio.app/guides/creating-a-chatbot-fast).
+
 ## API documentation

 You can consult the OpenAPI documentation of the `text-generation-inference` REST API using the `/docs` route. The Swagger UI is also available [here](https://huggingface.github.io/text-generation-inference).
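The streaming callback in the Gradio section above yields the message accumulated so far after each token, rather than the token alone. That pattern can be tried without a running server, as in the minimal sketch below. Here a plain list stands in for `client.text_generation(..., stream=True)`, and the name `accumulate_stream` and the sample tokens are hypothetical.

```python
from typing import Iterable, Iterator


def accumulate_stream(token_stream: Iterable[str]) -> Iterator[str]:
    """Yield the growing message after each token, like the Gradio callback."""
    partial_message = ""
    for token in token_stream:
        partial_message += token
        yield partial_message


# A plain list stands in for the token stream returned by the client.
fake_stream = ["Tom", "atoes", " are", " fruits", "."]
for partial in accumulate_stream(fake_stream):
    print(partial)
```

Each yielded value replaces the previous one in the chat window, which is why the callback yields the running string instead of individual tokens.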

docs/source/conceptual/flash_attention.md (normal file, 12 lines)

@ -0,0 +1,12 @@

|

||||

# Flash Attention

|

||||

|

||||

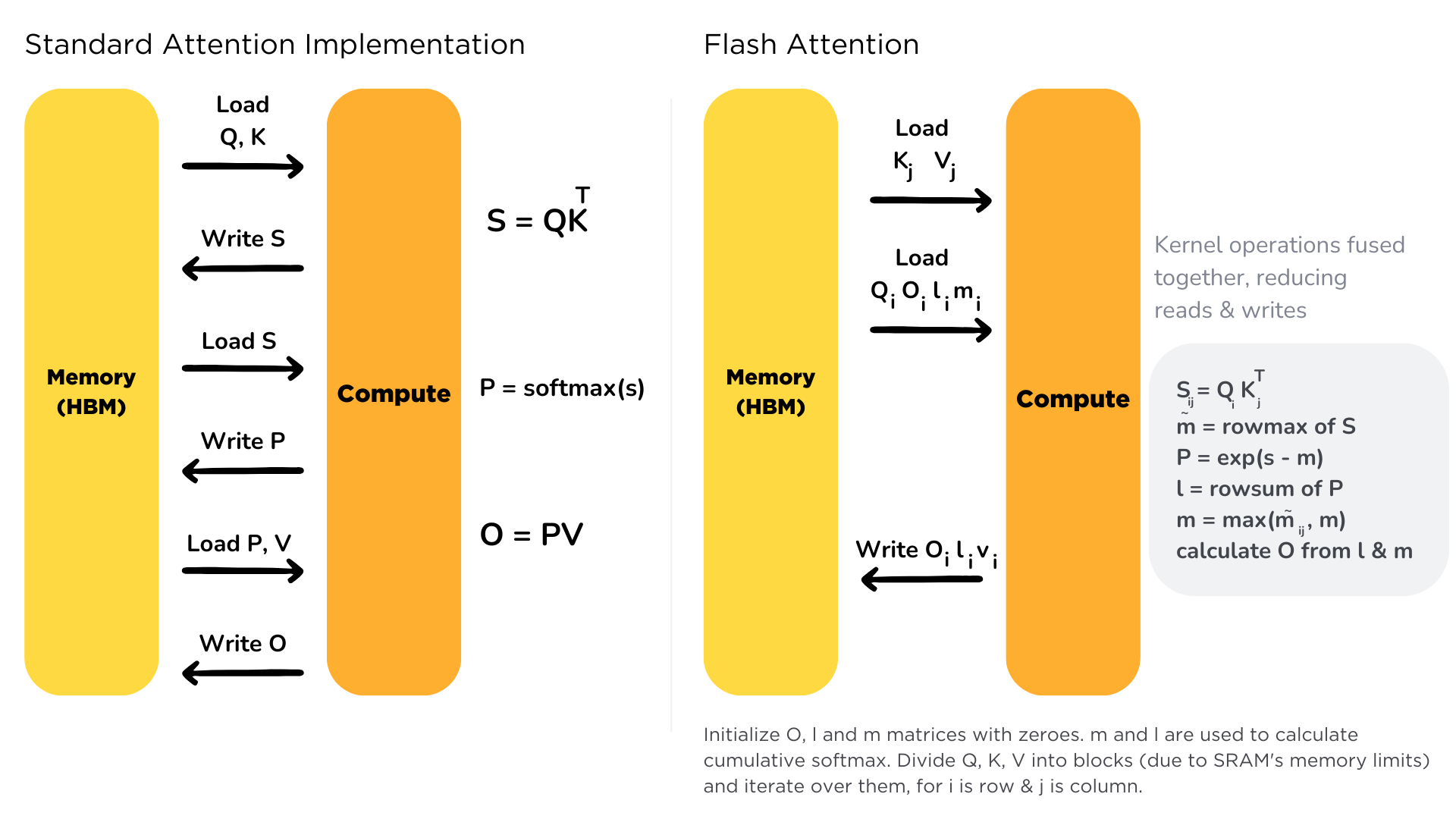

Scaling the transformer architecture is heavily bottlenecked by the self-attention mechanism, which has quadratic time and memory complexity. Recent developments in accelerator hardware mainly focus on enhancing compute capacities and not memory and transferring data between hardware. This results in attention operation having a memory bottleneck. **Flash Attention** is an attention algorithm used to reduce this problem and scale transformer-based models more efficiently, enabling faster training and inference.

|

||||

|

||||

Standard attention mechanism uses High Bandwidth Memory (HBM) to store, read and write keys, queries and values. HBM is large in memory, but slow in processing, meanwhile SRAM is smaller in memory, but faster in operations. In the standard attention implementation, the cost of loading and writing keys, queries, and values from HBM is high. It loads keys, queries, and values from HBM to GPU on-chip SRAM, performs a single step of the attention mechanism, writes it back to HBM, and repeats this for every single attention step. Instead, Flash Attention loads keys, queries, and values once, fuses the operations of the attention mechanism, and writes them back.

|

||||

|

||||

|

||||

|

||||

It is implemented for supported models. You can check out the complete list of models that support Flash Attention [here](https://github.com/huggingface/text-generation-inference/tree/main/server/text_generation_server/models), for models with flash prefix.

|

||||

|

||||

You can learn more about Flash Attention by reading the paper in this [link](https://arxiv.org/abs/2205.14135).

|

||||

|

||||
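The "load once, fuse, write back" idea described above relies on computing softmax incrementally, so that keys and values can be visited block by block without ever holding the full score row. The pure-Python sketch below illustrates that running-softmax trick and checks it against a straightforward reference. It is only a numerical illustration under simplifying assumptions (single head, no masking, lists instead of GPU memory) and is not the fused kernel itself. All function names and the sample matrices are made up for this example.

```python
import math
from typing import List

Matrix = List[List[float]]


def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def standard_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Reference: build every score row in full, softmax it, then weight V."""
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for qi in q:
        scores = [dot(qi, kj) * scale for kj in k]
        top = max(scores)
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        out.append([
            sum(w * vj[c] for w, vj in zip(weights, v)) / total
            for c in range(len(v[0]))
        ])
    return out


def tiled_attention(q: Matrix, k: Matrix, v: Matrix, block: int = 2) -> Matrix:
    """Same result, visiting K/V one block at a time with a running softmax."""
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for qi in q:
        running_max = float("-inf")
        denom = 0.0
        acc = [0.0] * len(v[0])
        for start in range(0, len(k), block):
            k_blk = k[start:start + block]
            v_blk = v[start:start + block]
            scores = [dot(qi, kj) * scale for kj in k_blk]
            new_max = max(running_max, max(scores))
            # Rescale what was accumulated under the previous maximum.
            correction = math.exp(running_max - new_max)
            denom *= correction
            acc = [a * correction for a in acc]
            for s, vj in zip(scores, v_blk):
                w = math.exp(s - new_max)
                denom += w
                acc = [a + w * x for a, x in zip(acc, vj)]
            running_max = new_max
        out.append([a / denom for a in acc])
    return out


q = [[1.0, 0.0], [0.5, 0.5]]
k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
v = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
ref, tiled = standard_attention(q, k, v), tiled_attention(q, k, v)
print(all(math.isclose(a, b, rel_tol=1e-9)
          for r, t in zip(ref, tiled) for a, b in zip(r, t)))
```

Because only a running maximum, a running denominator, and one accumulator row are kept per query, the intermediate score matrix never needs to be written out, which is the memory saving the text attributes to Flash Attention.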

@@ -121,9 +121,9 @@ If you're using the free Inference API, you can use `HfInference`. If you're usi
 We can create a `HfInferenceEndpoint` providing our endpoint URL and credential.

 ```js
-import { HfInference } from '@huggingface/inference'
+import { HfInferenceEndpoint } from '@huggingface/inference'

-const hf = new HfInference('https://YOUR_ENDPOINT.endpoints.huggingface.cloud', 'hf_YOUR_TOKEN')
+const hf = new HfInferenceEndpoint('https://YOUR_ENDPOINT.endpoints.huggingface.cloud', 'hf_YOUR_TOKEN')

 // prompt
 const prompt = 'What can you do in Nuremberg, Germany? Give me 3 Tips'
@@ -143,6 +143,4 @@ SSEs are different than:
 * Polling: where the client keeps calling the server to get data. This means that the server might return empty responses and cause overhead.
 * Webhooks: where there is a bi-directional connection. The server can send information to the client, but the client can also send data to the server after the first request. Webhooks are more complex to operate as they don’t only use HTTP.

-One of the limitations of Server-Sent Events is that they limit how many concurrent requests can handle by the server. Instead of timing out when there are too many SSE connections, TGI returns a HTTP Error with an `overloaded` error type (`huggingface_hub` returns `OverloadedError`). This allows the client to manage the overloaded server (e.g. it could display a busy error to the user or it could retry with a new request). To configure the maximum number of concurrent requests, you can specify `--max_concurrent_requests`, allowing to handle backpressure.
-
-One of the limitations of Server-Sent Events is that they limit how many concurrent requests can handle by the server. Instead of timing out when there are too many SSE connections, TGI returns an HTTP Error with an `overloaded` error type (`huggingface_hub` returns `OverloadedError`). This allows the client to manage the overloaded server (e.g., it could display a busy error to the user or retry with a new request). To configure the maximum number of concurrent requests, you can specify `--max_concurrent_requests`, allowing clients to handle backpressure.
+If there are too many requests at the same time, TGI returns an HTTP Error with an `overloaded` error type (`huggingface_hub` returns `OverloadedError`). This allows the client to manage the overloaded server (e.g., it could display a busy error to the user or retry with a new request). To configure the maximum number of concurrent requests, you can specify `--max_concurrent_requests`, allowing clients to handle backpressure.
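The paragraph above mentions that a client may retry when the server reports it is overloaded. One possible shape for that is a small retry loop with exponential backoff, sketched below. The `OverloadedError` class here is a local stand-in so the example runs on its own (in real code the exception would come from the client library), and `call_with_retry`, its parameters, and the `flaky` demo function are all hypothetical.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class OverloadedError(Exception):
    """Local stand-in for the client's overloaded error."""


def call_with_retry(
    request: Callable[[], T],
    max_attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry `request` with exponential backoff while the server is overloaded."""
    for attempt in range(max_attempts):
        try:
            return request()
        except OverloadedError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: let the caller show a busy message
            sleep(base_delay * 2 ** attempt)
    raise ValueError("max_attempts must be at least 1")


# Demo: a fake request that is overloaded twice, then succeeds.
attempts = {"n": 0}


def flaky() -> str:
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise OverloadedError("overloaded")
    return "ok"


print(call_with_retry(flaky, sleep=lambda _: None))
```

Passing `sleep` as a parameter keeps the backoff testable without real waiting. A production client would likely also add jitter and cap the total wait time.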

@@ -8,7 +8,7 @@ Let's say you want to deploy [Falcon-7B Instruct](https://huggingface.co/tiiuae/
 model=tiiuae/falcon-7b-instruct
 volume=$PWD/data # share a volume with the Docker container to avoid downloading weights every run

-docker run --gpus all --shm-size 1g -p 8080:80 -v $volume:/data ghcr.io/huggingface/text-generation-inference:1.0.1 --model-id $model
+docker run --gpus all --shm-size 1g -p 8080:80 -v $volume:/data ghcr.io/huggingface/text-generation-inference:1.0.3 --model-id $model
 ```

 <Tip warning={true}>
@@ -85,7 +85,7 @@ curl 127.0.0.1:8080/generate \
 To see all possible deploy flags and options, you can use the `--help` flag. It's possible to configure the number of shards, quantization, generation parameters, and more.

 ```bash
-docker run ghcr.io/huggingface/text-generation-inference:1.0.1 --help
+docker run ghcr.io/huggingface/text-generation-inference:1.0.3 --help
 ```

 </Tip>

@@ -11,52 +11,52 @@
       },
       {
         "id": 4911,
-        "logprob": -5.3632812,
+        "logprob": -5.7773438,
         "text": "User"
       },
       {
         "id": 29901,
-        "logprob": -0.00762558,
+        "logprob": -0.0069999695,
         "text": ":"
       },
       {
         "id": 32000,
-        "logprob": -0.7739258,
+        "logprob": -0.8125,
         "text": "<fake_token_around_image>"
       },
       {
         "id": 32001,
-        "logprob": -9.775162e-05,
+        "logprob": -6.651878e-05,
         "text": "<image>"
       },
       {
         "id": 32000,
-        "logprob": -1.1920929e-07,
+        "logprob": -3.5762787e-07,
         "text": "<fake_token_around_image>"
       },
       {
         "id": 1815,
-        "logprob": -4.4140625,
+        "logprob": -4.2265625,
         "text": "Can"
       },
       {
         "id": 366,
-        "logprob": -0.01436615,
+        "logprob": -0.013977051,
         "text": "you"
       },
       {
         "id": 2649,
-        "logprob": -4.9414062,
+        "logprob": -4.4375,
         "text": "tell"
       },
       {
         "id": 592,
-        "logprob": -0.3005371,
+        "logprob": -0.29077148,
         "text": "me"
       },
       {
         "id": 263,
-        "logprob": -3.5703125,
+        "logprob": -4.2109375,
         "text": "a"
       },
       {
@@ -66,37 +66,37 @@
       },
       {
         "id": 3273,
-        "logprob": -1.9111328,
+        "logprob": -1.8671875,
         "text": "short"
       },
       {
         "id": 5828,
-        "logprob": -0.28881836,
+        "logprob": -0.26586914,
         "text": "story"
       },
       {
         "id": 2729,
-        "logprob": -3.4179688,
+        "logprob": -3.7460938,
         "text": "based"
       },
       {
         "id": 373,
-        "logprob": -0.00056886673,
+        "logprob": -0.0005350113,
         "text": "on"
       },
       {
         "id": 278,
-        "logprob": -0.14123535,
+        "logprob": -0.13867188,
         "text": "the"
       },
       {
         "id": 1967,
-        "logprob": -0.053985596,
+        "logprob": -0.06842041,
         "text": "image"
       },
       {
         "id": 29973,
-        "logprob": -0.15771484,
+        "logprob": -0.15319824,
         "text": "?"
       }
     ],
@@ -104,25 +104,25 @@
     "tokens": [
       {
         "id": 32002,
-        "logprob": -0.004295349,
+        "logprob": -0.0019445419,
         "special": true,
         "text": "<end_of_utterance>"
       },
       {
         "id": 29871,
-        "logprob": -7.43866e-05,
+        "logprob": -8.404255e-05,
         "special": false,
         "text": " "
       },
       {
         "id": 13,
-        "logprob": -2.3126602e-05,
+        "logprob": -1.7881393e-05,
         "special": false,
         "text": "\n"
       },
       {
         "id": 7900,
-        "logprob": -3.9339066e-06,
+        "logprob": -3.0994415e-06,
         "special": false,
         "text": "Ass"
       },
@@ -134,35 +134,36 @@
       },
       {
         "id": 29901,
-        "logprob": -2.6226044e-06,
+        "logprob": -3.2186508e-06,
         "special": false,
         "text": ":"
       },
       {
         "id": 319,
-        "logprob": -0.87841797,
+        "logprob": -0.9057617,
         "special": false,
         "text": " A"
       },
       {
-        "id": 521,
-        "logprob": -1.3837891,
+        "id": 696,
+        "logprob": -1.2314453,
         "special": false,
-        "text": " ch"
+        "text": " ro"
       },
       {
-        "id": 21475,
-        "logprob": -0.00051641464,
+        "id": 15664,
+        "logprob": -0.00024914742,
         "special": false,
-        "text": "icken"
+        "text": "oster"
       },
       {
-        "id": 338,
-        "logprob": -1.1435547,
+        "id": 15028,
+        "logprob": -1.1621094,
         "special": false,
-        "text": " is"
+        "text": " stands"
       }
-    ]
+    ],
+    "top_tokens": null
   },
-  "generated_text": "\nAssistant: A chicken is"
+  "generated_text": "\nAssistant: A rooster stands"
 }
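The `logprob` values in the snapshot above are easier to compare once converted back to probabilities. The sketch below does that under the assumption that they are natural-log probabilities, as is usual for log-softmax outputs. The helper name `logprob_to_prob` is hypothetical, and the two sample values are copied from the updated snapshot lines.

```python
import math


def logprob_to_prob(logprob: float) -> float:
    """Convert a natural-log probability back to a probability in [0, 1]."""
    return math.exp(logprob)


# Values copied from the updated snapshot above.
for text, logprob in [("<fake_token_around_image>", -0.8125), ("Can", -4.2265625)]:
    print(f"{text!r}: p = {logprob_to_prob(logprob):.4f}")
```

Small shifts in logprob between the old and new snapshot lines correspond to small shifts in probability, which is consistent with a numerical change rather than a change in model behaviour for most prefill tokens.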

File diff suppressed because it is too large

integration-tests/poetry.lock (generated, normal file, 982 lines)

@ -0,0 +1,982 @@

|

||||

# This file is automatically @generated by Poetry 1.5.1 and should not be changed by hand.

|

||||

|

||||

[[package]]

|

||||

name = "aiohttp"

|

||||

version = "3.8.5"

|

||||

description = "Async http client/server framework (asyncio)"

|

||||

optional = false

|

||||

python-versions = ">=3.6"

|

||||

files = [

|

||||

{file = "aiohttp-3.8.5-cp310-cp310-macosx_10_9_universal2.whl", hash = "sha256:a94159871304770da4dd371f4291b20cac04e8c94f11bdea1c3478e557fbe0d8"},

|

||||

{file = "aiohttp-3.8.5-cp310-cp310-macosx_10_9_x86_64.whl", hash = "sha256:13bf85afc99ce6f9ee3567b04501f18f9f8dbbb2ea11ed1a2e079670403a7c84"},

|

||||

{file = "aiohttp-3.8.5-cp310-cp310-macosx_11_0_arm64.whl", hash = "sha256:2ce2ac5708501afc4847221a521f7e4b245abf5178cf5ddae9d5b3856ddb2f3a"},

|

||||

{file = "aiohttp-3.8.5-cp310-cp310-manylinux_2_17_aarch64.manylinux2014_aarch64.whl", hash = "sha256:96943e5dcc37a6529d18766597c491798b7eb7a61d48878611298afc1fca946c"},

|

||||

{file = "aiohttp-3.8.5-cp310-cp310-manylinux_2_17_ppc64le.manylinux2014_ppc64le.whl", hash = "sha256:2ad5c3c4590bb3cc28b4382f031f3783f25ec223557124c68754a2231d989e2b"},

|

||||

{file = "aiohttp-3.8.5-cp310-cp310-manylinux_2_17_s390x.manylinux2014_s390x.whl", hash = "sha256:0c413c633d0512df4dc7fd2373ec06cc6a815b7b6d6c2f208ada7e9e93a5061d"},

|

||||

{file = "aiohttp-3.8.5-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl", hash = "sha256:df72ac063b97837a80d80dec8d54c241af059cc9bb42c4de68bd5b61ceb37caa"},

|

||||

{file = "aiohttp-3.8.5-cp310-cp310-manylinux_2_5_i686.manylinux1_i686.manylinux_2_17_i686.manylinux2014_i686.whl", hash = "sha256:c48c5c0271149cfe467c0ff8eb941279fd6e3f65c9a388c984e0e6cf57538e14"},

|

||||

{file = "aiohttp-3.8.5-cp310-cp310-musllinux_1_1_aarch64.whl", hash = "sha256:368a42363c4d70ab52c2c6420a57f190ed3dfaca6a1b19afda8165ee16416a82"},

|

||||

{file = "aiohttp-3.8.5-cp310-cp310-musllinux_1_1_i686.whl", hash = "sha256:7607ec3ce4993464368505888af5beb446845a014bc676d349efec0e05085905"},

|

||||

{file = "aiohttp-3.8.5-cp310-cp310-musllinux_1_1_ppc64le.whl", hash = "sha256:0d21c684808288a98914e5aaf2a7c6a3179d4df11d249799c32d1808e79503b5"},

|

||||

{file = "aiohttp-3.8.5-cp310-cp310-musllinux_1_1_s390x.whl", hash = "sha256:312fcfbacc7880a8da0ae8b6abc6cc7d752e9caa0051a53d217a650b25e9a691"},

|

||||

{file = "aiohttp-3.8.5-cp310-cp310-musllinux_1_1_x86_64.whl", hash = "sha256:ad093e823df03bb3fd37e7dec9d4670c34f9e24aeace76808fc20a507cace825"},

|

||||

{file = "aiohttp-3.8.5-cp310-cp310-win32.whl", hash = "sha256:33279701c04351a2914e1100b62b2a7fdb9a25995c4a104259f9a5ead7ed4802"},

|

||||

{file = "aiohttp-3.8.5-cp310-cp310-win_amd64.whl", hash = "sha256:6e4a280e4b975a2e7745573e3fc9c9ba0d1194a3738ce1cbaa80626cc9b4f4df"},

|

||||

{file = "aiohttp-3.8.5-cp311-cp311-macosx_10_9_universal2.whl", hash = "sha256:ae871a964e1987a943d83d6709d20ec6103ca1eaf52f7e0d36ee1b5bebb8b9b9"},

|

||||

{file = "aiohttp-3.8.5-cp311-cp311-macosx_10_9_x86_64.whl", hash = "sha256:461908b2578955045efde733719d62f2b649c404189a09a632d245b445c9c975"},

|

||||

{file = "aiohttp-3.8.5-cp311-cp311-macosx_11_0_arm64.whl", hash = "sha256:72a860c215e26192379f57cae5ab12b168b75db8271f111019509a1196dfc780"},

|

||||

{file = "aiohttp-3.8.5-cp311-cp311-manylinux_2_17_aarch64.manylinux2014_aarch64.whl", hash = "sha256:cc14be025665dba6202b6a71cfcdb53210cc498e50068bc088076624471f8bb9"},

|

||||

{file = "aiohttp-3.8.5-cp311-cp311-manylinux_2_17_ppc64le.manylinux2014_ppc64le.whl", hash = "sha256:8af740fc2711ad85f1a5c034a435782fbd5b5f8314c9a3ef071424a8158d7f6b"},

|

||||

{file = "aiohttp-3.8.5-cp311-cp311-manylinux_2_17_s390x.manylinux2014_s390x.whl", hash = "sha256:841cd8233cbd2111a0ef0a522ce016357c5e3aff8a8ce92bcfa14cef890d698f"},

|

||||

{file = "aiohttp-3.8.5-cp311-cp311-manylinux_2_17_x86_64.manylinux2014_x86_64.whl", hash = "sha256:5ed1c46fb119f1b59304b5ec89f834f07124cd23ae5b74288e364477641060ff"},

|

||||

{file = "aiohttp-3.8.5-cp311-cp311-manylinux_2_5_i686.manylinux1_i686.manylinux_2_17_i686.manylinux2014_i686.whl", hash = "sha256:84f8ae3e09a34f35c18fa57f015cc394bd1389bce02503fb30c394d04ee6b938"},

|

||||

{file = "aiohttp-3.8.5-cp311-cp311-musllinux_1_1_aarch64.whl", hash = "sha256:62360cb771707cb70a6fd114b9871d20d7dd2163a0feafe43fd115cfe4fe845e"},

|

||||

{file = "aiohttp-3.8.5-cp311-cp311-musllinux_1_1_i686.whl", hash = "sha256:23fb25a9f0a1ca1f24c0a371523546366bb642397c94ab45ad3aedf2941cec6a"},

|

||||

{file = "aiohttp-3.8.5-cp311-cp311-musllinux_1_1_ppc64le.whl", hash = "sha256:b0ba0d15164eae3d878260d4c4df859bbdc6466e9e6689c344a13334f988bb53"},

|

||||

{file = "aiohttp-3.8.5-cp311-cp311-musllinux_1_1_s390x.whl", hash = "sha256:5d20003b635fc6ae3f96d7260281dfaf1894fc3aa24d1888a9b2628e97c241e5"},

|

||||

{file = "aiohttp-3.8.5-cp311-cp311-musllinux_1_1_x86_64.whl", hash = "sha256:0175d745d9e85c40dcc51c8f88c74bfbaef9e7afeeeb9d03c37977270303064c"},

|

||||

{file = "aiohttp-3.8.5-cp311-cp311-win32.whl", hash = "sha256:2e1b1e51b0774408f091d268648e3d57f7260c1682e7d3a63cb00d22d71bb945"},

|

||||

{file = "aiohttp-3.8.5-cp311-cp311-win_amd64.whl", hash = "sha256:043d2299f6dfdc92f0ac5e995dfc56668e1587cea7f9aa9d8a78a1b6554e5755"},

|

||||

{file = "aiohttp-3.8.5-cp36-cp36m-macosx_10_9_x86_64.whl", hash = "sha256:cae533195e8122584ec87531d6df000ad07737eaa3c81209e85c928854d2195c"},

|

||||

{file = "aiohttp-3.8.5-cp36-cp36m-manylinux_2_17_aarch64.manylinux2014_aarch64.whl", hash = "sha256:4f21e83f355643c345177a5d1d8079f9f28b5133bcd154193b799d380331d5d3"},

|

||||

{file = "aiohttp-3.8.5-cp36-cp36m-manylinux_2_17_ppc64le.manylinux2014_ppc64le.whl", hash = "sha256:a7a75ef35f2df54ad55dbf4b73fe1da96f370e51b10c91f08b19603c64004acc"},

|

||||

{file = "aiohttp-3.8.5-cp36-cp36m-manylinux_2_17_s390x.manylinux2014_s390x.whl", hash = "sha256:2e2e9839e14dd5308ee773c97115f1e0a1cb1d75cbeeee9f33824fa5144c7634"},

|

||||

{file = "aiohttp-3.8.5-cp36-cp36m-manylinux_2_17_x86_64.manylinux2014_x86_64.whl", hash = "sha256:c44e65da1de4403d0576473e2344828ef9c4c6244d65cf4b75549bb46d40b8dd"},

|

||||

{file = "aiohttp-3.8.5-cp36-cp36m-manylinux_2_5_i686.manylinux1_i686.manylinux_2_17_i686.manylinux2014_i686.whl", hash = "sha256:78d847e4cde6ecc19125ccbc9bfac4a7ab37c234dd88fbb3c5c524e8e14da543"},

|

||||

{file = "aiohttp-3.8.5-cp36-cp36m-musllinux_1_1_aarch64.whl", hash = "sha256:c7a815258e5895d8900aec4454f38dca9aed71085f227537208057853f9d13f2"},

|

||||

{file = "aiohttp-3.8.5-cp36-cp36m-musllinux_1_1_i686.whl", hash = "sha256:8b929b9bd7cd7c3939f8bcfffa92fae7480bd1aa425279d51a89327d600c704d"},

|

||||

{file = "aiohttp-3.8.5-cp36-cp36m-musllinux_1_1_ppc64le.whl", hash = "sha256:5db3a5b833764280ed7618393832e0853e40f3d3e9aa128ac0ba0f8278d08649"},

|

||||

{file = "aiohttp-3.8.5-cp36-cp36m-musllinux_1_1_s390x.whl", hash = "sha256:a0215ce6041d501f3155dc219712bc41252d0ab76474615b9700d63d4d9292af"},

|

||||

{file = "aiohttp-3.8.5-cp36-cp36m-musllinux_1_1_x86_64.whl", hash = "sha256:fd1ed388ea7fbed22c4968dd64bab0198de60750a25fe8c0c9d4bef5abe13824"},

|

||||

{file = "aiohttp-3.8.5-cp36-cp36m-win32.whl", hash = "sha256:6e6783bcc45f397fdebc118d772103d751b54cddf5b60fbcc958382d7dd64f3e"},

|

||||

{file = "aiohttp-3.8.5-cp36-cp36m-win_amd64.whl", hash = "sha256:b5411d82cddd212644cf9360879eb5080f0d5f7d809d03262c50dad02f01421a"},

|

||||

{file = "aiohttp-3.8.5-cp37-cp37m-macosx_10_9_x86_64.whl", hash = "sha256:01d4c0c874aa4ddfb8098e85d10b5e875a70adc63db91f1ae65a4b04d3344cda"},

|

||||

{file = "aiohttp-3.8.5-cp37-cp37m-manylinux_2_17_aarch64.manylinux2014_aarch64.whl", hash = "sha256:e5980a746d547a6ba173fd5ee85ce9077e72d118758db05d229044b469d9029a"},

|

||||

{file = "aiohttp-3.8.5-cp37-cp37m-manylinux_2_17_ppc64le.manylinux2014_ppc64le.whl", hash = "sha256:2a482e6da906d5e6e653be079b29bc173a48e381600161c9932d89dfae5942ef"},

|

||||

{file = "aiohttp-3.8.5-cp37-cp37m-manylinux_2_17_s390x.manylinux2014_s390x.whl", hash = "sha256:80bd372b8d0715c66c974cf57fe363621a02f359f1ec81cba97366948c7fc873"},

|

||||

{file = "aiohttp-3.8.5-cp37-cp37m-manylinux_2_17_x86_64.manylinux2014_x86_64.whl", hash = "sha256:c1161b345c0a444ebcf46bf0a740ba5dcf50612fd3d0528883fdc0eff578006a"},

|

||||

{file = "aiohttp-3.8.5-cp37-cp37m-manylinux_2_5_i686.manylinux1_i686.manylinux_2_17_i686.manylinux2014_i686.whl", hash = "sha256:cd56db019015b6acfaaf92e1ac40eb8434847d9bf88b4be4efe5bfd260aee692"},

|

||||

{file = "aiohttp-3.8.5-cp37-cp37m-musllinux_1_1_aarch64.whl", hash = "sha256:153c2549f6c004d2754cc60603d4668899c9895b8a89397444a9c4efa282aaf4"},

|

||||

{file = "aiohttp-3.8.5-cp37-cp37m-musllinux_1_1_i686.whl", hash = "sha256:4a01951fabc4ce26ab791da5f3f24dca6d9a6f24121746eb19756416ff2d881b"},

|

||||

{file = "aiohttp-3.8.5-cp37-cp37m-musllinux_1_1_ppc64le.whl", hash = "sha256:bfb9162dcf01f615462b995a516ba03e769de0789de1cadc0f916265c257e5d8"},

|

||||

{file = "aiohttp-3.8.5-cp37-cp37m-musllinux_1_1_s390x.whl", hash = "sha256:7dde0009408969a43b04c16cbbe252c4f5ef4574ac226bc8815cd7342d2028b6"},

|

||||

{file = "aiohttp-3.8.5-cp37-cp37m-musllinux_1_1_x86_64.whl", hash = "sha256:4149d34c32f9638f38f544b3977a4c24052042affa895352d3636fa8bffd030a"},

|

||||

{file = "aiohttp-3.8.5-cp37-cp37m-win32.whl", hash = "sha256:68c5a82c8779bdfc6367c967a4a1b2aa52cd3595388bf5961a62158ee8a59e22"},

|

||||

{file = "aiohttp-3.8.5-cp37-cp37m-win_amd64.whl", hash = "sha256:2cf57fb50be5f52bda004b8893e63b48530ed9f0d6c96c84620dc92fe3cd9b9d"},

|

||||

{file = "aiohttp-3.8.5-cp38-cp38-macosx_10_9_universal2.whl", hash = "sha256:eca4bf3734c541dc4f374ad6010a68ff6c6748f00451707f39857f429ca36ced"},

|

||||

{file = "aiohttp-3.8.5-cp38-cp38-macosx_10_9_x86_64.whl", hash = "sha256:1274477e4c71ce8cfe6c1ec2f806d57c015ebf84d83373676036e256bc55d690"},

|

||||

{file = "aiohttp-3.8.5-cp38-cp38-macosx_11_0_arm64.whl", hash = "sha256:28c543e54710d6158fc6f439296c7865b29e0b616629767e685a7185fab4a6b9"},

|

||||

{file = "aiohttp-3.8.5-cp38-cp38-manylinux_2_17_aarch64.manylinux2014_aarch64.whl", hash = "sha256:910bec0c49637d213f5d9877105d26e0c4a4de2f8b1b29405ff37e9fc0ad52b8"},

|

||||

{file = "aiohttp-3.8.5-cp38-cp38-manylinux_2_17_ppc64le.manylinux2014_ppc64le.whl", hash = "sha256:5443910d662db951b2e58eb70b0fbe6b6e2ae613477129a5805d0b66c54b6cb7"},

|

||||

{file = "aiohttp-3.8.5-cp38-cp38-manylinux_2_17_s390x.manylinux2014_s390x.whl", hash = "sha256:2e460be6978fc24e3df83193dc0cc4de46c9909ed92dd47d349a452ef49325b7"},

|

||||

{file = "aiohttp-3.8.5-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl", hash = "sha256:fb1558def481d84f03b45888473fc5a1f35747b5f334ef4e7a571bc0dfcb11f8"},

|

||||

{file = "aiohttp-3.8.5-cp38-cp38-manylinux_2_5_i686.manylinux1_i686.manylinux_2_17_i686.manylinux2014_i686.whl", hash = "sha256:34dd0c107799dcbbf7d48b53be761a013c0adf5571bf50c4ecad5643fe9cfcd0"},

|

||||

{file = "aiohttp-3.8.5-cp38-cp38-musllinux_1_1_aarch64.whl", hash = "sha256:aa1990247f02a54185dc0dff92a6904521172a22664c863a03ff64c42f9b5410"},

|

||||

{file = "aiohttp-3.8.5-cp38-cp38-musllinux_1_1_i686.whl", hash = "sha256:0e584a10f204a617d71d359fe383406305a4b595b333721fa50b867b4a0a1548"},

|

||||

{file = "aiohttp-3.8.5-cp38-cp38-musllinux_1_1_ppc64le.whl", hash = "sha256:a3cf433f127efa43fee6b90ea4c6edf6c4a17109d1d037d1a52abec84d8f2e42"},

|

||||

{file = "aiohttp-3.8.5-cp38-cp38-musllinux_1_1_s390x.whl", hash = "sha256:c11f5b099adafb18e65c2c997d57108b5bbeaa9eeee64a84302c0978b1ec948b"},

|

||||

{file = "aiohttp-3.8.5-cp38-cp38-musllinux_1_1_x86_64.whl", hash = "sha256:84de26ddf621d7ac4c975dbea4c945860e08cccde492269db4e1538a6a6f3c35"},

|

||||

{file = "aiohttp-3.8.5-cp38-cp38-win32.whl", hash = "sha256:ab88bafedc57dd0aab55fa728ea10c1911f7e4d8b43e1d838a1739f33712921c"},

|

||||

{file = "aiohttp-3.8.5-cp38-cp38-win_amd64.whl", hash = "sha256:5798a9aad1879f626589f3df0f8b79b3608a92e9beab10e5fda02c8a2c60db2e"},

|

||||

{file = "aiohttp-3.8.5-cp39-cp39-macosx_10_9_universal2.whl", hash = "sha256:a6ce61195c6a19c785df04e71a4537e29eaa2c50fe745b732aa937c0c77169f3"},

|

||||

{file = "aiohttp-3.8.5-cp39-cp39-macosx_10_9_x86_64.whl", hash = "sha256:773dd01706d4db536335fcfae6ea2440a70ceb03dd3e7378f3e815b03c97ab51"},

|

||||

{file = "aiohttp-3.8.5-cp39-cp39-macosx_11_0_arm64.whl", hash = "sha256:f83a552443a526ea38d064588613aca983d0ee0038801bc93c0c916428310c28"},

|

||||

{file = "aiohttp-3.8.5-cp39-cp39-manylinux_2_17_aarch64.manylinux2014_aarch64.whl", hash = "sha256:1f7372f7341fcc16f57b2caded43e81ddd18df53320b6f9f042acad41f8e049a"},

|

||||

{file = "aiohttp-3.8.5-cp39-cp39-manylinux_2_17_ppc64le.manylinux2014_ppc64le.whl", hash = "sha256:ea353162f249c8097ea63c2169dd1aa55de1e8fecbe63412a9bc50816e87b761"},

|

||||

{file = "aiohttp-3.8.5-cp39-cp39-manylinux_2_17_s390x.manylinux2014_s390x.whl", hash = "sha256:e5d47ae48db0b2dcf70bc8a3bc72b3de86e2a590fc299fdbbb15af320d2659de"},

|

||||

{file = "aiohttp-3.8.5-cp39-cp39-manylinux_2_17_x86_64.manylinux2014_x86_64.whl", hash = "sha256:d827176898a2b0b09694fbd1088c7a31836d1a505c243811c87ae53a3f6273c1"},

|

||||

{file = "aiohttp-3.8.5-cp39-cp39-manylinux_2_5_i686.manylinux1_i686.manylinux_2_17_i686.manylinux2014_i686.whl", hash = "sha256:3562b06567c06439d8b447037bb655ef69786c590b1de86c7ab81efe1c9c15d8"},

|

||||

{file = "aiohttp-3.8.5-cp39-cp39-musllinux_1_1_aarch64.whl", hash = "sha256:4e874cbf8caf8959d2adf572a78bba17cb0e9d7e51bb83d86a3697b686a0ab4d"},

|

||||

{file = "aiohttp-3.8.5-cp39-cp39-musllinux_1_1_i686.whl", hash = "sha256:6809a00deaf3810e38c628e9a33271892f815b853605a936e2e9e5129762356c"},

|

||||

{file = "aiohttp-3.8.5-cp39-cp39-musllinux_1_1_ppc64le.whl", hash = "sha256:33776e945d89b29251b33a7e7d006ce86447b2cfd66db5e5ded4e5cd0340585c"},

|

||||

{file = "aiohttp-3.8.5-cp39-cp39-musllinux_1_1_s390x.whl", hash = "sha256:eaeed7abfb5d64c539e2db173f63631455f1196c37d9d8d873fc316470dfbacd"},

|

||||

{file = "aiohttp-3.8.5-cp39-cp39-musllinux_1_1_x86_64.whl", hash = "sha256:e91d635961bec2d8f19dfeb41a539eb94bd073f075ca6dae6c8dc0ee89ad6f91"},

|

||||

{file = "aiohttp-3.8.5-cp39-cp39-win32.whl", hash = "sha256:00ad4b6f185ec67f3e6562e8a1d2b69660be43070bd0ef6fcec5211154c7df67"},

|

||||

{file = "aiohttp-3.8.5-cp39-cp39-win_amd64.whl", hash = "sha256:c0a9034379a37ae42dea7ac1e048352d96286626251862e448933c0f59cbd79c"},

|

||||

{file = "aiohttp-3.8.5.tar.gz", hash = "sha256:b9552ec52cc147dbf1944ac7ac98af7602e51ea2dcd076ed194ca3c0d1c7d0bc"},

|

||||

]

|

||||

|

||||

[package.dependencies]

|

||||

aiosignal = ">=1.1.2"

|

||||

async-timeout = ">=4.0.0a3,<5.0"

|

||||

attrs = ">=17.3.0"

|

||||

charset-normalizer = ">=2.0,<4.0"

|

||||

frozenlist = ">=1.1.1"

|

||||

multidict = ">=4.5,<7.0"

|

||||

yarl = ">=1.0,<2.0"

|

||||

|

||||

[package.extras]

|

||||

speedups = ["Brotli", "aiodns", "cchardet"]

|

||||

|

||||

[[package]]

|

||||

name = "aiosignal"

|

||||

version = "1.3.1"

|

||||

description = "aiosignal: a list of registered asynchronous callbacks"

|

||||

optional = false

|

||||

python-versions = ">=3.7"

|

||||

files = [

|

||||

{file = "aiosignal-1.3.1-py3-none-any.whl", hash = "sha256:f8376fb07dd1e86a584e4fcdec80b36b7f81aac666ebc724e2c090300dd83b17"},

|

||||

{file = "aiosignal-1.3.1.tar.gz", hash = "sha256:54cd96e15e1649b75d6c87526a6ff0b6c1b0dd3459f43d9ca11d48c339b68cfc"},

|

||||

]

|

||||

|

||||

[package.dependencies]

|

||||

frozenlist = ">=1.1.0"

|

||||

|

||||

[[package]]

|

||||

name = "async-timeout"

|

||||

version = "4.0.3"

|

||||

description = "Timeout context manager for asyncio programs"

|

||||

optional = false

|

||||

python-versions = ">=3.7"

|

||||

files = [

|

||||

{file = "async-timeout-4.0.3.tar.gz", hash = "sha256:4640d96be84d82d02ed59ea2b7105a0f7b33abe8703703cd0ab0bf87c427522f"},

|

||||

{file = "async_timeout-4.0.3-py3-none-any.whl", hash = "sha256:7405140ff1230c310e51dc27b3145b9092d659ce68ff733fb0cefe3ee42be028"},

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "attrs"

|

||||

version = "23.1.0"

|

||||

description = "Classes Without Boilerplate"

|

||||

optional = false

|

||||

python-versions = ">=3.7"

|

||||

files = [

|

||||

{file = "attrs-23.1.0-py3-none-any.whl", hash = "sha256:1f28b4522cdc2fb4256ac1a020c78acf9cba2c6b461ccd2c126f3aa8e8335d04"},

|

||||

{file = "attrs-23.1.0.tar.gz", hash = "sha256:6279836d581513a26f1bf235f9acd333bc9115683f14f7e8fae46c98fc50e015"},

|

||||

]

|

||||

|

||||

[package.extras]

|

||||

cov = ["attrs[tests]", "coverage[toml] (>=5.3)"]

|

||||

dev = ["attrs[docs,tests]", "pre-commit"]

|

||||

docs = ["furo", "myst-parser", "sphinx", "sphinx-notfound-page", "sphinxcontrib-towncrier", "towncrier", "zope-interface"]

|

||||

tests = ["attrs[tests-no-zope]", "zope-interface"]

|

||||

tests-no-zope = ["cloudpickle", "hypothesis", "mypy (>=1.1.1)", "pympler", "pytest (>=4.3.0)", "pytest-mypy-plugins", "pytest-xdist[psutil]"]

|

||||

|

||||

[[package]]

|

||||

name = "certifi"

|

||||

version = "2023.7.22"

|

||||

description = "Python package for providing Mozilla's CA Bundle."

|

||||

optional = false

|

||||

python-versions = ">=3.6"

|

||||

files = [

|

||||

{file = "certifi-2023.7.22-py3-none-any.whl", hash = "sha256:92d6037539857d8206b8f6ae472e8b77db8058fec5937a1ef3f54304089edbb9"},

|

||||

{file = "certifi-2023.7.22.tar.gz", hash = "sha256:539cc1d13202e33ca466e88b2807e29f4c13049d6d87031a3c110744495cb082"},

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "charset-normalizer"

|

||||

version = "3.2.0"

|

||||

description = "The Real First Universal Charset Detector. Open, modern and actively maintained alternative to Chardet."

|

||||

optional = false

|

||||

python-versions = ">=3.7.0"

|

||||

files = [

|

||||

{file = "charset-normalizer-3.2.0.tar.gz", hash = "sha256:3bb3d25a8e6c0aedd251753a79ae98a093c7e7b471faa3aa9a93a81431987ace"},

|

||||

{file = "charset_normalizer-3.2.0-cp310-cp310-macosx_10_9_universal2.whl", hash = "sha256:0b87549028f680ca955556e3bd57013ab47474c3124dc069faa0b6545b6c9710"},

|

||||

{file = "charset_normalizer-3.2.0-cp310-cp310-macosx_10_9_x86_64.whl", hash = "sha256:7c70087bfee18a42b4040bb9ec1ca15a08242cf5867c58726530bdf3945672ed"},

|

||||

{file = "charset_normalizer-3.2.0-cp310-cp310-macosx_11_0_arm64.whl", hash = "sha256:a103b3a7069b62f5d4890ae1b8f0597618f628b286b03d4bc9195230b154bfa9"},

|

||||

{file = "charset_normalizer-3.2.0-cp310-cp310-manylinux_2_17_aarch64.manylinux2014_aarch64.whl", hash = "sha256:94aea8eff76ee6d1cdacb07dd2123a68283cb5569e0250feab1240058f53b623"},

|

||||

{file = "charset_normalizer-3.2.0-cp310-cp310-manylinux_2_17_ppc64le.manylinux2014_ppc64le.whl", hash = "sha256:db901e2ac34c931d73054d9797383d0f8009991e723dab15109740a63e7f902a"},

|

||||

{file = "charset_normalizer-3.2.0-cp310-cp310-manylinux_2_17_s390x.manylinux2014_s390x.whl", hash = "sha256:b0dac0ff919ba34d4df1b6131f59ce95b08b9065233446be7e459f95554c0dc8"},

|

||||

{file = "charset_normalizer-3.2.0-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl", hash = "sha256:193cbc708ea3aca45e7221ae58f0fd63f933753a9bfb498a3b474878f12caaad"},

|

||||

{file = "charset_normalizer-3.2.0-cp310-cp310-manylinux_2_5_i686.manylinux1_i686.manylinux_2_17_i686.manylinux2014_i686.whl", hash = "sha256:09393e1b2a9461950b1c9a45d5fd251dc7c6f228acab64da1c9c0165d9c7765c"},

|

||||

{file = "charset_normalizer-3.2.0-cp310-cp310-musllinux_1_1_aarch64.whl", hash = "sha256:baacc6aee0b2ef6f3d308e197b5d7a81c0e70b06beae1f1fcacffdbd124fe0e3"},

|

||||

{file = "charset_normalizer-3.2.0-cp310-cp310-musllinux_1_1_i686.whl", hash = "sha256:bf420121d4c8dce6b889f0e8e4ec0ca34b7f40186203f06a946fa0276ba54029"},

|

||||

{file = "charset_normalizer-3.2.0-cp310-cp310-musllinux_1_1_ppc64le.whl", hash = "sha256:c04a46716adde8d927adb9457bbe39cf473e1e2c2f5d0a16ceb837e5d841ad4f"},

|

||||

{file = "charset_normalizer-3.2.0-cp310-cp310-musllinux_1_1_s390x.whl", hash = "sha256:aaf63899c94de41fe3cf934601b0f7ccb6b428c6e4eeb80da72c58eab077b19a"},

|

||||

{file = "charset_normalizer-3.2.0-cp310-cp310-musllinux_1_1_x86_64.whl", hash = "sha256:d62e51710986674142526ab9f78663ca2b0726066ae26b78b22e0f5e571238dd"},

|

||||

{file = "charset_normalizer-3.2.0-cp310-cp310-win32.whl", hash = "sha256:04e57ab9fbf9607b77f7d057974694b4f6b142da9ed4a199859d9d4d5c63fe96"},

|

||||

{file = "charset_normalizer-3.2.0-cp310-cp310-win_amd64.whl", hash = "sha256:48021783bdf96e3d6de03a6e39a1171ed5bd7e8bb93fc84cc649d11490f87cea"},

|

||||

{file = "charset_normalizer-3.2.0-cp311-cp311-macosx_10_9_universal2.whl", hash = "sha256:4957669ef390f0e6719db3613ab3a7631e68424604a7b448f079bee145da6e09"},

|

||||

{file = "charset_normalizer-3.2.0-cp311-cp311-macosx_10_9_x86_64.whl", hash = "sha256:46fb8c61d794b78ec7134a715a3e564aafc8f6b5e338417cb19fe9f57a5a9bf2"},

|

||||

{file = "charset_normalizer-3.2.0-cp311-cp311-macosx_11_0_arm64.whl", hash = "sha256:f779d3ad205f108d14e99bb3859aa7dd8e9c68874617c72354d7ecaec2a054ac"},

|

||||

{file = "charset_normalizer-3.2.0-cp311-cp311-manylinux_2_17_aarch64.manylinux2014_aarch64.whl", hash = "sha256:f25c229a6ba38a35ae6e25ca1264621cc25d4d38dca2942a7fce0b67a4efe918"},

|

||||

{file = "charset_normalizer-3.2.0-cp311-cp311-manylinux_2_17_ppc64le.manylinux2014_ppc64le.whl", hash = "sha256:2efb1bd13885392adfda4614c33d3b68dee4921fd0ac1d3988f8cbb7d589e72a"},

|

||||

{file = "charset_normalizer-3.2.0-cp311-cp311-manylinux_2_17_s390x.manylinux2014_s390x.whl", hash = "sha256:1f30b48dd7fa1474554b0b0f3fdfdd4c13b5c737a3c6284d3cdc424ec0ffff3a"},

|

||||

{file = "charset_normalizer-3.2.0-cp311-cp311-manylinux_2_17_x86_64.manylinux2014_x86_64.whl", hash = "sha256:246de67b99b6851627d945db38147d1b209a899311b1305dd84916f2b88526c6"},

|

||||

{file = "charset_normalizer-3.2.0-cp311-cp311-manylinux_2_5_i686.manylinux1_i686.manylinux_2_17_i686.manylinux2014_i686.whl", hash = "sha256:9bd9b3b31adcb054116447ea22caa61a285d92e94d710aa5ec97992ff5eb7cf3"},

|

||||

{file = "charset_normalizer-3.2.0-cp311-cp311-musllinux_1_1_aarch64.whl", hash = "sha256:8c2f5e83493748286002f9369f3e6607c565a6a90425a3a1fef5ae32a36d749d"},

|

||||

{file = "charset_normalizer-3.2.0-cp311-cp311-musllinux_1_1_i686.whl", hash = "sha256:3170c9399da12c9dc66366e9d14da8bf7147e1e9d9ea566067bbce7bb74bd9c2"},

|

||||

{file = "charset_normalizer-3.2.0-cp311-cp311-musllinux_1_1_ppc64le.whl", hash = "sha256:7a4826ad2bd6b07ca615c74ab91f32f6c96d08f6fcc3902ceeedaec8cdc3bcd6"},

|

||||

{file = "charset_normalizer-3.2.0-cp311-cp311-musllinux_1_1_s390x.whl", hash = "sha256:3b1613dd5aee995ec6d4c69f00378bbd07614702a315a2cf6c1d21461fe17c23"},

|

||||

{file = "charset_normalizer-3.2.0-cp311-cp311-musllinux_1_1_x86_64.whl", hash = "sha256:9e608aafdb55eb9f255034709e20d5a83b6d60c054df0802fa9c9883d0a937aa"},

|

||||

{file = "charset_normalizer-3.2.0-cp311-cp311-win32.whl", hash = "sha256:f2a1d0fd4242bd8643ce6f98927cf9c04540af6efa92323e9d3124f57727bfc1"},

|

||||

{file = "charset_normalizer-3.2.0-cp311-cp311-win_amd64.whl", hash = "sha256:681eb3d7e02e3c3655d1b16059fbfb605ac464c834a0c629048a30fad2b27489"},

|

||||

{file = "charset_normalizer-3.2.0-cp37-cp37m-macosx_10_9_x86_64.whl", hash = "sha256:c57921cda3a80d0f2b8aec7e25c8aa14479ea92b5b51b6876d975d925a2ea346"},

|

||||

{file = "charset_normalizer-3.2.0-cp37-cp37m-manylinux_2_17_aarch64.manylinux2014_aarch64.whl", hash = "sha256:41b25eaa7d15909cf3ac4c96088c1f266a9a93ec44f87f1d13d4a0e86c81b982"},

|

||||

{file = "charset_normalizer-3.2.0-cp37-cp37m-manylinux_2_17_ppc64le.manylinux2014_ppc64le.whl", hash = "sha256:f058f6963fd82eb143c692cecdc89e075fa0828db2e5b291070485390b2f1c9c"},

|

||||

{file = "charset_normalizer-3.2.0-cp37-cp37m-manylinux_2_17_s390x.manylinux2014_s390x.whl", hash = "sha256:a7647ebdfb9682b7bb97e2a5e7cb6ae735b1c25008a70b906aecca294ee96cf4"},

|

||||

{file = "charset_normalizer-3.2.0-cp37-cp37m-manylinux_2_17_x86_64.manylinux2014_x86_64.whl", hash = "sha256:eef9df1eefada2c09a5e7a40991b9fc6ac6ef20b1372abd48d2794a316dc0449"},

|

||||

{file = "charset_normalizer-3.2.0-cp37-cp37m-manylinux_2_5_i686.manylinux1_i686.manylinux_2_17_i686.manylinux2014_i686.whl", hash = "sha256:e03b8895a6990c9ab2cdcd0f2fe44088ca1c65ae592b8f795c3294af00a461c3"},

|

||||

{file = "charset_normalizer-3.2.0-cp37-cp37m-musllinux_1_1_aarch64.whl", hash = "sha256:ee4006268ed33370957f55bf2e6f4d263eaf4dc3cfc473d1d90baff6ed36ce4a"},

|

||||

{file = "charset_normalizer-3.2.0-cp37-cp37m-musllinux_1_1_i686.whl", hash = "sha256:c4983bf937209c57240cff65906b18bb35e64ae872da6a0db937d7b4af845dd7"},

|

||||

{file = "charset_normalizer-3.2.0-cp37-cp37m-musllinux_1_1_ppc64le.whl", hash = "sha256:3bb7fda7260735efe66d5107fb7e6af6a7c04c7fce9b2514e04b7a74b06bf5dd"},

|

||||

{file = "charset_normalizer-3.2.0-cp37-cp37m-musllinux_1_1_s390x.whl", hash = "sha256:72814c01533f51d68702802d74f77ea026b5ec52793c791e2da806a3844a46c3"},

|

||||

{file = "charset_normalizer-3.2.0-cp37-cp37m-musllinux_1_1_x86_64.whl", hash = "sha256:70c610f6cbe4b9fce272c407dd9d07e33e6bf7b4aa1b7ffb6f6ded8e634e3592"},

|

||||

{file = "charset_normalizer-3.2.0-cp37-cp37m-win32.whl", hash = "sha256:a401b4598e5d3f4a9a811f3daf42ee2291790c7f9d74b18d75d6e21dda98a1a1"},

|

||||

{file = "charset_normalizer-3.2.0-cp37-cp37m-win_amd64.whl", hash = "sha256:c0b21078a4b56965e2b12f247467b234734491897e99c1d51cee628da9786959"},

|

||||

{file = "charset_normalizer-3.2.0-cp38-cp38-macosx_10_9_universal2.whl", hash = "sha256:95eb302ff792e12aba9a8b8f8474ab229a83c103d74a750ec0bd1c1eea32e669"},

|

||||

{file = "charset_normalizer-3.2.0-cp38-cp38-macosx_10_9_x86_64.whl", hash = "sha256:1a100c6d595a7f316f1b6f01d20815d916e75ff98c27a01ae817439ea7726329"},

|

||||

{file = "charset_normalizer-3.2.0-cp38-cp38-macosx_11_0_arm64.whl", hash = "sha256:6339d047dab2780cc6220f46306628e04d9750f02f983ddb37439ca47ced7149"},

|

||||

{file = "charset_normalizer-3.2.0-cp38-cp38-manylinux_2_17_aarch64.manylinux2014_aarch64.whl", hash = "sha256:e4b749b9cc6ee664a3300bb3a273c1ca8068c46be705b6c31cf5d276f8628a94"},

|

||||

{file = "charset_normalizer-3.2.0-cp38-cp38-manylinux_2_17_ppc64le.manylinux2014_ppc64le.whl", hash = "sha256:a38856a971c602f98472050165cea2cdc97709240373041b69030be15047691f"},

|

||||

{file = "charset_normalizer-3.2.0-cp38-cp38-manylinux_2_17_s390x.manylinux2014_s390x.whl", hash = "sha256:f87f746ee241d30d6ed93969de31e5ffd09a2961a051e60ae6bddde9ec3583aa"},

|

||||

{file = "charset_normalizer-3.2.0-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl", hash = "sha256:89f1b185a01fe560bc8ae5f619e924407efca2191b56ce749ec84982fc59a32a"},

|

||||

{file = "charset_normalizer-3.2.0-cp38-cp38-manylinux_2_5_i686.manylinux1_i686.manylinux_2_17_i686.manylinux2014_i686.whl", hash = "sha256:e1c8a2f4c69e08e89632defbfabec2feb8a8d99edc9f89ce33c4b9e36ab63037"},

|

||||

{file = "charset_normalizer-3.2.0-cp38-cp38-musllinux_1_1_aarch64.whl", hash = "sha256:2f4ac36d8e2b4cc1aa71df3dd84ff8efbe3bfb97ac41242fbcfc053c67434f46"},

|

||||

{file = "charset_normalizer-3.2.0-cp38-cp38-musllinux_1_1_i686.whl", hash = "sha256:a386ebe437176aab38c041de1260cd3ea459c6ce5263594399880bbc398225b2"},

|

||||

{file = "charset_normalizer-3.2.0-cp38-cp38-musllinux_1_1_ppc64le.whl", hash = "sha256:ccd16eb18a849fd8dcb23e23380e2f0a354e8daa0c984b8a732d9cfaba3a776d"},

|

||||

{file = "charset_normalizer-3.2.0-cp38-cp38-musllinux_1_1_s390x.whl", hash = "sha256:e6a5bf2cba5ae1bb80b154ed68a3cfa2fa00fde979a7f50d6598d3e17d9ac20c"},

|

||||

{file = "charset_normalizer-3.2.0-cp38-cp38-musllinux_1_1_x86_64.whl", hash = "sha256:45de3f87179c1823e6d9e32156fb14c1927fcc9aba21433f088fdfb555b77c10"},

|

||||

{file = "charset_normalizer-3.2.0-cp38-cp38-win32.whl", hash = "sha256:1000fba1057b92a65daec275aec30586c3de2401ccdcd41f8a5c1e2c87078706"},

|

||||

{file = "charset_normalizer-3.2.0-cp38-cp38-win_amd64.whl", hash = "sha256:8b2c760cfc7042b27ebdb4a43a4453bd829a5742503599144d54a032c5dc7e9e"},

|

||||

{file = "charset_normalizer-3.2.0-cp39-cp39-macosx_10_9_universal2.whl", hash = "sha256:855eafa5d5a2034b4621c74925d89c5efef61418570e5ef9b37717d9c796419c"},

|

||||

{file = "charset_normalizer-3.2.0-cp39-cp39-macosx_10_9_x86_64.whl", hash = "sha256:203f0c8871d5a7987be20c72442488a0b8cfd0f43b7973771640fc593f56321f"},

|

||||

{file = "charset_normalizer-3.2.0-cp39-cp39-macosx_11_0_arm64.whl", hash = "sha256:e857a2232ba53ae940d3456f7533ce6ca98b81917d47adc3c7fd55dad8fab858"},

|

||||

{file = "charset_normalizer-3.2.0-cp39-cp39-manylinux_2_17_aarch64.manylinux2014_aarch64.whl", hash = "sha256:5e86d77b090dbddbe78867a0275cb4df08ea195e660f1f7f13435a4649e954e5"},

|

||||

{file = "charset_normalizer-3.2.0-cp39-cp39-manylinux_2_17_ppc64le.manylinux2014_ppc64le.whl", hash = "sha256:c4fb39a81950ec280984b3a44f5bd12819953dc5fa3a7e6fa7a80db5ee853952"},

|

||||

{file = "charset_normalizer-3.2.0-cp39-cp39-manylinux_2_17_s390x.manylinux2014_s390x.whl", hash = "sha256:2dee8e57f052ef5353cf608e0b4c871aee320dd1b87d351c28764fc0ca55f9f4"},

|

||||

{file = "charset_normalizer-3.2.0-cp39-cp39-manylinux_2_17_x86_64.manylinux2014_x86_64.whl", hash = "sha256:8700f06d0ce6f128de3ccdbc1acaea1ee264d2caa9ca05daaf492fde7c2a7200"},

|

||||

{file = "charset_normalizer-3.2.0-cp39-cp39-manylinux_2_5_i686.manylinux1_i686.manylinux_2_17_i686.manylinux2014_i686.whl", hash = "sha256:1920d4ff15ce893210c1f0c0e9d19bfbecb7983c76b33f046c13a8ffbd570252"},

|

||||

{file = "charset_normalizer-3.2.0-cp39-cp39-musllinux_1_1_aarch64.whl", hash = "sha256:c1c76a1743432b4b60ab3358c937a3fe1341c828ae6194108a94c69028247f22"},

|

||||

{file = "charset_normalizer-3.2.0-cp39-cp39-musllinux_1_1_i686.whl", hash = "sha256:f7560358a6811e52e9c4d142d497f1a6e10103d3a6881f18d04dbce3729c0e2c"},

|

||||

{file = "charset_normalizer-3.2.0-cp39-cp39-musllinux_1_1_ppc64le.whl", hash = "sha256:c8063cf17b19661471ecbdb3df1c84f24ad2e389e326ccaf89e3fb2484d8dd7e"},

|

||||

{file = "charset_normalizer-3.2.0-cp39-cp39-musllinux_1_1_s390x.whl", hash = "sha256:cd6dbe0238f7743d0efe563ab46294f54f9bc8f4b9bcf57c3c666cc5bc9d1299"},

|

||||

{file = "charset_normalizer-3.2.0-cp39-cp39-musllinux_1_1_x86_64.whl", hash = "sha256:1249cbbf3d3b04902ff081ffbb33ce3377fa6e4c7356f759f3cd076cc138d020"},

|

||||

{file = "charset_normalizer-3.2.0-cp39-cp39-win32.whl", hash = "sha256:6c409c0deba34f147f77efaa67b8e4bb83d2f11c8806405f76397ae5b8c0d1c9"},

|

||||

{file = "charset_normalizer-3.2.0-cp39-cp39-win_amd64.whl", hash = "sha256:7095f6fbfaa55defb6b733cfeb14efaae7a29f0b59d8cf213be4e7ca0b857b80"},

|

||||

{file = "charset_normalizer-3.2.0-py3-none-any.whl", hash = "sha256:8e098148dd37b4ce3baca71fb394c81dc5d9c7728c95df695d2dca218edf40e6"},

|

||||

]

|

||||

|

||||

[[package]]

|

||||

name = "colorama"

|

||||

version = "0.4.6"

|

||||

description = "Cross-platform colored terminal text."

|

||||

optional = false

|

||||

python-versions = "!=3.0.*,!=3.1.*,!=3.2.*,!=3.3.*,!=3.4.*,!=3.5.*,!=3.6.*,>=2.7"

|

||||

files = [

|

||||

{file = "colorama-0.4.6-py2.py3-none-any.whl", hash = "sha256:4f1d9991f5acc0ca119f9d443620b77f9d6b33703e51011c16baf57afb285fc6"},

|

||||