commit

dd8cc364fe

@ -1,10 +1,11 @@

|

|||||||

FROM node:16 as builder

|

FROM node:16 as builder

|

||||||

|

|

||||||

WORKDIR /build

|

WORKDIR /build

|

||||||

|

COPY web/package.json .

|

||||||

|

RUN npm install

|

||||||

COPY ./web .

|

COPY ./web .

|

||||||

COPY ./VERSION .

|

COPY ./VERSION .

|

||||||

RUN npm install

|

RUN DISABLE_ESLINT_PLUGIN='true' REACT_APP_VERSION=$(cat VERSION) npm run build

|

||||||

RUN REACT_APP_VERSION=$(cat VERSION) npm run build

|

|

||||||

|

|

||||||

FROM golang AS builder2

|

FROM golang AS builder2

|

||||||

|

|

||||||

@ -13,9 +14,10 @@ ENV GO111MODULE=on \

|

|||||||

GOOS=linux

|

GOOS=linux

|

||||||

|

|

||||||

WORKDIR /build

|

WORKDIR /build

|

||||||

|

ADD go.mod go.sum ./

|

||||||

|

RUN go mod download

|

||||||

COPY . .

|

COPY . .

|

||||||

COPY --from=builder /build/build ./web/build

|

COPY --from=builder /build/build ./web/build

|

||||||

RUN go mod download

|

|

||||||

RUN go build -ldflags "-s -w -X 'one-api/common.Version=$(cat VERSION)' -extldflags '-static'" -o one-api

|

RUN go build -ldflags "-s -w -X 'one-api/common.Version=$(cat VERSION)' -extldflags '-static'" -o one-api

|

||||||

|

|

||||||

FROM alpine

|

FROM alpine

|

||||||

|

|||||||

31

README.en.md

@ -1,5 +1,5 @@

|

|||||||

<p align="right">

|

<p align="right">

|

||||||

<a href="./README.md">中文</a> | <strong>English</strong>

|

<a href="./README.md">中文</a> | <strong>English</strong> | <a href="./README.ja.md">日本語</a>

|

||||||

</p>

|

</p>

|

||||||

|

|

||||||

<p align="center">

|

<p align="center">

|

||||||

@ -57,15 +57,13 @@ _✨ Access all LLM through the standard OpenAI API format, easy to deploy & use

|

|||||||

> **Note**: The latest image pulled from Docker may be an `alpha` release. Specify the version manually if you require stability.

|

> **Note**: The latest image pulled from Docker may be an `alpha` release. Specify the version manually if you require stability.

|

||||||

|

|

||||||

## Features

|

## Features

|

||||||

1. Supports multiple API access channels:

|

1. Support for multiple large models:

|

||||||

+ [x] Official OpenAI channel (support proxy configuration)

|

+ [x] [OpenAI ChatGPT Series Models](https://platform.openai.com/docs/guides/gpt/chat-completions-api) (Supports [Azure OpenAI API](https://learn.microsoft.com/en-us/azure/ai-services/openai/reference))

|

||||||

+ [x] **Azure OpenAI API**

|

+ [x] [Anthropic Claude Series Models](https://anthropic.com)

|

||||||

+ [x] [API Distribute](https://api.gptjk.top/register?aff=QGxj)

|

+ [x] [Google PaLM2 Series Models](https://developers.generativeai.google)

|

||||||

+ [x] [OpenAI-SB](https://openai-sb.com)

|

+ [x] [Baidu Wenxin Yiyuan Series Models](https://cloud.baidu.com/doc/WENXINWORKSHOP/index.html)

|

||||||

+ [x] [API2D](https://api2d.com/r/197971)

|

+ [x] [Alibaba Tongyi Qianwen Series Models](https://help.aliyun.com/document_detail/2400395.html)

|

||||||

+ [x] [OhMyGPT](https://aigptx.top?aff=uFpUl2Kf)

|

+ [x] [Zhipu ChatGLM Series Models](https://bigmodel.cn)

|

||||||

+ [x] [AI Proxy](https://aiproxy.io/?i=OneAPI) (invitation code: `OneAPI`)

|

|

||||||

+ [x] Custom channel: Various third-party proxy services not included in the list

|

|

||||||

2. Supports access to multiple channels through **load balancing**.

|

2. Supports access to multiple channels through **load balancing**.

|

||||||

3. Supports **stream mode** that enables typewriter-like effect through stream transmission.

|

3. Supports **stream mode** that enables typewriter-like effect through stream transmission.

|

||||||

4. Supports **multi-machine deployment**. [See here](#multi-machine-deployment) for more details.

|

4. Supports **multi-machine deployment**. [See here](#multi-machine-deployment) for more details.

|

||||||

@ -139,7 +137,7 @@ The initial account username is `root` and password is `123456`.

|

|||||||

cd one-api/web

|

cd one-api/web

|

||||||

npm install

|

npm install

|

||||||

npm run build

|

npm run build

|

||||||

|

|

||||||

# Build the backend

|

# Build the backend

|

||||||

cd ..

|

cd ..

|

||||||

go mod download

|

go mod download

|

||||||

@ -175,7 +173,12 @@ If you encounter a blank page after deployment, refer to [#97](https://github.co

|

|||||||

<summary><strong>Deploy on Sealos</strong></summary>

|

<summary><strong>Deploy on Sealos</strong></summary>

|

||||||

<div>

|

<div>

|

||||||

|

|

||||||

Please refer to [this tutorial](https://github.com/c121914yu/FastGPT/blob/main/docs/deploy/one-api/sealos.md).

|

> Sealos supports high concurrency, dynamic scaling, and stable operations for millions of users.

|

||||||

|

|

||||||

|

> Click the button below to deploy with one click.👇

|

||||||

|

|

||||||

|

[](https://cloud.sealos.io/?openapp=system-fastdeploy?templateName=one-api)

|

||||||

|

|

||||||

|

|

||||||

</div>

|

</div>

|

||||||

</details>

|

</details>

|

||||||

@ -187,7 +190,7 @@ Please refer to [this tutorial](https://github.com/c121914yu/FastGPT/blob/main/d

|

|||||||

> Zeabur's servers are located overseas, automatically solving network issues, and the free quota is sufficient for personal usage.

|

> Zeabur's servers are located overseas, automatically solving network issues, and the free quota is sufficient for personal usage.

|

||||||

|

|

||||||

1. First, fork the code.

|

1. First, fork the code.

|

||||||

2. Go to [Zeabur](https://zeabur.com/), log in, and enter the console.

|

2. Go to [Zeabur](https://zeabur.com?referralCode=songquanpeng), log in, and enter the console.

|

||||||

3. Create a new project. In Service -> Add Service, select Marketplace, and choose MySQL. Note down the connection parameters (username, password, address, and port).

|

3. Create a new project. In Service -> Add Service, select Marketplace, and choose MySQL. Note down the connection parameters (username, password, address, and port).

|

||||||

4. Copy the connection parameters and run ```create database `one-api` ``` to create the database.

|

4. Copy the connection parameters and run ```create database `one-api` ``` to create the database.

|

||||||

5. Then, in Service -> Add Service, select Git (authorization is required for the first use) and choose your forked repository.

|

5. Then, in Service -> Add Service, select Git (authorization is required for the first use) and choose your forked repository.

|

||||||

@ -280,7 +283,7 @@ If the channel ID is not provided, load balancing will be used to distribute the

|

|||||||

+ Double-check that your interface address and API Key are correct.

|

+ Double-check that your interface address and API Key are correct.

|

||||||

|

|

||||||

## Related Projects

|

## Related Projects

|

||||||

[FastGPT](https://github.com/c121914yu/FastGPT): Build an AI knowledge base in three minutes

|

[FastGPT](https://github.com/labring/FastGPT): Knowledge question answering system based on the LLM

|

||||||

|

|

||||||

## Note

|

## Note

|

||||||

This project is an open-source project. Please use it in compliance with OpenAI's [Terms of Use](https://openai.com/policies/terms-of-use) and **applicable laws and regulations**. It must not be used for illegal purposes.

|

This project is an open-source project. Please use it in compliance with OpenAI's [Terms of Use](https://openai.com/policies/terms-of-use) and **applicable laws and regulations**. It must not be used for illegal purposes.

|

||||||

|

|||||||

298

README.ja.md

Normal file

@ -0,0 +1,298 @@

|

|||||||

|

<p align="right">

|

||||||

|

<a href="./README.md">中文</a> | <a href="./README.en.md">English</a> | <strong>日本語</strong>

|

||||||

|

</p>

|

||||||

|

|

||||||

|

<p align="center">

|

||||||

|

<a href="https://github.com/songquanpeng/one-api"><img src="https://raw.githubusercontent.com/songquanpeng/one-api/main/web/public/logo.png" width="150" height="150" alt="one-api logo"></a>

|

||||||

|

</p>

|

||||||

|

|

||||||

|

<div align="center">

|

||||||

|

|

||||||

|

# One API

|

||||||

|

|

||||||

|

_✨ 標準的な OpenAI API フォーマットを通じてすべての LLM にアクセスでき、導入と利用が容易です ✨_

|

||||||

|

|

||||||

|

</div>

|

||||||

|

|

||||||

|

<p align="center">

|

||||||

|

<a href="https://raw.githubusercontent.com/songquanpeng/one-api/main/LICENSE">

|

||||||

|

<img src="https://img.shields.io/github/license/songquanpeng/one-api?color=brightgreen" alt="license">

|

||||||

|

</a>

|

||||||

|

<a href="https://github.com/songquanpeng/one-api/releases/latest">

|

||||||

|

<img src="https://img.shields.io/github/v/release/songquanpeng/one-api?color=brightgreen&include_prereleases" alt="release">

|

||||||

|

</a>

|

||||||

|

<a href="https://hub.docker.com/repository/docker/justsong/one-api">

|

||||||

|

<img src="https://img.shields.io/docker/pulls/justsong/one-api?color=brightgreen" alt="docker pull">

|

||||||

|

</a>

|

||||||

|

<a href="https://github.com/songquanpeng/one-api/releases/latest">

|

||||||

|

<img src="https://img.shields.io/github/downloads/songquanpeng/one-api/total?color=brightgreen&include_prereleases" alt="release">

|

||||||

|

</a>

|

||||||

|

<a href="https://goreportcard.com/report/github.com/songquanpeng/one-api">

|

||||||

|

<img src="https://goreportcard.com/badge/github.com/songquanpeng/one-api" alt="GoReportCard">

|

||||||

|

</a>

|

||||||

|

</p>

|

||||||

|

|

||||||

|

<p align="center">

|

||||||

|

<a href="#deployment">デプロイチュートリアル</a>

|

||||||

|

·

|

||||||

|

<a href="#usage">使用方法</a>

|

||||||

|

·

|

||||||

|

<a href="https://github.com/songquanpeng/one-api/issues">フィードバック</a>

|

||||||

|

·

|

||||||

|

<a href="#screenshots">スクリーンショット</a>

|

||||||

|

·

|

||||||

|

<a href="https://openai.justsong.cn/">ライブデモ</a>

|

||||||

|

·

|

||||||

|

<a href="#faq">FAQ</a>

|

||||||

|

·

|

||||||

|

<a href="#related-projects">関連プロジェクト</a>

|

||||||

|

·

|

||||||

|

<a href="https://iamazing.cn/page/reward">寄付</a>

|

||||||

|

</p>

|

||||||

|

|

||||||

|

> **警告**: この README は ChatGPT によって翻訳されています。翻訳ミスを発見した場合は遠慮なく PR を投稿してください。

|

||||||

|

|

||||||

|

> **警告**: 英語版の Docker イメージは `justsong/one-api-en` です。

|

||||||

|

|

||||||

|

> **注**: Docker からプルされた最新のイメージは、`alpha` リリースかもしれません。安定性が必要な場合は、手動でバージョンを指定してください。

|

||||||

|

|

||||||

|

## 特徴

|

||||||

|

1. 複数の大型モデルをサポート:

|

||||||

|

+ [x] [OpenAI ChatGPT シリーズモデル](https://platform.openai.com/docs/guides/gpt/chat-completions-api) ([Azure OpenAI API](https://learn.microsoft.com/en-us/azure/ai-services/openai/reference) をサポート)

|

||||||

|

+ [x] [Anthropic Claude シリーズモデル](https://anthropic.com)

|

||||||

|

+ [x] [Google PaLM2 シリーズモデル](https://developers.generativeai.google)

|

||||||

|

+ [x] [Baidu Wenxin Yiyuan シリーズモデル](https://cloud.baidu.com/doc/WENXINWORKSHOP/index.html)

|

||||||

|

+ [x] [Alibaba Tongyi Qianwen シリーズモデル](https://help.aliyun.com/document_detail/2400395.html)

|

||||||

|

+ [x] [Zhipu ChatGLM シリーズモデル](https://bigmodel.cn)

|

||||||

|

2. **ロードバランシング**による複数チャンネルへのアクセスをサポート。

|

||||||

|

3. ストリーム伝送によるタイプライター的効果を可能にする**ストリームモード**に対応。

|

||||||

|

4. **マルチマシンデプロイ**に対応。[詳細はこちら](#multi-machine-deployment)を参照。

|

||||||

|

5. トークンの有効期限や使用回数を設定できる**トークン管理**に対応しています。

|

||||||

|

6. **バウチャー管理**に対応しており、バウチャーの一括生成やエクスポートが可能です。バウチャーは口座残高の補充に利用できます。

|

||||||

|

7. **チャンネル管理**に対応し、チャンネルの一括作成が可能。

|

||||||

|

8. グループごとに異なるレートを設定するための**ユーザーグループ**と**チャンネルグループ**をサポートしています。

|

||||||

|

9. チャンネル**モデルリスト設定**に対応。

|

||||||

|

10. **クォータ詳細チェック**をサポート。

|

||||||

|

11. **ユーザー招待報酬**をサポートします。

|

||||||

|

12. 米ドルでの残高表示が可能。

|

||||||

|

13. 新規ユーザー向けのお知らせ公開、リチャージリンク設定、初期残高設定に対応。

|

||||||

|

14. 豊富な**カスタマイズ**オプションを提供します:

|

||||||

|

1. システム名、ロゴ、フッターのカスタマイズが可能。

|

||||||

|

2. HTML と Markdown コードを使用したホームページとアバウトページのカスタマイズ、または iframe を介したスタンドアロンウェブページの埋め込みをサポートしています。

|

||||||

|

15. システム・アクセストークンによる管理 API アクセスをサポートする。

|

||||||

|

16. Cloudflare Turnstile によるユーザー認証に対応。

|

||||||

|

17. ユーザー管理と複数のユーザーログイン/登録方法をサポート:

|

||||||

|

+ 電子メールによるログイン/登録とパスワードリセット。

|

||||||

|

+ [GitHub OAuth](https://github.com/settings/applications/new)。

|

||||||

|

+ WeChat 公式アカウントの認証([WeChat Server](https://github.com/songquanpeng/wechat-server)の追加導入が必要)。

|

||||||

|

18. 他の主要なモデル API が利用可能になった場合、即座にサポートし、カプセル化する。

|

||||||

|

|

||||||

|

## デプロイメント

|

||||||

|

### Docker デプロイメント

|

||||||

|

デプロイコマンド: `docker run --name one-api -d --restart always -p 3000:3000 -e TZ=Asia/Shanghai -v /home/ubuntu/data/one-api:/data justsong/one-api-en`。

|

||||||

|

|

||||||

|

コマンドを更新する: `docker run --rm -v /var/run/docker.sock:/var/run/docker.sock containrr/watchtower -cR`。

|

||||||

|

|

||||||

|

`-p 3000:3000` の最初の `3000` はホストのポートで、必要に応じて変更できます。

|

||||||

|

|

||||||

|

データはホストの `/home/ubuntu/data/one-api` ディレクトリに保存される。このディレクトリが存在し、書き込み権限があることを確認する、もしくは適切なディレクトリに変更してください。

|

||||||

|

|

||||||

|

Nginxリファレンス設定:

|

||||||

|

```

|

||||||

|

server{

|

||||||

|

server_name openai.justsong.cn; # ドメイン名は適宜変更

|

||||||

|

|

||||||

|

location / {

|

||||||

|

client_max_body_size 64m;

|

||||||

|

proxy_http_version 1.1;

|

||||||

|

proxy_pass http://localhost:3000; # それに応じてポートを変更

|

||||||

|

proxy_set_header Host $host;

|

||||||

|

proxy_set_header X-Forwarded-For $remote_addr;

|

||||||

|

proxy_cache_bypass $http_upgrade;

|

||||||

|

proxy_set_header Accept-Encoding gzip;

|

||||||

|

proxy_read_timeout 300s; # GPT-4 はより長いタイムアウトが必要

|

||||||

|

}

|

||||||

|

}

|

||||||

|

```

|

||||||

|

|

||||||

|

次に、Let's Encrypt certbot を使って HTTPS を設定します:

|

||||||

|

```bash

|

||||||

|

# Ubuntu に certbot をインストール:

|

||||||

|

sudo snap install --classic certbot

|

||||||

|

sudo ln -s /snap/bin/certbot /usr/bin/certbot

|

||||||

|

# 証明書の生成と Nginx 設定の変更

|

||||||

|

sudo certbot --nginx

|

||||||

|

# プロンプトに従う

|

||||||

|

# Nginx を再起動

|

||||||

|

sudo service nginx restart

|

||||||

|

```

|

||||||

|

|

||||||

|

初期アカウントのユーザー名は `root` で、パスワードは `123456` です。

|

||||||

|

|

||||||

|

### マニュアルデプロイ

|

||||||

|

1. [GitHub Releases](https://github.com/songquanpeng/one-api/releases/latest) から実行ファイルをダウンロードする、もしくはソースからコンパイルする:

|

||||||

|

```shell

|

||||||

|

git clone https://github.com/songquanpeng/one-api.git

|

||||||

|

|

||||||

|

# フロントエンドのビルド

|

||||||

|

cd one-api/web

|

||||||

|

npm install

|

||||||

|

npm run build

|

||||||

|

|

||||||

|

# バックエンドのビルド

|

||||||

|

cd ..

|

||||||

|

go mod download

|

||||||

|

go build -ldflags "-s -w" -o one-api

|

||||||

|

```

|

||||||

|

2. 実行:

|

||||||

|

```shell

|

||||||

|

chmod u+x one-api

|

||||||

|

./one-api --port 3000 --log-dir ./logs

|

||||||

|

```

|

||||||

|

3. [http://localhost:3000/](http://localhost:3000/) にアクセスし、ログインする。初期アカウントのユーザー名は `root`、パスワードは `123456` である。

|

||||||

|

|

||||||

|

より詳細なデプロイのチュートリアルについては、[このページ](https://iamazing.cn/page/how-to-deploy-a-website) を参照してください。

|

||||||

|

|

||||||

|

### マルチマシンデプロイ

|

||||||

|

1. すべてのサーバに同じ `SESSION_SECRET` を設定する。

|

||||||

|

2. `SQL_DSN` を設定し、SQLite の代わりに MySQL を使用する。すべてのサーバは同じデータベースに接続する。

|

||||||

|

3. マスターノード以外のノードの `NODE_TYPE` を `slave` に設定する。

|

||||||

|

4. データベースから定期的に設定を同期するサーバーには `SYNC_FREQUENCY` を設定する。

|

||||||

|

5. マスター以外のノードでは、オプションで `FRONTEND_BASE_URL` を設定して、ページ要求をマスターサーバーにリダイレクトすることができます。

|

||||||

|

6. マスター以外のノードには Redis を個別にインストールし、`REDIS_CONN_STRING` を設定して、キャッシュの有効期限が切れていないときにデータベースにゼロレイテンシーでアクセスできるようにする。

|

||||||

|

7. メインサーバーでもデータベースへのアクセスが高レイテンシになる場合は、Redis を有効にし、`SYNC_FREQUENCY` を設定してデータベースから定期的に設定を同期する必要がある。

|

||||||

|

|

||||||

|

Please refer to the [environment variables](#environment-variables) section for details on using environment variables.

|

||||||

|

|

||||||

|

### コントロールパネル(例: Baota)への展開

|

||||||

|

詳しい手順は [#175](https://github.com/songquanpeng/one-api/issues/175) を参照してください。

|

||||||

|

|

||||||

|

配置後に空白のページが表示される場合は、[#97](https://github.com/songquanpeng/one-api/issues/97) を参照してください。

|

||||||

|

|

||||||

|

### サードパーティプラットフォームへのデプロイ

|

||||||

|

<details>

|

||||||

|

<summary><strong>Sealos へのデプロイ</strong></summary>

|

||||||

|

<div>

|

||||||

|

|

||||||

|

> Sealos は、高い同時実行性、ダイナミックなスケーリング、数百万人のユーザーに対する安定した運用をサポートしています。

|

||||||

|

|

||||||

|

> 下のボタンをクリックすると、ワンクリックで展開できます。👇

|

||||||

|

|

||||||

|

[](https://cloud.sealos.io/?openapp=system-fastdeploy?templateName=one-api)

|

||||||

|

|

||||||

|

|

||||||

|

</div>

|

||||||

|

</details>

|

||||||

|

|

||||||

|

<details>

|

||||||

|

<summary><strong>Zeabur へのデプロイ</strong></summary>

|

||||||

|

<div>

|

||||||

|

|

||||||

|

> Zeabur のサーバーは海外にあるため、ネットワークの問題は自動的に解決されます。

|

||||||

|

|

||||||

|

1. まず、コードをフォークする。

|

||||||

|

2. [Zeabur](https://zeabur.com?referralCode=songquanpeng) にアクセスしてログインし、コンソールに入る。

|

||||||

|

3. 新しいプロジェクトを作成します。Service -> Add ServiceでMarketplace を選択し、MySQL を選択する。接続パラメータ(ユーザー名、パスワード、アドレス、ポート)をメモします。

|

||||||

|

4. 接続パラメータをコピーし、```create database `one-api` ``` を実行してデータベースを作成する。

|

||||||

|

5. その後、Service -> Add Service で Git を選択し(最初の使用には認証が必要です)、フォークしたリポジトリを選択します。

|

||||||

|

6. 自動デプロイが開始されますが、一旦キャンセルしてください。Variable タブで `PORT` に `3000` を追加し、`SQL_DSN` に `<username>:<password>@tcp(<addr>:<port>)/one-api` を追加します。変更を保存する。SQL_DSN` が設定されていないと、データが永続化されず、再デプロイ後にデータが失われるので注意すること。

|

||||||

|

7. 再デプロイを選択します。

|

||||||

|

8. Domains タブで、"my-one-api" のような適切なドメイン名の接頭辞を選択する。最終的なドメイン名は "my-one-api.zeabur.app" となります。独自のドメイン名を CNAME することもできます。

|

||||||

|

9. デプロイが完了するのを待ち、生成されたドメイン名をクリックして One API にアクセスします。

|

||||||

|

|

||||||

|

</div>

|

||||||

|

</details>

|

||||||

|

|

||||||

|

## コンフィグ

|

||||||

|

システムは箱から出してすぐに使えます。

|

||||||

|

|

||||||

|

環境変数やコマンドラインパラメータを設定することで、システムを構成することができます。

|

||||||

|

|

||||||

|

システム起動後、`root` ユーザーとしてログインし、さらにシステムを設定します。

|

||||||

|

|

||||||

|

## 使用方法

|

||||||

|

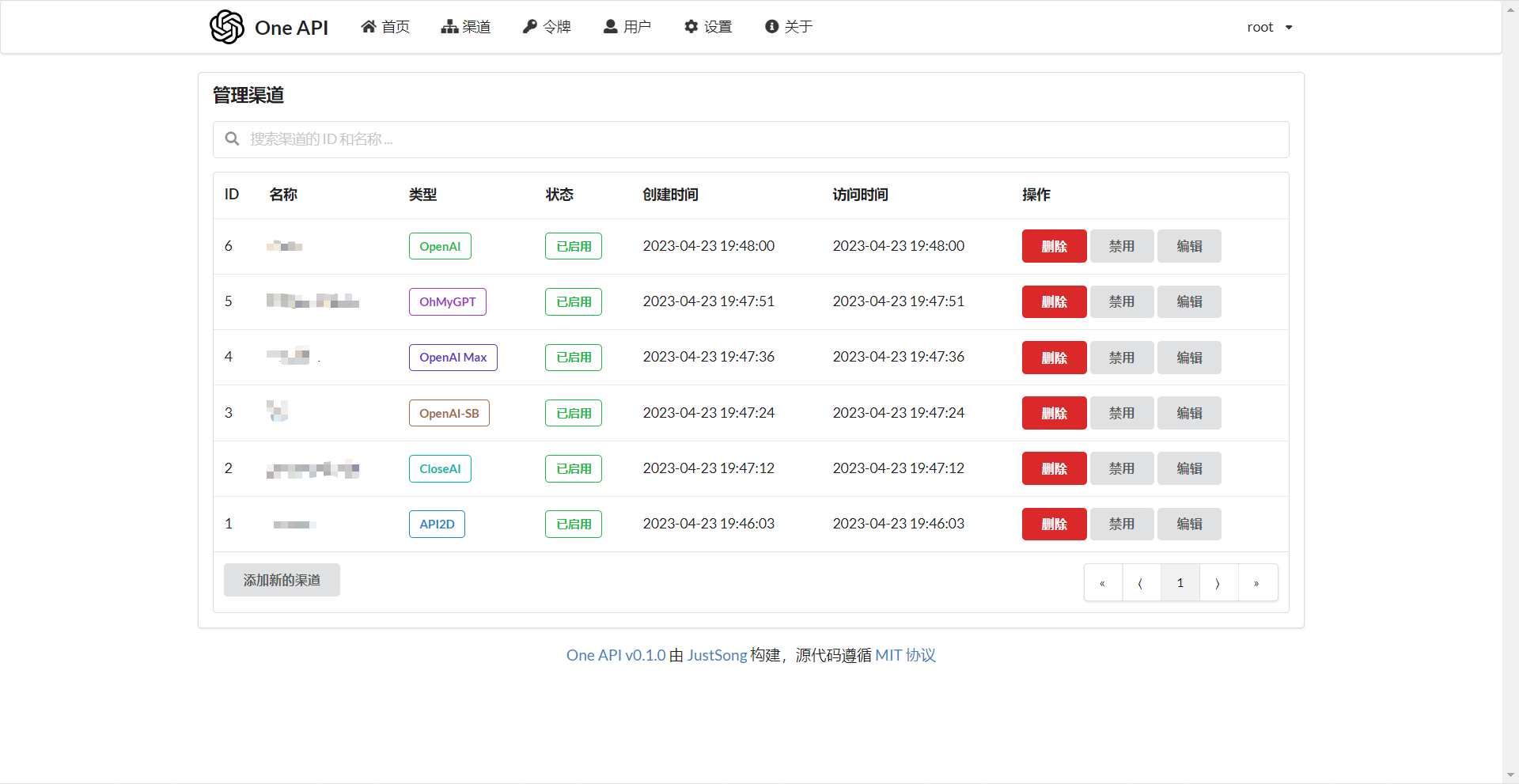

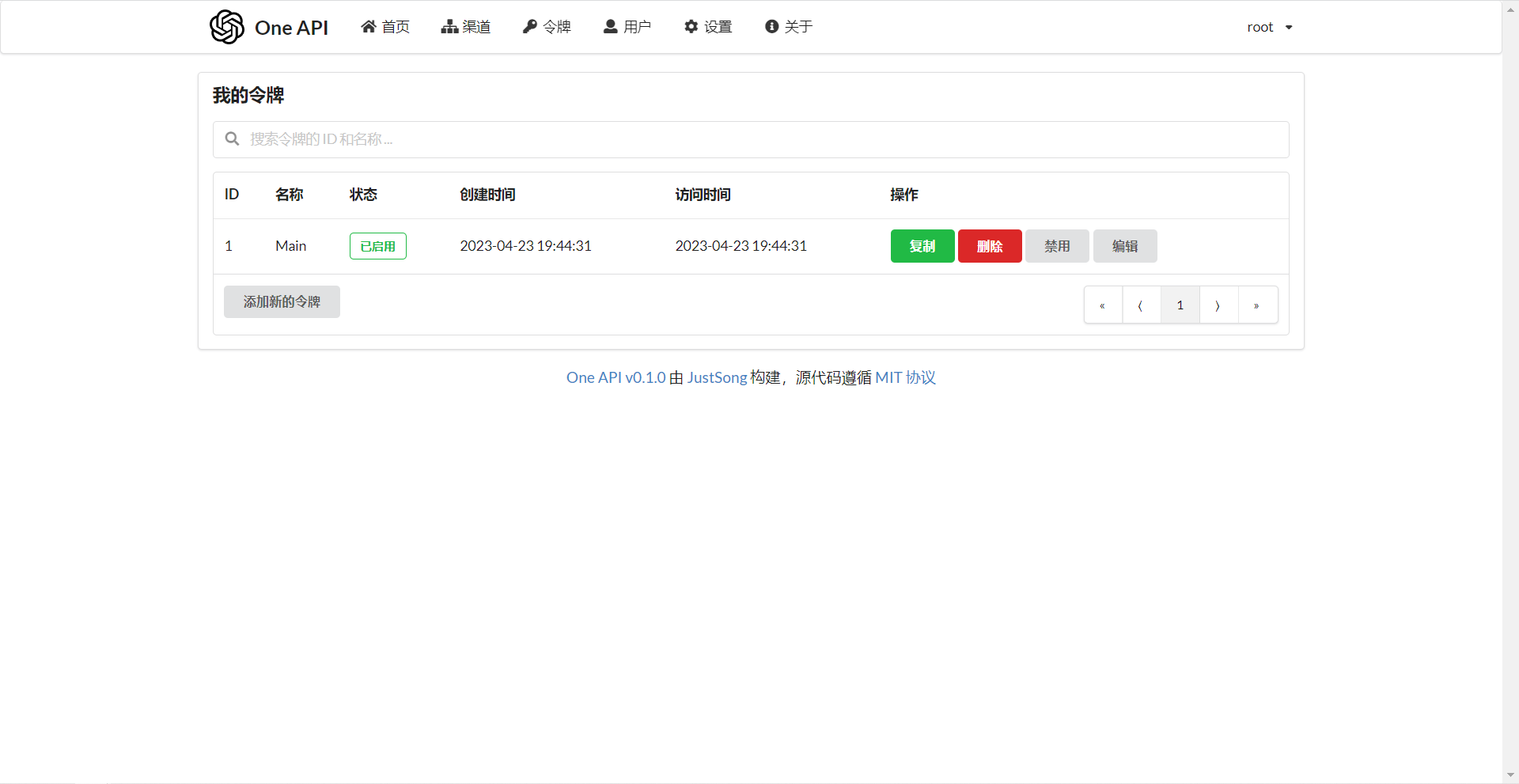

`Channels` ページで API Key を追加し、`Tokens` ページでアクセストークンを追加する。

|

||||||

|

|

||||||

|

アクセストークンを使って One API にアクセスすることができる。使い方は [OpenAI API](https://platform.openai.com/docs/api-reference/introduction) と同じです。

|

||||||

|

|

||||||

|

OpenAI API が使用されている場所では、API Base に One API のデプロイアドレスを設定することを忘れないでください(例: `https://openai.justsong.cn`)。API Key は One API で生成されたトークンでなければなりません。

|

||||||

|

|

||||||

|

具体的な API Base のフォーマットは、使用しているクライアントに依存することに注意してください。

|

||||||

|

|

||||||

|

```mermaid

|

||||||

|

graph LR

|

||||||

|

A(ユーザ)

|

||||||

|

A --->|リクエスト| B(One API)

|

||||||

|

B -->|中継リクエスト| C(OpenAI)

|

||||||

|

B -->|中継リクエスト| D(Azure)

|

||||||

|

B -->|中継リクエスト| E(その他のダウンストリームチャンネル)

|

||||||

|

```

|

||||||

|

|

||||||

|

現在のリクエストにどのチャネルを使うかを指定するには、トークンの後に チャネル ID を追加します: 例えば、`Authorization: Bearer ONE_API_KEY-CHANNEL_ID` のようにします。

|

||||||

|

チャンネル ID を指定するためには、トークンは管理者によって作成される必要があることに注意してください。

|

||||||

|

|

||||||

|

もしチャネル ID が指定されない場合、ロードバランシングによってリクエストが複数のチャネルに振り分けられます。

|

||||||

|

|

||||||

|

### 環境変数

|

||||||

|

1. `REDIS_CONN_STRING`: 設定すると、リクエストレート制限のためのストレージとして、メモリの代わりに Redis が使われる。

|

||||||

|

+ 例: `REDIS_CONN_STRING=redis://default:redispw@localhost:49153`

|

||||||

|

2. `SESSION_SECRET`: 設定すると、固定セッションキーが使用され、システムの再起動後もログインユーザーのクッキーが有効であることが保証されます。

|

||||||

|

+ 例: `SESSION_SECRET=random_string`

|

||||||

|

3. `SQL_DSN`: 設定すると、SQLite の代わりに指定したデータベースが使用されます。MySQL バージョン 8.0 を使用してください。

|

||||||

|

+ 例: `SQL_DSN=root:123456@tcp(localhost:3306)/oneapi`

|

||||||

|

4. `FRONTEND_BASE_URL`: 設定されると、バックエンドアドレスではなく、指定されたフロントエンドアドレスが使われる。

|

||||||

|

+ 例: `FRONTEND_BASE_URL=https://openai.justsong.cn`

|

||||||

|

5. `SYNC_FREQUENCY`: 設定された場合、システムは定期的にデータベースからコンフィグを秒単位で同期する。設定されていない場合、同期は行われません。

|

||||||

|

+ 例: `SYNC_FREQUENCY=60`

|

||||||

|

6. `NODE_TYPE`: 設定すると、ノードのタイプを指定する。有効な値は `master` と `slave` である。設定されていない場合、デフォルトは `master`。

|

||||||

|

+ 例: `NODE_TYPE=slave`

|

||||||

|

7. `CHANNEL_UPDATE_FREQUENCY`: 設定すると、チャンネル残高を分単位で定期的に更新する。設定されていない場合、更新は行われません。

|

||||||

|

+ 例: `CHANNEL_UPDATE_FREQUENCY=1440`

|

||||||

|

8. `CHANNEL_TEST_FREQUENCY`: 設定すると、チャンネルを定期的にテストする。設定されていない場合、テストは行われません。

|

||||||

|

+ 例: `CHANNEL_TEST_FREQUENCY=1440`

|

||||||

|

9. `POLLING_INTERVAL`: チャネル残高の更新とチャネルの可用性をテストするときのリクエスト間の時間間隔 (秒)。デフォルトは間隔なし。

|

||||||

|

+ 例: `POLLING_INTERVAL=5`

|

||||||

|

|

||||||

|

### コマンドラインパラメータ

|

||||||

|

1. `--port <port_number>`: サーバがリッスンするポート番号を指定。デフォルトは `3000` です。

|

||||||

|

+ 例: `--port 3000`

|

||||||

|

2. `--log-dir <log_dir>`: ログディレクトリを指定。設定しない場合、ログは保存されません。

|

||||||

|

+ 例: `--log-dir ./logs`

|

||||||

|

3. `--version`: システムのバージョン番号を表示して終了する。

|

||||||

|

4. `--help`: コマンドの使用法ヘルプとパラメータの説明を表示。

|

||||||

|

|

||||||

|

## スクリーンショット

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

## FAQ

|

||||||

|

1. ノルマとは何か?どのように計算されますか?One API にはノルマ計算の問題はありますか?

|

||||||

|

+ ノルマ = グループ倍率 * モデル倍率 * (プロンプトトークンの数 + 完了トークンの数 * 完了倍率)

|

||||||

|

+ 完了倍率は、公式の定義と一致するように、GPT3.5 では 1.33、GPT4 では 2 に固定されています。

|

||||||

|

+ ストリームモードでない場合、公式 API は消費したトークンの総数を返す。ただし、プロンプトとコンプリートの消費倍率は異なるので注意してください。

|

||||||

|

2. アカウント残高は十分なのに、"insufficient quota" と表示されるのはなぜですか?

|

||||||

|

+ トークンのクォータが十分かどうかご確認ください。トークンクォータはアカウント残高とは別のものです。

|

||||||

|

+ トークンクォータは最大使用量を設定するためのもので、ユーザーが自由に設定できます。

|

||||||

|

3. チャンネルを使おうとすると "No available channels" と表示されます。どうすればいいですか?

|

||||||

|

+ ユーザーとチャンネルグループの設定を確認してください。

|

||||||

|

+ チャンネルモデルの設定も確認してください。

|

||||||

|

4. チャンネルテストがエラーを報告する: "invalid character '<' looking for beginning of value"

|

||||||

|

+ このエラーは、返された値が有効な JSON ではなく、HTML ページである場合に発生する。

|

||||||

|

+ ほとんどの場合、デプロイサイトのIPかプロキシのノードが CloudFlare によってブロックされています。

|

||||||

|

5. ChatGPT Next Web でエラーが発生しました: "Failed to fetch"

|

||||||

|

+ デプロイ時に `BASE_URL` を設定しないでください。

|

||||||

|

+ インターフェイスアドレスと API Key が正しいか再確認してください。

|

||||||

|

|

||||||

|

## 関連プロジェクト

|

||||||

|

[FastGPT](https://github.com/labring/FastGPT): LLM に基づく知識質問応答システム

|

||||||

|

|

||||||

|

## 注

|

||||||

|

本プロジェクトはオープンソースプロジェクトです。OpenAI の[利用規約](https://openai.com/policies/terms-of-use)および**適用される法令**を遵守してご利用ください。違法な目的での利用はご遠慮ください。

|

||||||

|

|

||||||

|

このプロジェクトは MIT ライセンスで公開されています。これに基づき、ページの最下部に帰属表示と本プロジェクトへのリンクを含める必要があります。

|

||||||

|

|

||||||

|

このプロジェクトを基にした派生プロジェクトについても同様です。

|

||||||

|

|

||||||

|

帰属表示を含めたくない場合は、事前に許可を得なければなりません。

|

||||||

|

|

||||||

|

MIT ライセンスによると、このプロジェクトを利用するリスクと責任は利用者が負うべきであり、このオープンソースプロジェクトの開発者は責任を負いません。

|

||||||

82

README.md

@ -1,5 +1,5 @@

|

|||||||

<p align="right">

|

<p align="right">

|

||||||

<strong>中文</strong> | <a href="./README.en.md">English</a>

|

<strong>中文</strong> | <a href="./README.en.md">English</a> | <a href="./README.ja.md">日本語</a>

|

||||||

</p>

|

</p>

|

||||||

|

|

||||||

|

|

||||||

@ -51,11 +51,13 @@ _✨ 通过标准的 OpenAI API 格式访问所有的大模型,开箱即用

|

|||||||

<a href="https://iamazing.cn/page/reward">赞赏支持</a>

|

<a href="https://iamazing.cn/page/reward">赞赏支持</a>

|

||||||

</p>

|

</p>

|

||||||

|

|

||||||

> **Note**:本项目为开源项目,使用者必须在遵循 OpenAI 的[使用条款](https://openai.com/policies/terms-of-use)以及**法律法规**的情况下使用,不得用于非法用途。

|

> **Note**

|

||||||

|

> 本项目为开源项目,使用者必须在遵循 OpenAI 的[使用条款](https://openai.com/policies/terms-of-use)以及**法律法规**的情况下使用,不得用于非法用途。

|

||||||

|

>

|

||||||

|

> 根据[《生成式人工智能服务管理暂行办法》](http://www.cac.gov.cn/2023-07/13/c_1690898327029107.htm)的要求,请勿对中国地区公众提供一切未经备案的生成式人工智能服务。

|

||||||

|

|

||||||

> **Note**:使用 Docker 拉取的最新镜像可能是 `alpha` 版本,如果追求稳定性请手动指定版本。

|

> **Warning**

|

||||||

|

> 使用 Docker 拉取的最新镜像可能是 `alpha` 版本,如果追求稳定性请手动指定版本。

|

||||||

> **Warning**:从 `v0.3` 版本升级到 `v0.4` 版本需要手动迁移数据库,请手动执行[数据库迁移脚本](./bin/migration_v0.3-v0.4.sql)。

|

|

||||||

|

|

||||||

## 功能

|

## 功能

|

||||||

1. 支持多种大模型:

|

1. 支持多种大模型:

|

||||||

@ -63,14 +65,16 @@ _✨ 通过标准的 OpenAI API 格式访问所有的大模型,开箱即用

|

|||||||

+ [x] [Anthropic Claude 系列模型](https://anthropic.com)

|

+ [x] [Anthropic Claude 系列模型](https://anthropic.com)

|

||||||

+ [x] [Google PaLM2 系列模型](https://developers.generativeai.google)

|

+ [x] [Google PaLM2 系列模型](https://developers.generativeai.google)

|

||||||

+ [x] [百度文心一言系列模型](https://cloud.baidu.com/doc/WENXINWORKSHOP/index.html)

|

+ [x] [百度文心一言系列模型](https://cloud.baidu.com/doc/WENXINWORKSHOP/index.html)

|

||||||

|

+ [x] [阿里通义千问系列模型](https://help.aliyun.com/document_detail/2400395.html)

|

||||||

|

+ [x] [讯飞星火认知大模型](https://www.xfyun.cn/doc/spark/Web.html)

|

||||||

+ [x] [智谱 ChatGLM 系列模型](https://bigmodel.cn)

|

+ [x] [智谱 ChatGLM 系列模型](https://bigmodel.cn)

|

||||||

|

+ [x] [360 智脑](https://ai.360.cn)

|

||||||

2. 支持配置镜像以及众多第三方代理服务:

|

2. 支持配置镜像以及众多第三方代理服务:

|

||||||

+ [x] [API Distribute](https://api.gptjk.top/register?aff=QGxj)

|

|

||||||

+ [x] [OpenAI-SB](https://openai-sb.com)

|

+ [x] [OpenAI-SB](https://openai-sb.com)

|

||||||

|

+ [x] [CloseAI](https://console.closeai-asia.com/r/2412)

|

||||||

+ [x] [API2D](https://api2d.com/r/197971)

|

+ [x] [API2D](https://api2d.com/r/197971)

|

||||||

+ [x] [OhMyGPT](https://aigptx.top?aff=uFpUl2Kf)

|

+ [x] [OhMyGPT](https://aigptx.top?aff=uFpUl2Kf)

|

||||||

+ [x] [AI Proxy](https://aiproxy.io/?i=OneAPI) (邀请码:`OneAPI`)

|

+ [x] [AI Proxy](https://aiproxy.io/?i=OneAPI) (邀请码:`OneAPI`)

|

||||||

+ [x] [CloseAI](https://console.closeai-asia.com/r/2412)

|

|

||||||

+ [x] 自定义渠道:例如各种未收录的第三方代理服务

|

+ [x] 自定义渠道:例如各种未收录的第三方代理服务

|

||||||

3. 支持通过**负载均衡**的方式访问多个渠道。

|

3. 支持通过**负载均衡**的方式访问多个渠道。

|

||||||

4. 支持 **stream 模式**,可以通过流式传输实现打字机效果。

|

4. 支持 **stream 模式**,可以通过流式传输实现打字机效果。

|

||||||

@ -93,7 +97,7 @@ _✨ 通过标准的 OpenAI API 格式访问所有的大模型,开箱即用

|

|||||||

19. 支持通过系统访问令牌访问管理 API。

|

19. 支持通过系统访问令牌访问管理 API。

|

||||||

20. 支持 Cloudflare Turnstile 用户校验。

|

20. 支持 Cloudflare Turnstile 用户校验。

|

||||||

21. 支持用户管理,支持**多种用户登录注册方式**:

|

21. 支持用户管理,支持**多种用户登录注册方式**:

|

||||||

+ 邮箱登录注册以及通过邮箱进行密码重置。

|

+ 邮箱登录注册(支持注册邮箱白名单)以及通过邮箱进行密码重置。

|

||||||

+ [GitHub 开放授权](https://github.com/settings/applications/new)。

|

+ [GitHub 开放授权](https://github.com/settings/applications/new)。

|

||||||

+ 微信公众号授权(需要额外部署 [WeChat Server](https://github.com/songquanpeng/wechat-server))。

|

+ 微信公众号授权(需要额外部署 [WeChat Server](https://github.com/songquanpeng/wechat-server))。

|

||||||

|

|

||||||

@ -101,16 +105,18 @@ _✨ 通过标准的 OpenAI API 格式访问所有的大模型,开箱即用

|

|||||||

### 基于 Docker 进行部署

|

### 基于 Docker 进行部署

|

||||||

部署命令:`docker run --name one-api -d --restart always -p 3000:3000 -e TZ=Asia/Shanghai -v /home/ubuntu/data/one-api:/data justsong/one-api`

|

部署命令:`docker run --name one-api -d --restart always -p 3000:3000 -e TZ=Asia/Shanghai -v /home/ubuntu/data/one-api:/data justsong/one-api`

|

||||||

|

|

||||||

如果上面的镜像无法拉取,可以尝试使用 GitHub 的 Docker 镜像,将上面的 `justsong/one-api` 替换为 `ghcr.io/songquanpeng/one-api` 即可。

|

其中,`-p 3000:3000` 中的第一个 `3000` 是宿主机的端口,可以根据需要进行修改。

|

||||||

|

|

||||||

如果你的并发量较大,推荐设置 `SQL_DSN`,详见下面[环境变量](#环境变量)一节。

|

|

||||||

|

|

||||||

更新命令:`docker run --rm -v /var/run/docker.sock:/var/run/docker.sock containrrr/watchtower -cR`

|

|

||||||

|

|

||||||

`-p 3000:3000` 中的第一个 `3000` 是宿主机的端口,可以根据需要进行修改。

|

|

||||||

|

|

||||||

数据将会保存在宿主机的 `/home/ubuntu/data/one-api` 目录,请确保该目录存在且具有写入权限,或者更改为合适的目录。

|

数据将会保存在宿主机的 `/home/ubuntu/data/one-api` 目录,请确保该目录存在且具有写入权限,或者更改为合适的目录。

|

||||||

|

|

||||||

|

如果启动失败,请添加 `--privileged=true`,具体参考 https://github.com/songquanpeng/one-api/issues/482 。

|

||||||

|

|

||||||

|

如果上面的镜像无法拉取,可以尝试使用 GitHub 的 Docker 镜像,将上面的 `justsong/one-api` 替换为 `ghcr.io/songquanpeng/one-api` 即可。

|

||||||

|

|

||||||

|

如果你的并发量较大,**务必**设置 `SQL_DSN`,详见下面[环境变量](#环境变量)一节。

|

||||||

|

|

||||||

|

更新命令:`docker run --rm -v /var/run/docker.sock:/var/run/docker.sock containrrr/watchtower -cR`

|

||||||

|

|

||||||

Nginx 的参考配置:

|

Nginx 的参考配置:

|

||||||

```

|

```

|

||||||

server{

|

server{

|

||||||

@ -152,7 +158,7 @@ sudo service nginx restart

|

|||||||

cd one-api/web

|

cd one-api/web

|

||||||

npm install

|

npm install

|

||||||

npm run build

|

npm run build

|

||||||

|

|

||||||

# 构建后端

|

# 构建后端

|

||||||

cd ..

|

cd ..

|

||||||

go mod download

|

go mod download

|

||||||

@ -205,14 +211,23 @@ docker run --name chatgpt-web -d -p 3002:3002 -e OPENAI_API_BASE_URL=https://ope

|

|||||||

|

|

||||||

注意修改端口号、`OPENAI_API_BASE_URL` 和 `OPENAI_API_KEY`。

|

注意修改端口号、`OPENAI_API_BASE_URL` 和 `OPENAI_API_KEY`。

|

||||||

|

|

||||||

|

#### QChatGPT - QQ机器人

|

||||||

|

项目主页:https://github.com/RockChinQ/QChatGPT

|

||||||

|

|

||||||

|

根据文档完成部署后,在`config.py`设置配置项`openai_config`的`reverse_proxy`为 One API 后端地址,设置`api_key`为 One API 生成的key,并在配置项`completion_api_params`的`model`参数设置为 One API 支持的模型名称。

|

||||||

|

|

||||||

|

可安装 [Switcher 插件](https://github.com/RockChinQ/Switcher)在运行时切换所使用的模型。

|

||||||

|

|

||||||

### 部署到第三方平台

|

### 部署到第三方平台

|

||||||

<details>

|

<details>

|

||||||

<summary><strong>部署到 Sealos </strong></summary>

|

<summary><strong>部署到 Sealos </strong></summary>

|

||||||

<div>

|

<div>

|

||||||

|

|

||||||

> Sealos 可视化部署,仅需 1 分钟。

|

> Sealos 的服务器在国外,不需要额外处理网络问题,支持高并发 & 动态伸缩。

|

||||||

|

|

||||||

参考这个[教程](https://github.com/c121914yu/FastGPT/blob/main/docs/deploy/one-api/sealos.md)中 1~5 步。

|

点击以下按钮一键部署(部署后访问出现 404 请等待 3~5 分钟):

|

||||||

|

|

||||||

|

[](https://cloud.sealos.io/?openapp=system-fastdeploy?templateName=one-api)

|

||||||

|

|

||||||

</div>

|

</div>

|

||||||

</details>

|

</details>

|

||||||

@ -224,7 +239,7 @@ docker run --name chatgpt-web -d -p 3002:3002 -e OPENAI_API_BASE_URL=https://ope

|

|||||||

> Zeabur 的服务器在国外,自动解决了网络的问题,同时免费的额度也足够个人使用。

|

> Zeabur 的服务器在国外,自动解决了网络的问题,同时免费的额度也足够个人使用。

|

||||||

|

|

||||||

1. 首先 fork 一份代码。

|

1. 首先 fork 一份代码。

|

||||||

2. 进入 [Zeabur](https://zeabur.com/),登录,进入控制台。

|

2. 进入 [Zeabur](https://zeabur.com?referralCode=songquanpeng),登录,进入控制台。

|

||||||

3. 新建一个 Project,在 Service -> Add Service 选择 Marketplace,选择 MySQL,并记下连接参数(用户名、密码、地址、端口)。

|

3. 新建一个 Project,在 Service -> Add Service 选择 Marketplace,选择 MySQL,并记下连接参数(用户名、密码、地址、端口)。

|

||||||

4. 复制链接参数,运行 ```create database `one-api` ``` 创建数据库。

|

4. 复制链接参数,运行 ```create database `one-api` ``` 创建数据库。

|

||||||

5. 然后在 Service -> Add Service,选择 Git(第一次使用需要先授权),选择你 fork 的仓库。

|

5. 然后在 Service -> Add Service,选择 Git(第一次使用需要先授权),选择你 fork 的仓库。

|

||||||

@ -269,15 +284,23 @@ graph LR

|

|||||||

不加的话将会使用负载均衡的方式使用多个渠道。

|

不加的话将会使用负载均衡的方式使用多个渠道。

|

||||||

|

|

||||||

### 环境变量

|

### 环境变量

|

||||||

1. `REDIS_CONN_STRING`:设置之后将使用 Redis 作为请求频率限制的存储,而非使用内存存储。

|

1. `REDIS_CONN_STRING`:设置之后将使用 Redis 作为缓存使用。

|

||||||

+ 例子:`REDIS_CONN_STRING=redis://default:redispw@localhost:49153`

|

+ 例子:`REDIS_CONN_STRING=redis://default:redispw@localhost:49153`

|

||||||

|

+ 如果数据库访问延迟很低,没有必要启用 Redis,启用后反而会出现数据滞后的问题。

|

||||||

2. `SESSION_SECRET`:设置之后将使用固定的会话密钥,这样系统重新启动后已登录用户的 cookie 将依旧有效。

|

2. `SESSION_SECRET`:设置之后将使用固定的会话密钥,这样系统重新启动后已登录用户的 cookie 将依旧有效。

|

||||||

+ 例子:`SESSION_SECRET=random_string`

|

+ 例子:`SESSION_SECRET=random_string`

|

||||||

3. `SQL_DSN`:设置之后将使用指定数据库而非 SQLite,请使用 MySQL 8.0 版本。

|

3. `SQL_DSN`:设置之后将使用指定数据库而非 SQLite,请使用 MySQL 或 PostgreSQL。

|

||||||

+ 例子:`SQL_DSN=root:123456@tcp(localhost:3306)/oneapi`

|

+ 例子:

|

||||||

|

+ MySQL:`SQL_DSN=root:123456@tcp(localhost:3306)/oneapi`

|

||||||

|

+ PostgreSQL:`SQL_DSN=postgres://postgres:123456@localhost:5432/oneapi`(适配中,欢迎反馈)

|

||||||

+ 注意需要提前建立数据库 `oneapi`,无需手动建表,程序将自动建表。

|

+ 注意需要提前建立数据库 `oneapi`,无需手动建表,程序将自动建表。

|

||||||

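+ If using a local database: add `--network="host"` to the deployment command so that the program inside the container can reach MySQL on the host.

|

+ If using a local database: add `--network="host"` to the deployment command so that the program inside the container can reach MySQL on the host.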

|

||||||

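+ If using a cloud database: if the cloud server requires identity verification, add `?tls=skip-verify` to the connection parameters.

|

+ If using a cloud database: if the cloud server requires identity verification, add `?tls=skip-verify` to the connection parameters.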

|

||||||

|

+ Adjust the following parameters to match your database configuration (or keep the defaults):

|

||||||

|

+ `SQL_MAX_IDLE_CONNS`: maximum number of idle connections; defaults to `100`.

|

||||||

|

+ `SQL_MAX_OPEN_CONNS`: maximum number of open connections; defaults to `1000`.

|

||||||

|

+ If you see `Error 1040: Too many connections`, reduce this value accordingly.

|

||||||

|

+ `SQL_CONN_MAX_LIFETIME`: maximum lifetime of a connection, in minutes; defaults to `60`.

|

||||||

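4. `FRONTEND_BASE_URL`: when set, page requests are redirected to the specified address; set this on slave servers only.

|

4. `FRONTEND_BASE_URL`: when set, page requests are redirected to the specified address; set this on slave servers only.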

|

||||||

+ Example: `FRONTEND_BASE_URL=https://openai.justsong.cn`

|

+ Example: `FRONTEND_BASE_URL=https://openai.justsong.cn`

|

||||||

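5. `SYNC_FREQUENCY`: when set, configuration is synchronized with the database periodically, at an interval given in seconds; if unset, no synchronization takes place.

|

5. `SYNC_FREQUENCY`: when set, configuration is synchronized with the database periodically, at an interval given in seconds; if unset, no synchronization takes place.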

|

||||||

@ -290,6 +313,14 @@ graph LR

|

|||||||

+ Example: `CHANNEL_TEST_FREQUENCY=1440`

|

+ Example: `CHANNEL_TEST_FREQUENCY=1440`

|

||||||

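9. `POLLING_INTERVAL`: the interval between requests when batch-updating channel balances and testing availability, in seconds; defaults to no interval.

|

9. `POLLING_INTERVAL`: the interval between requests when batch-updating channel balances and testing availability, in seconds; defaults to no interval.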

|

||||||

+ Example: `POLLING_INTERVAL=5`

|

+ Example: `POLLING_INTERVAL=5`

|

||||||

|

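10. `BATCH_UPDATE_ENABLED`: enables aggregated batch updates to the database, which introduces some delay in user quota updates. Valid values are `true` and `false`; defaults to `false` if unset.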

|

||||||

|

+ Example: `BATCH_UPDATE_ENABLED=true`

|

||||||

|

+ If you run into too many database connections, try enabling this option.

|

||||||

|

11. `BATCH_UPDATE_INTERVAL`: the interval between aggregated batch updates, in seconds; defaults to `5`.

|

||||||

|

+ Example: `BATCH_UPDATE_INTERVAL=5`

|

||||||

|

12. Request rate limits:

|

||||||

|

+ `GLOBAL_API_RATE_LIMIT`: global API rate limit (excluding relay requests), expressed as the maximum number of requests per IP within three minutes; defaults to `180`.

|

||||||

|

+ `GLOBAL_WEB_RATE_LIMIT`: global web rate limit, expressed as the maximum number of requests per IP within three minutes; defaults to `60`.

|

||||||

|

|

||||||

### Command-Line Arguments

|

### Command-Line Arguments

|

||||||

1. `--port <port_number>`: the port the server listens on; defaults to `3000`.

|

1. `--port <port_number>`: the port the server listens on; defaults to `3000`.

|

||||||

@ -313,6 +344,7 @@ https://openai.justsong.cn

|

|||||||

+ Quota = group ratio * model ratio * (prompt tokens + completion tokens * completion ratio)

|

+ Quota = group ratio * model ratio * (prompt tokens + completion tokens * completion ratio)

|

||||||

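+ The completion ratio is fixed at 1.33 for GPT3.5 and 2 for GPT4, consistent with official pricing.

|

+ The completion ratio is fixed at 1.33 for GPT3.5 and 2 for GPT4, consistent with official pricing.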

|

||||||

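+ In non-streaming mode, the official API returns the total number of tokens consumed, but note that prompt and completion tokens are billed at different ratios.

|

+ In non-streaming mode, the official API returns the total number of tokens consumed, but note that prompt and completion tokens are billed at different ratios.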

|

||||||

|

+ Note that One API's default ratios are the official ratios and have already been adjusted.

|

||||||

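2. Why am I told the quota is insufficient when my account quota is sufficient?

|

2. Why am I told the quota is insufficient when my account quota is sufficient?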

|

||||||

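+ Check whether your token quota is sufficient; it is separate from the account quota.

|

+ Check whether your token quota is sufficient; it is separate from the account quota.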

|

||||||

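+ The token quota only lets users cap their own maximum usage, and users can set it freely.

|

+ The token quota only lets users cap their own maximum usage, and users can set it freely.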

|

||||||

@ -325,11 +357,13 @@ https://openai.justsong.cn

|

|||||||

5. ChatGPT Next Web reports the error `Failed to fetch`

|

5. ChatGPT Next Web reports the error `Failed to fetch`

|

||||||

+ Do not set `BASE_URL` when deploying.

|

+ Do not set `BASE_URL` when deploying.

|

||||||

+ Check that your API endpoint and API Key are filled in correctly.

|

+ Check that your API endpoint and API Key are filled in correctly.

|

||||||

|

+ Check whether HTTPS is enabled; browsers block HTTP requests made from a page served over HTTPS.

|

||||||

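6. Error: `当前分组负载已饱和,请稍后再试` ("the current group is saturated, please try again later")

|

6. Error: `当前分组负载已饱和,请稍后再试` ("the current group is saturated, please try again later")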

|

||||||

+ The upstream channel returned HTTP 429.

|

+ The upstream channel returned HTTP 429.

|

||||||

|

|

||||||
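The quota formula from the first FAQ entry can be worked through with a small sketch. The function names, the default completion ratio of 1, and the rounding up to a whole quota unit are assumptions made for illustration; only the formula and the GPT3.5/GPT4 completion ratios come from the text above.

```go
package main

import (
	"fmt"
	"math"
	"strings"
)

// completionRatio follows the ratios quoted in the FAQ: about 1.33 for
// GPT-3.5 and 2 for GPT-4. Returning 1 for other models is an assumption.
func completionRatio(model string) float64 {
	switch {
	case strings.HasPrefix(model, "gpt-3.5"):
		return 1.333333
	case strings.HasPrefix(model, "gpt-4"):
		return 2
	}
	return 1
}

// quota applies:
//   group ratio * model ratio * (prompt tokens + completion tokens * completion ratio)
// Rounding up with math.Ceil is a choice of this sketch, not taken from the source.
func quota(groupRatio, modelRatio float64, model string, promptTokens, completionTokens int) int {
	weighted := float64(promptTokens) + float64(completionTokens)*completionRatio(model)
	return int(math.Ceil(groupRatio * modelRatio * weighted))
}

func main() {
	// 1000 prompt tokens and 500 completion tokens on gpt-4 (model ratio 15).
	fmt.Println(quota(1, 15, "gpt-4", 1000, 500))
}
```

Because completion tokens are weighted before the model ratio is applied, two requests with the same total token count can cost different amounts, which is the point the non-streaming note makes.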

## Related Projects

|

## Related Projects

|

||||||

[FastGPT](https://github.com/c121914yu/FastGPT): build an AI knowledge base in three minutes

|

* [FastGPT](https://github.com/labring/FastGPT): a knowledge-base question-answering system built on LLMs

|

||||||

|

* [ChatGPT Next Web](https://github.com/Yidadaa/ChatGPT-Next-Web): get your own cross-platform ChatGPT app with one click

|

||||||

|

|

||||||

## Note

|

## Note

|

||||||

|

|

||||||

@ -337,4 +371,4 @@ https://openai.justsong.cn

|

|||||||

|

|

||||||

This also applies to derivative projects built on this project.

|

This also applies to derivative projects built on this project.

|

||||||

|

|

||||||

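Under the MIT License, users bear the risks and responsibilities of using this project themselves; the developers of this open-source project bear none.

|

Under the MIT License, users bear the risks and responsibilities of using this project themselves; the developers of this open-source project bear none.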

|

||||||

|

|||||||

@ -42,6 +42,21 @@ var WeChatAuthEnabled = false

|

|||||||

var TurnstileCheckEnabled = false

|

var TurnstileCheckEnabled = false

|

||||||

var RegisterEnabled = true

|

var RegisterEnabled = true

|

||||||

|

|

||||||

|

var EmailDomainRestrictionEnabled = false

|

||||||

|

var EmailDomainWhitelist = []string{

|

||||||

|

"gmail.com",

|

||||||

|

"163.com",

|

||||||

|

"126.com",

|

||||||

|

"qq.com",

|

||||||

|

"outlook.com",

|

||||||

|

"hotmail.com",

|

||||||

|

"icloud.com",

|

||||||

|

"yahoo.com",

|

||||||

|

"foxmail.com",

|

||||||

|

}

|

||||||

|

|

||||||

|

var DebugEnabled = os.Getenv("DEBUG") == "true"

|

||||||

|

|

||||||

var LogConsumeEnabled = true

|

var LogConsumeEnabled = true

|

||||||

|

|

||||||

var SMTPServer = ""

|

var SMTPServer = ""

|

||||||

@ -77,6 +92,11 @@ var IsMasterNode = os.Getenv("NODE_TYPE") != "slave"

|

|||||||

var requestInterval, _ = strconv.Atoi(os.Getenv("POLLING_INTERVAL"))

|

var requestInterval, _ = strconv.Atoi(os.Getenv("POLLING_INTERVAL"))

|

||||||

var RequestInterval = time.Duration(requestInterval) * time.Second

|

var RequestInterval = time.Duration(requestInterval) * time.Second

|

||||||

|

|

||||||

|

var SyncFrequency = 10 * 60 // unit is second, will be overwritten by SYNC_FREQUENCY

|

||||||

|

|

||||||

|

var BatchUpdateEnabled = false

|

||||||

|

var BatchUpdateInterval = GetOrDefault("BATCH_UPDATE_INTERVAL", 5)

|

||||||

|

|

||||||

const (

|

const (

|

||||||

RoleGuestUser = 0

|

RoleGuestUser = 0

|

||||||

RoleCommonUser = 1

|

RoleCommonUser = 1

|

||||||

@ -94,10 +114,10 @@ var (

|

|||||||

// All duration's unit is seconds

|

// All duration's unit is seconds

|

||||||

// Shouldn't be larger than RateLimitKeyExpirationDuration

|

// Shouldn't be larger than RateLimitKeyExpirationDuration

|

||||||

var (

|

var (

|

||||||

GlobalApiRateLimitNum = 180

|

GlobalApiRateLimitNum = GetOrDefault("GLOBAL_API_RATE_LIMIT", 180)

|

||||||

GlobalApiRateLimitDuration int64 = 3 * 60

|

GlobalApiRateLimitDuration int64 = 3 * 60

|

||||||

|

|

||||||

GlobalWebRateLimitNum = 60

|

GlobalWebRateLimitNum = GetOrDefault("GLOBAL_WEB_RATE_LIMIT", 60)

|

||||||

GlobalWebRateLimitDuration int64 = 3 * 60

|

GlobalWebRateLimitDuration int64 = 3 * 60

|

||||||

|

|

||||||

UploadRateLimitNum = 10

|

UploadRateLimitNum = 10

|

||||||

@ -137,41 +157,53 @@ const (

|

|||||||

)

|

)

|

||||||

|

|

||||||

const (

|

const (

|

||||||

ChannelTypeUnknown = 0

|

ChannelTypeUnknown = 0

|

||||||

ChannelTypeOpenAI = 1

|

ChannelTypeOpenAI = 1

|

||||||

ChannelTypeAPI2D = 2

|

ChannelTypeAPI2D = 2

|

||||||

ChannelTypeAzure = 3

|

ChannelTypeAzure = 3

|

||||||

ChannelTypeCloseAI = 4

|

ChannelTypeCloseAI = 4

|

||||||

ChannelTypeOpenAISB = 5

|

ChannelTypeOpenAISB = 5

|

||||||

ChannelTypeOpenAIMax = 6

|

ChannelTypeOpenAIMax = 6

|

||||||

ChannelTypeOhMyGPT = 7

|

ChannelTypeOhMyGPT = 7

|

||||||

ChannelTypeCustom = 8

|

ChannelTypeCustom = 8

|

||||||

ChannelTypeAILS = 9

|

ChannelTypeAILS = 9

|

||||||

ChannelTypeAIProxy = 10

|

ChannelTypeAIProxy = 10

|

||||||

ChannelTypePaLM = 11

|

ChannelTypePaLM = 11

|

||||||

ChannelTypeAPI2GPT = 12

|

ChannelTypeAPI2GPT = 12

|

||||||

ChannelTypeAIGC2D = 13

|

ChannelTypeAIGC2D = 13

|

||||||

ChannelTypeAnthropic = 14

|

ChannelTypeAnthropic = 14

|

||||||

ChannelTypeBaidu = 15

|

ChannelTypeBaidu = 15

|

||||||

ChannelTypeZhipu = 16

|

ChannelTypeZhipu = 16

|

||||||

|

ChannelTypeAli = 17

|

||||||

|

ChannelTypeXunfei = 18

|

||||||

|

ChannelType360 = 19

|

||||||

|

ChannelTypeOpenRouter = 20

|

||||||

|

ChannelTypeAIProxyLibrary = 21

|

||||||

|

ChannelTypeFastGPT = 22

|

||||||

)

|

)

|

||||||

|

|

||||||

var ChannelBaseURLs = []string{

|

var ChannelBaseURLs = []string{

|

||||||

"", // 0

|

"", // 0

|

||||||

"https://api.openai.com", // 1

|

"https://api.openai.com", // 1

|

||||||

"https://oa.api2d.net", // 2

|

"https://oa.api2d.net", // 2

|

||||||

"", // 3

|

"", // 3

|

||||||

"https://api.closeai-proxy.xyz", // 4

|

"https://api.closeai-proxy.xyz", // 4

|

||||||

"https://api.openai-sb.com", // 5

|

"https://api.openai-sb.com", // 5

|

||||||

"https://api.openaimax.com", // 6

|

"https://api.openaimax.com", // 6

|

||||||

"https://api.ohmygpt.com", // 7

|

"https://api.ohmygpt.com", // 7

|

||||||

"", // 8

|

"", // 8

|

||||||

"https://api.caipacity.com", // 9

|

"https://api.caipacity.com", // 9

|

||||||

"https://api.aiproxy.io", // 10

|

"https://api.aiproxy.io", // 10

|

||||||

"", // 11

|

"", // 11

|

||||||

"https://api.api2gpt.com", // 12

|

"https://api.api2gpt.com", // 12

|

||||||

"https://api.aigc2d.com", // 13

|

"https://api.aigc2d.com", // 13

|

||||||

"https://api.anthropic.com", // 14

|

"https://api.anthropic.com", // 14

|

||||||

"https://aip.baidubce.com", // 15

|

"https://aip.baidubce.com", // 15

|

||||||

"https://open.bigmodel.cn", // 16

|

"https://open.bigmodel.cn", // 16

|

||||||

|

"https://dashscope.aliyuncs.com", // 17

|

||||||

|

"", // 18

|

||||||

|

"https://ai.360.cn", // 19

|

||||||

|

"https://openrouter.ai/api", // 20

|

||||||

|

"https://api.aiproxy.io", // 21

|

||||||

|

"https://fastgpt.run/api/openapi", // 22

|

||||||

}

|

}

|

||||||

|

|||||||

@ -1,6 +1,9 @@

|

|||||||

package common

|

package common

|

||||||

|

|

||||||

import "encoding/json"

|

import (

|

||||||

|

"encoding/json"

|

||||||

|

"strings"

|

||||||

|

)

|

||||||

|

|

||||||

// ModelRatio

|

// ModelRatio

|

||||||

// https://platform.openai.com/docs/models/model-endpoint-compatibility

|

// https://platform.openai.com/docs/models/model-endpoint-compatibility

|

||||||

@ -10,42 +13,52 @@ import "encoding/json"

|

|||||||

// 1 === $0.002 / 1K tokens

|

// 1 === $0.002 / 1K tokens

|

||||||

// 1 === ¥0.014 / 1k tokens

|

// 1 === ¥0.014 / 1k tokens

|

||||||

var ModelRatio = map[string]float64{

|

var ModelRatio = map[string]float64{

|

||||||

"gpt-4": 15,

|

"gpt-4": 15,

|

||||||

"gpt-4-0314": 15,

|

"gpt-4-0314": 15,

|

||||||

"gpt-4-0613": 15,

|

"gpt-4-0613": 15,

|

||||||

"gpt-4-32k": 30,

|

"gpt-4-32k": 30,

|

||||||

"gpt-4-32k-0314": 30,

|

"gpt-4-32k-0314": 30,

|

||||||

"gpt-4-32k-0613": 30,

|

"gpt-4-32k-0613": 30,

|

||||||

"gpt-3.5-turbo": 0.75, // $0.0015 / 1K tokens

|

"gpt-3.5-turbo": 0.75, // $0.0015 / 1K tokens

|

||||||

"gpt-3.5-turbo-0301": 0.75,

|

"gpt-3.5-turbo-0301": 0.75,

|

||||||

"gpt-3.5-turbo-0613": 0.75,

|

"gpt-3.5-turbo-0613": 0.75,

|

||||||

"gpt-3.5-turbo-16k": 1.5, // $0.003 / 1K tokens

|

"gpt-3.5-turbo-16k": 1.5, // $0.003 / 1K tokens

|

||||||

"gpt-3.5-turbo-16k-0613": 1.5,

|

"gpt-3.5-turbo-16k-0613": 1.5,

|

||||||

"text-ada-001": 0.2,

|

"text-ada-001": 0.2,

|

||||||

"text-babbage-001": 0.25,

|

"text-babbage-001": 0.25,

|

||||||

"text-curie-001": 1,

|

"text-curie-001": 1,

|

||||||

"text-davinci-002": 10,

|

"text-davinci-002": 10,

|

||||||

"text-davinci-003": 10,

|

"text-davinci-003": 10,

|

||||||

"text-davinci-edit-001": 10,

|

"text-davinci-edit-001": 10,

|

||||||

"code-davinci-edit-001": 10,

|

"code-davinci-edit-001": 10,

|

||||||

"whisper-1": 10,

|

"whisper-1": 15, // $0.006 / minute -> $0.006 / 150 words -> $0.006 / 200 tokens -> $0.03 / 1k tokens

|

||||||

"davinci": 10,

|

"davinci": 10,

|

||||||

"curie": 10,

|

"curie": 10,

|

||||||

"babbage": 10,

|

"babbage": 10,

|

||||||

"ada": 10,

|

"ada": 10,

|

||||||

"text-embedding-ada-002": 0.05,

|

"text-embedding-ada-002": 0.05,

|

||||||

"text-search-ada-doc-001": 10,

|

"text-search-ada-doc-001": 10,

|

||||||

"text-moderation-stable": 0.1,

|

"text-moderation-stable": 0.1,

|

||||||

"text-moderation-latest": 0.1,

|

"text-moderation-latest": 0.1,

|

||||||

"dall-e": 8,

|

"dall-e": 8,

|

||||||

"claude-instant-1": 0.75,

|

"claude-instant-1": 0.815, // $1.63 / 1M tokens

|

||||||

"claude-2": 30,

|

"claude-2": 5.51, // $11.02 / 1M tokens

|

||||||

"ERNIE-Bot": 0.8572, // ¥0.012 / 1k tokens

|

"ERNIE-Bot": 0.8572, // ¥0.012 / 1k tokens

|

||||||

"ERNIE-Bot-turbo": 0.5715, // ¥0.008 / 1k tokens

|

"ERNIE-Bot-turbo": 0.5715, // ¥0.008 / 1k tokens

|

||||||

"PaLM-2": 1,

|

"Embedding-V1": 0.1429, // ¥0.002 / 1k tokens

|

||||||

"chatglm_pro": 0.7143, // ¥0.01 / 1k tokens

|

"PaLM-2": 1,

|

||||||

"chatglm_std": 0.3572, // ¥0.005 / 1k tokens

|

"chatglm_pro": 0.7143, // ¥0.01 / 1k tokens

|

||||||

"chatglm_lite": 0.1429, // ¥0.002 / 1k tokens

|

"chatglm_std": 0.3572, // ¥0.005 / 1k tokens

|

||||||

|

"chatglm_lite": 0.1429, // ¥0.002 / 1k tokens

|

||||||

|

"qwen-v1": 0.8572, // ¥0.012 / 1k tokens

|

||||||

|

"qwen-plus-v1": 1, // ¥0.014 / 1k tokens

|

||||||

|

"text-embedding-v1": 0.05, // ¥0.0007 / 1k tokens

|

||||||

|

"SparkDesk": 1.2858, // ¥0.018 / 1k tokens

|

||||||

|

"360GPT_S2_V9": 0.8572, // ¥0.012 / 1k tokens

|

||||||

|

"embedding-bert-512-v1": 0.0715, // ¥0.001 / 1k tokens

|

||||||

|

"embedding_s1_v1": 0.0715, // ¥0.001 / 1k tokens

|

||||||

|

"semantic_similarity_s1_v1": 0.0715, // ¥0.001 / 1k tokens

|

||||||

|

"360GPT_S2_V9.4": 0.8572, // ¥0.012 / 1k tokens

|

||||||

}

|

}

|

||||||

|

|

||||||
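The comments at the top of `ModelRatio` fix the unit: a ratio of 1 corresponds to $0.002 or ¥0.014 per 1K tokens. A minimal sketch of that conversion follows; the helper names are hypothetical and are not part of the diff.

```go
package main

import "fmt"

// Base prices for a ratio of 1, as stated in the ModelRatio comments.
const (
	usdPerRatioUnit = 0.002 // USD per 1K tokens
	cnyPerRatioUnit = 0.014 // CNY per 1K tokens
)

// ratioFromUSD converts a USD price per 1K tokens into a model ratio.
func ratioFromUSD(pricePer1K float64) float64 { return pricePer1K / usdPerRatioUnit }

// ratioFromCNY converts a CNY price per 1K tokens into a model ratio.
func ratioFromCNY(pricePer1K float64) float64 { return pricePer1K / cnyPerRatioUnit }

func main() {
	fmt.Printf("gpt-3.5-turbo: %.4f\n", ratioFromUSD(0.0015))
	fmt.Printf("whisper-1:     %.4f\n", ratioFromUSD(0.03))
	fmt.Printf("ERNIE-Bot:     %.4f\n", ratioFromCNY(0.012))
}
```

The CNY entries in the map appear to be rounded up to four decimals (¥0.012 gives roughly 0.8571, stored as `0.8572`), so a direct comparison against the table needs a small tolerance.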

func ModelRatio2JSONString() string {

|

func ModelRatio2JSONString() string {

|

||||||

@ -69,3 +82,19 @@ func GetModelRatio(name string) float64 {

|

|||||||

}

|

}

|

||||||

return ratio

|

return ratio

|

||||||

}

|

}

|

||||||

|

|

||||||

|

func GetCompletionRatio(name string) float64 {

|

||||||

|

if strings.HasPrefix(name, "gpt-3.5") {

|

||||||

|

return 1.333333

|

||||||

|

}

|

||||||

|

if strings.HasPrefix(name, "gpt-4") {

|

||||||

|

return 2

|

||||||

|

}

|

||||||

|

if strings.HasPrefix(name, "claude-instant-1") {

|

||||||

|

return 3.38

|

||||||

|

}

|

||||||

|

if strings.HasPrefix(name, "claude-2") {

|

||||||

|

return 2.965517

|

||||||

|

}

|

||||||

|

return 1

|

||||||

|

}

|

||||||

|

|||||||

@ -61,3 +61,8 @@ func RedisDel(key string) error {

|

|||||||

ctx := context.Background()

|

ctx := context.Background()

|

||||||

return RDB.Del(ctx, key).Err()

|

return RDB.Del(ctx, key).Err()

|

||||||

}

|

}

|

||||||

|

|

||||||

|

func RedisDecrease(key string, value int64) error {

|

||||||

|

ctx := context.Background()

|

||||||

|

return RDB.DecrBy(ctx, key, value).Err()

|

||||||

|

}

|

||||||

|

|||||||

@ -7,6 +7,7 @@ import (

|

|||||||

"log"

|

"log"

|

||||||

"math/rand"

|

"math/rand"

|

||||||

"net"

|

"net"

|

||||||

|

"os"

|

||||||

"os/exec"

|

"os/exec"

|

||||||

"runtime"

|

"runtime"

|

||||||

"strconv"

|

"strconv"

|

||||||

@ -177,3 +178,15 @@ func Max(a int, b int) int {

|

|||||||

return b

|

return b

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||

|

func GetOrDefault(env string, defaultValue int) int {

|

||||||

|

if env == "" || os.Getenv(env) == "" {

|

||||||

|

return defaultValue

|

||||||

|

}

|

||||||

|

num, err := strconv.Atoi(os.Getenv(env))

|

||||||

|

if err != nil {

|

||||||

|

SysError(fmt.Sprintf("failed to parse %s: %s, using default value: %d", env, err.Error(), defaultValue))

|

||||||

|

return defaultValue

|

||||||

|

}

|

||||||

|

return num

|

||||||

|

}

|

||||||

|

|||||||

@ -85,7 +85,6 @@ func GetAuthHeader(token string) http.Header {

|

|||||||

}

|

}

|

||||||

|

|

||||||

func GetResponseBody(method, url string, channel *model.Channel, headers http.Header) ([]byte, error) {

|

func GetResponseBody(method, url string, channel *model.Channel, headers http.Header) ([]byte, error) {

|

||||||

client := &http.Client{}

|

|

||||||

req, err := http.NewRequest(method, url, nil)

|

req, err := http.NewRequest(method, url, nil)

|

||||||

if err != nil {

|

if err != nil {

|

||||||

return nil, err

|

return nil, err

|

||||||

@ -93,7 +92,7 @@ func GetResponseBody(method, url string, channel *model.Channel, headers http.He

|

|||||||

for k := range headers {

|

for k := range headers {

|

||||||

req.Header.Add(k, headers.Get(k))

|

req.Header.Add(k, headers.Get(k))

|

||||||

}

|

}

|

||||||

res, err := client.Do(req)

|

res, err := httpClient.Do(req)

|

||||||

if err != nil {

|

if err != nil {

|

||||||

return nil, err

|

return nil, err

|

||||||

}

|

}

|

||||||

|

|||||||

@ -14,10 +14,29 @@ import (

|

|||||||

"time"

|

"time"

|

||||||

)

|

)

|

||||||

|

|

||||||

func testChannel(channel *model.Channel, request ChatRequest) (error, *OpenAIError) {

|

func testChannel(channel *model.Channel, request ChatRequest) (err error, openaiErr *OpenAIError) {

|

||||||

switch channel.Type {

|

switch channel.Type {

|

||||||

|

case common.ChannelTypePaLM:

|

||||||

|

fallthrough

|

||||||

|

case common.ChannelTypeAnthropic:

|

||||||

|

fallthrough

|

||||||

|

case common.ChannelTypeBaidu:

|

||||||

|

fallthrough

|

||||||

|

case common.ChannelTypeZhipu:

|

||||||

|

fallthrough

|

||||||

|

case common.ChannelTypeAli:

|

||||||

|

fallthrough

|

||||||

|

case common.ChannelType360:

|

||||||

|

fallthrough

|

||||||

|

case common.ChannelTypeXunfei:

|

||||||

|

return errors.New("该渠道类型当前版本不支持测试,请手动测试"), nil

|

||||||

case common.ChannelTypeAzure:

|

case common.ChannelTypeAzure:

|

||||||

request.Model = "gpt-35-turbo"

|

request.Model = "gpt-35-turbo"

|

||||||

|

defer func() {

|

||||||

|

if err != nil {

|

||||||

|

err = errors.New("请确保已在 Azure 上创建了 gpt-35-turbo 模型,并且 apiVersion 已正确填写!")

|

||||||

|

}

|

||||||

|

}()

|

||||||

default:

|

default:

|

||||||

request.Model = "gpt-3.5-turbo"

|

request.Model = "gpt-3.5-turbo"

|

||||||

}

|

}

|

||||||

@ -45,8 +64,7 @@ func testChannel(channel *model.Channel, request ChatRequest) (error, *OpenAIErr

|

|||||||

req.Header.Set("Authorization", "Bearer "+channel.Key)

|

req.Header.Set("Authorization", "Bearer "+channel.Key)

|

||||||

}

|

}

|

||||||

req.Header.Set("Content-Type", "application/json")

|

req.Header.Set("Content-Type", "application/json")

|

||||||

client := &http.Client{}

|

resp, err := httpClient.Do(req)

|

||||||

resp, err := client.Do(req)

|

|

||||||

if err != nil {

|

if err != nil {

|

||||||

return err, nil

|

return err, nil

|

||||||

}

|

}

|

||||||

@ -165,7 +183,7 @@ func testAllChannels(notify bool) error {

|

|||||||

err = errors.New(fmt.Sprintf("响应时间 %.2fs 超过阈值 %.2fs", float64(milliseconds)/1000.0, float64(disableThreshold)/1000.0))

|

err = errors.New(fmt.Sprintf("响应时间 %.2fs 超过阈值 %.2fs", float64(milliseconds)/1000.0, float64(disableThreshold)/1000.0))

|

||||||

disableChannel(channel.Id, channel.Name, err.Error())

|

disableChannel(channel.Id, channel.Name, err.Error())

|

||||||

}

|

}

|

||||||

if shouldDisableChannel(openaiErr) {

|

if shouldDisableChannel(openaiErr, -1) {

|

||||||

disableChannel(channel.Id, channel.Name, err.Error())

|

disableChannel(channel.Id, channel.Name, err.Error())

|

||||||

}

|

}

|

||||||

channel.UpdateResponseTime(milliseconds)

|

channel.UpdateResponseTime(milliseconds)

|

||||||

|

|||||||

@ -85,7 +85,7 @@ func AddChannel(c *gin.Context) {

|

|||||||

}

|

}

|

||||||

channel.CreatedTime = common.GetTimestamp()

|

channel.CreatedTime = common.GetTimestamp()

|

||||||

keys := strings.Split(channel.Key, "\n")

|

keys := strings.Split(channel.Key, "\n")

|

||||||

channels := make([]model.Channel, 0)

|

channels := make([]model.Channel, 0, len(keys))

|

||||||

for _, key := range keys {

|

for _, key := range keys {

|

||||||

if key == "" {

|

if key == "" {

|

||||||

continue

|

continue

|

||||||

|

|||||||

@ -79,6 +79,14 @@ func getGitHubUserInfoByCode(code string) (*GitHubUser, error) {

|

|||||||

|

|

||||||

func GitHubOAuth(c *gin.Context) {

|

func GitHubOAuth(c *gin.Context) {

|

||||||

session := sessions.Default(c)

|

session := sessions.Default(c)

|

||||||

|

state := c.Query("state")

|

||||||

|

if state == "" || session.Get("oauth_state") == nil || state != session.Get("oauth_state").(string) {

|

||||||

|

c.JSON(http.StatusForbidden, gin.H{

|

||||||

|

"success": false,

|

||||||

|

"message": "state is empty or not same",

|

||||||

|

})

|

||||||

|

return

|

||||||

|

}

|

||||||

username := session.Get("username")

|

username := session.Get("username")

|

||||||

if username != nil {

|

if username != nil {

|

||||||

GitHubBind(c)

|

GitHubBind(c)

|

||||||

@ -205,3 +213,22 @@ func GitHubBind(c *gin.Context) {

|

|||||||

})

|

})

|

||||||

return

|

return

|

||||||

}

|

}

|

||||||

|

|

||||||

|

func GenerateOAuthCode(c *gin.Context) {

|

||||||

|

session := sessions.Default(c)

|

||||||

|

state := common.GetRandomString(12)

|

||||||

|

session.Set("oauth_state", state)

|

||||||

|

err := session.Save()

|

||||||

|

if err != nil {

|

||||||

|

c.JSON(http.StatusOK, gin.H{

|

||||||

|

"success": false,

|

||||||

|

"message": err.Error(),

|

||||||

|

})

|

||||||

|

return

|

||||||

|

}

|

||||||

|

c.JSON(http.StatusOK, gin.H{

|

||||||

|

"success": true,

|

||||||

|

"message": "",

|

||||||

|

"data": state,

|

||||||

|

})

|

||||||

|

}

|

||||||

|

|||||||
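The `oauth_state` handling above follows the usual pattern of issuing a random value before the redirect and rejecting the callback unless the same value comes back. A minimal sketch of the two halves, independent of gin and the session store, might look like this; the function names are hypothetical, and the use of `crypto/rand` and a constant-time comparison are choices of this sketch rather than what the diff does.

```go
package main

import (
	"crypto/rand"
	"crypto/subtle"
	"encoding/hex"
	"fmt"
)

// newState returns a 12-character random hex string to store in the session.
func newState() (string, error) {
	buf := make([]byte, 6) // 6 random bytes encode to 12 hex characters
	if _, err := rand.Read(buf); err != nil {
		return "", err
	}
	return hex.EncodeToString(buf), nil
}

// stateMatches reports whether the state sent back by the provider equals
// the stored one. A missing or non-string stored value is rejected without
// panicking, as is an empty state.
func stateMatches(stored interface{}, got string) bool {
	want, ok := stored.(string)
	if !ok || got == "" {
		return false
	}
	return subtle.ConstantTimeCompare([]byte(want), []byte(got)) == 1
}

func main() {
	state, err := newState()
	if err != nil {
		panic(err)
	}
	fmt.Println(stateMatches(state, state)) // same value round-tripped
	fmt.Println(stateMatches(nil, state))   // nothing stored in the session
}
```

Using the two-value type assertion means a session that holds an unexpected type is treated as a mismatch instead of crashing the handler.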

@ -3,10 +3,12 @@ package controller

|

|||||||

import (

|

import (

|

||||||

"encoding/json"

|

"encoding/json"

|

||||||

"fmt"

|

"fmt"

|

||||||

"github.com/gin-gonic/gin"

|

|

||||||

"net/http"

|

"net/http"

|

||||||

"one-api/common"

|

"one-api/common"

|

||||||

"one-api/model"

|

"one-api/model"

|

||||||

|

"strings"

|

||||||

|

|

||||||

|

"github.com/gin-gonic/gin"

|

||||||

)

|

)

|

||||||

|

|

||||||

func GetStatus(c *gin.Context) {

|

func GetStatus(c *gin.Context) {

|

||||||

@ -78,6 +80,22 @@ func SendEmailVerification(c *gin.Context) {

|

|||||||

})

|

})

|

||||||

return

|

return

|

||||||

}

|

}

|

||||||

|

if common.EmailDomainRestrictionEnabled {

|

||||||

|

allowed := false

|

||||||

|

for _, domain := range common.EmailDomainWhitelist {

|

||||||

|

if strings.HasSuffix(email, "@"+domain) {

|

||||||

|

allowed = true

|

||||||

|

break

|

||||||

|

}

|

||||||

|

}

|

||||||

|

if !allowed {

|

||||||

|

c.JSON(http.StatusOK, gin.H{

|

||||||

|

"success": false,

|

||||||

|

"message": "管理员启用了邮箱域名白名单,您的邮箱地址的域名不在白名单中",

|

||||||

|

})

|

||||||

|

return

|

||||||

|

}

|

||||||

|

}

|

||||||

if model.IsEmailAlreadyTaken(email) {

|

if model.IsEmailAlreadyTaken(email) {

|

||||||

c.JSON(http.StatusOK, gin.H{

|

c.JSON(http.StatusOK, gin.H{

|

||||||

"success": false,

|

"success": false,

|

||||||

|

|||||||
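The whitelist check in `SendEmailVerification` reduces to a suffix match on `"@" + domain`. The sketch below pulls it into a standalone function (a hypothetical name, not part of the diff) to show why the `@` prefix matters.

```go
package main

import (
	"fmt"
	"strings"
)

// domainAllowed reports whether email ends with "@<domain>" for any
// whitelisted domain. Including the "@" prevents "x@notgmail.com" from
// matching "gmail.com". The comparison is case-sensitive, as in the diff.
func domainAllowed(email string, whitelist []string) bool {
	for _, domain := range whitelist {
		if strings.HasSuffix(email, "@"+domain) {
			return true
		}
	}
	return false
}

func main() {
	whitelist := []string{"gmail.com", "qq.com"}
	fmt.Println(domainAllowed("alice@gmail.com", whitelist))
	fmt.Println(domainAllowed("alice@notgmail.com", whitelist))
}
```

Since the match is case-sensitive, an address such as `alice@Gmail.com` would be rejected unless the input is lowercased first; whether that matters depends on how addresses are normalized elsewhere.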

@ -63,6 +63,15 @@ func init() {

|

|||||||

Root: "dall-e",

|

Root: "dall-e",

|

||||||

Parent: nil,

|

Parent: nil,

|

||||||

},

|

},

|

||||||

|

{

|

||||||

|

Id: "whisper-1",

|

||||||

|

Object: "model",

|

||||||

|

Created: 1677649963,

|

||||||

|

OwnedBy: "openai",

|

||||||

|

Permission: permission,

|

||||||

|

Root: "whisper-1",

|

||||||

|

Parent: nil,

|

||||||

|

},

|

||||||

{

|

{

|

||||||

Id: "gpt-3.5-turbo",

|

Id: "gpt-3.5-turbo",

|

||||||

Object: "model",

|

Object: "model",

|

||||||

@ -288,6 +297,15 @@ func init() {

|

|||||||

Root: "ERNIE-Bot-turbo",

|

Root: "ERNIE-Bot-turbo",

|

||||||

Parent: nil,

|

Parent: nil,

|

||||||

},

|

},

|

||||||

|

{

|

||||||

|

Id: "Embedding-V1",

|

||||||

|

Object: "model",

|

||||||

|

Created: 1677649963,

|

||||||

|

OwnedBy: "baidu",

|

||||||

|

Permission: permission,

|

||||||

|

Root: "Embedding-V1",

|

||||||

|

Parent: nil,

|

||||||

|

},

|

||||||

{

|

{

|

||||||

Id: "PaLM-2",

|

Id: "PaLM-2",

|

||||||

Object: "model",

|

Object: "model",

|

||||||

@ -324,6 +342,87 @@ func init() {

|

|||||||

Root: "chatglm_lite",

|

Root: "chatglm_lite",

|

||||||

Parent: nil,

|

Parent: nil,

|

||||||

},

|

},

|

||||||

|

{

|

||||||

|

Id: "qwen-v1",

|

||||||

|

Object: "model",

|

||||||

|

Created: 1677649963,

|

||||||

|

OwnedBy: "ali",

|

||||||

|

Permission: permission,

|

||||||

|

Root: "qwen-v1",

|

||||||

|

Parent: nil,

|

||||||

|

},

|

||||||

|

{

|

||||||

|

Id: "qwen-plus-v1",

|

||||||

|

Object: "model",

|

||||||

|

Created: 1677649963,

|

||||||

|

OwnedBy: "ali",

|

||||||

|

Permission: permission,

|

||||||

|

Root: "qwen-plus-v1",

|

||||||

|

Parent: nil,

|

||||||

|

},

|

||||||

|

{

|

||||||

|